Since we last saw HPE at ISC a year ago, the company embarked on a strong run of success in HPC and supercomputing – successes the company will no doubt be happy to discuss at virtual ISC 2021.

Since we last saw HPE at ISC a year ago, the company embarked on a strong run of success in HPC and supercomputing – successes the company will no doubt be happy to discuss at virtual ISC 2021.

This being the year of exascale, HPE is likely to put toward the top of its list of HPC achievements is its Frontier system getting moved up chronologically on the U.S. Department of Energy’s schedule of exascale installations; the system is expected to be the first American exascale to be stood up later this year at Oak Ridge National Laboratory. El Capitan, another exascale (2 exaflops) system for which HPE-Cray is the lead contractor, is scheduled for installation at Lawrence Livermore National Laboratory in early 2023.

A third exascale system, Argonne National Laboratory’s Aurora, for which Intel is the prime, will have a heavy HPE-Cray flavor, incorporating the HPE Cray EX supercomputer, formerly called Cray “Shasta”.

Stepping down from the rarefied level of exascale, HPE announced a string of customer wins over the past 12 months, including:

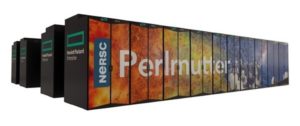

- the U.S. National Energy Research Scientific Computing Center recently unveiled the Perlmutter HPC system, a beast of a machine powered by 6,159 Nvidia A100 GPUs and delivering 4 exaflops of mixed precision performance based on the HPE Cray Shasta platform architecture, including HPE Slingshot HPC interconnect and a Cray ClusterStor E1000 All Flash storage system with 35 petabyte usable capacity;

- in a £1 billion-plus deal over 10 years, Microsoft Azure will integrate HPE Cray EX supercomputers for the UK Met Office, a system expected to be in the top 25 of the Top500 list of the world’s fastest HPC systems, according the weather service, and twice as powerful as any other in the UK.

an HPE Cray EX system targeting modeling and simulation in academic pursuits and industrial areas, including drug design and renewable energy for KTH Royal Institute of Technology (KTH) in Stockholm, funded by Swedish National Infrastructure for Computing (SNIC);

an HPE Cray EX system targeting modeling and simulation in academic pursuits and industrial areas, including drug design and renewable energy for KTH Royal Institute of Technology (KTH) in Stockholm, funded by Swedish National Infrastructure for Computing (SNIC);- the delivery of a 7.2 petaflops supercomputer to support weather modeling and forecasting for the U.S. Air force and Army powered by two HPE Cray EX supercomputers in operation at Oak Ridge National Laboratory, where it is managed by ORNL.

- the expansion of NASA’s “Aitken” supercomputer with HPE Apollo systems that became operational last January 2021 built for compute-intensive CFD modeling and simulation.

- a contract to build a $35M+ supercomputer for the National Center for Atmospheric Research (NCAR) – 3.5 faster and 6 x more energy efficient supercomputer for extreme weather research.

- in tandem with AMD, HPE announced last October that Australia’s Pawsey Supercomputing Centre awarded HPE a $48AUD million systems contract; and that Los Alamos National Lab said it has stood up the “Chicoma” system based on AMD processors and the HPE Cray EX supercomputer.

- to be used by the DOE’s Los Alamos National Laboratory, Lawrence Livermore National Laboratory and Sandia National Laboratory, a $105 million system built with the HPE Cray EX supercomputer (“Crossroads”) to be installed in spring of 2022 with quadrupled performance over the existing system for the U.S. Department of Energy’s National Nuclear Security Administration (NNSA)

- and on the Arm front, last June, the Leibniz Supercomputing Centre (LRZ) in Munich announced it will deploy HPE’s Cray CS500 with Fujitsu A64FX chips based on the Arm architecture

- Microsoft has partnered with HPE to take the Met Office’s new HPE Cray EX supercomputer to the cloud, enabling the Met Office to work and act on the insights of their data using AI, modeling, and simulation.

In addition, this week HPE announced the acquisition Determined AI, a San Francisco-based startup with a software stack designed to train AI models faster using its open source machine learning (ML) platform.

In addition, this week HPE announced the acquisition Determined AI, a San Francisco-based startup with a software stack designed to train AI models faster using its open source machine learning (ML) platform.

We recently talked with HPE’s Joseph George, a long-time figure in the HPC community who came over to the company from Cray when it was acquired by HPE in 2019. George, HPE’s VP, global industry and alliance marketing, said that in some ways, ISC is for HPE a continuation from its June22-24 HPE Discover bash – at least as far as HPC goes.

“Our biggest customer event is HPE Discover, and HPE Discover this year takes place one week before ISC, so it works out well, because a lot of the presentations and content that we’re publishing are there,” George said. “So we’ll be linking back to this content, a lot of really rich, good information for our customers and potentially attendees to take a look at.”

“And a good deal of that content, he said, will support HPE’s “objective of delivering systems that perform like a supercomputer and run like a cloud. Our goal is to power all sorts of new workloads in any size for every data center… We’re building on this heritage and history and impact of exascale. You’ve heard a lot about that from us for a long time. But within the confines of exascale, within that methodology, there are workloads and data that flow fluidly across system architectures and different organizations. So we’re trying to bring that concept to every data center of any size.”

That core message – “perform like a supercomputer and run like a cloud” – means merging the qualities of both entities.

HPE’s Joseph George

“If we look at what we’ve been delivering in a supercomputer space for a long time,” George told us, “the scale that we get to for tackling some of these big challenges that require that supercomputer horsepower and capability and functionality across the software across the network, across storage…, leveraging all of that goodness. But when you get to cloud, you think about the ability to manage it in a more modern way, how can I manage the software, integrate the software with API’s? How am I able to run it at a cloud scale, especially as you start getting into other data centers, or in the enterprise. Our customers are now interfacing with their compute and storage and infrastructure, with things like API’s, with more centralized consoles, that are managed in cloud data centers, so how do we incorporate those elements and those tenants into how our supercomputer customers are managing those devices? And then vice versa: how do we go to customers that are familiar with their cloud infrastructure but bring the performance and power of a supercomputer? It’s bringing together those capabilities, servicing our customers where they are in their journey.”

At ISC, HPE will be involved in more than 10 sessions throughout the conference (see here and here) coving such topics as Pankaj Goyal, HPE VP HPC/AI & Compute Product Management, speaking on “Innovation Powers the HPC and AI Journey”; Torsten Wilde, HPE master system architect, on “Guidelines for HPC Data Center Monitoring and Analytics Framework Development”; and Jonathan Bill Sparks, HPE technologist, who will speak about “Containers in HPC.”

Another session involving HPE and other will be held on Thursday, July 2: “Trends and Directions in HPC: HPE, Microsoft Azure, NEC, T-Systems,” featuring Nicolas Dube, fellow and VP at HPE, and Addison Snell, co-founder and CEO of HPC industry analyst firm Intersect360 Research.

All of this and other activities HPE will be engaged in at ISC can be found at the company’s virtual booth, located at https://www.isc-hpc.com/sponsor-exhibitor-listing.html.