Intel has unveiled a programmable networking device for hyperscalers and their massive data center infrastructures.

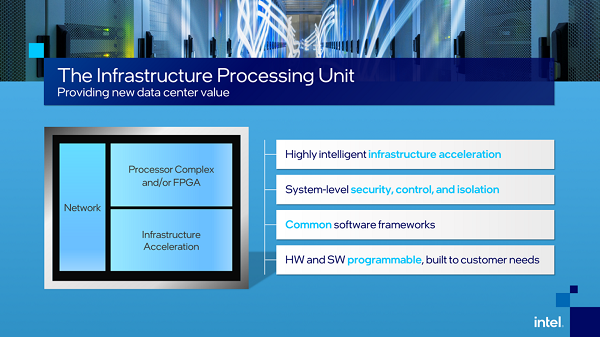

Called the Infrastructure Processing Unit (IPU) and announced during the Six Five Summit, the networking device is intended to help cloud and communication service providers cut overhead and free up CPU performance, so they can better utilize resources and balance processing and storage.

“It allows cloud operators to shift to a fully virtualized storage and network architecture while maintaining high performance and predictability, as well as a high degree of control,” Intel said in a blog announcing the new product. “The IPU has dedicated functionality to accelerate modern applications that are built using a microservice-based architecture in the data center. Research from Google and Facebook has shown 22 percent [1] to 80 percent [2] of CPU cycles can be consumed by microservices communication overhead.”

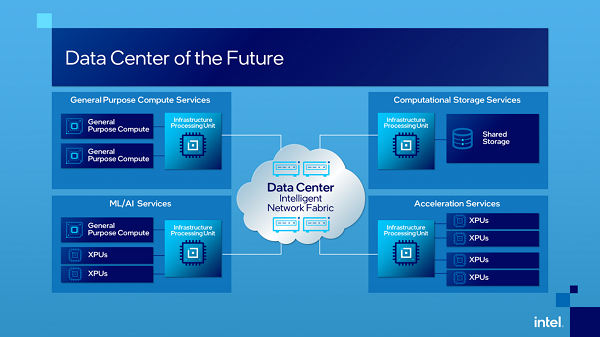

The IPU fits with other Intel efforts in support of heterogeneous computing. For several years, the company has declared itself no longer a CPU-first company but a CPU-also company, with a multi-architecture “XPU” strategy encompassing GPUs, FPGAs, Arm and other processors used in HPC workloads. In fact, as part of its announcement, Intel said it will roll out additional FPGA-based IPU platforms and dedicated ASICs. Another XPU-related Intel initiative is its role in forming the CXL consortium, built around an interconnect that enables data exchange among disparate chips (see “Tear Down These Walls: How CXL Could Reinvent the Data Center”).

In Intel’s view of data center evolution, a new intelligent architecture is required “where large-scale distributed heterogeneous compute systems work together and is connected seamlessly to appear as a single compute platform. This new architecture will help resolve today’s challenges of stranded resources, congested data flows and incompatible platform security. This intelligent data center architecture will have three categories of compute — CPU for general-purpose compute, XPU for application-specific or workload-specific acceleration, and IPU for infrastructure acceleration — that will be connected through programmable networks to efficiently utilize data center resources.”

“The IPU is a new category of technologies and is one of the strategic pillars of our cloud strategy,” said Guido Appenzeller, CTO of Intel’s Data Platforms Group. “It expands upon our SmartNIC capabilities and is designed to address the complexity and inefficiencies in the modern data center. At Intel, we are dedicated to creating solutions and innovating alongside our customer and partners — the IPU exemplifies this collaboration.”

Source: Intel. [1] “Profiling a warehouse-scale computer,” Svilen Kanev, Juan Pablo Darago, Kim M Hazelwood, Parthasarathy Ranganathan, Tipp J Moseley, Gu-Yeon Wei, David Michael Brooks, ISCA’15, https://research.google/pubs/pub44271.pdf, figure 4. [2] https://research.fb.com/publications/accelerometer-understanding-acceleration-opportunities-for-data-center-overheads-at-hyperscale/