<SPONSORED CONTENT> This insideHPC technology guide, insideHPC Guide to HPC Fusion Computing Model – A Reference Architecture for Liberating Data, discusses why organizations need to adopt a Fusion Computing Model so that data can be processed, analyzed, and stored in a way that keeps it from being static.

Archiving Data Case Study

Active archives are built on a hibernation paradigm. Active, tiered, and traditional archiving approaches are neither future-proof nor performant enough for today’s data-in-motion business needs. Organizations must therefore move beyond data hibernation to the total liberation of data and its metadata.

Crucial questions organizations should ask include:

- What is your relationship with your data?

- Are you making infrastructure decisions for storage?

- Are you using data and its relationship to the organization to make data decisions?

“Data are a precious thing and will last longer than the systems themselves,” said Sir Tim Berners-Lee, inventor of the World Wide Web. “We must rethink the way we view data and its value,” states Earl J. Dodd of WWT.

Let’s apply the Fusion Computing Model to create a freedom archive paradigm that addresses two challenges organizations face:

- Data migration from legacy file and archive systems, faced by the VP of IT Infrastructure, Cloud Executive, System Support VP, and others: They need an orchestration capability that provides a global namespace, giving their communities access to any authorized data source regardless of its migration state or destination media type. The fundamental approach here is to free legacy systems today, allowing data to move to any future platform (core, cloud, edge, or hybrid).

- Data dispersion and the resulting sprawl, faced by the Chief Data Officer, VP of Infrastructure, Data Security Executive, and others: They need to understand and measure how, where, and why data and metadata spread. The fundamental approach here is to free data and metadata ingestion, analytics, and cataloging beyond the maintained data lifecycle to enable real-time governance and monitoring. The global data catalog knows how your data are used and by whom, and it can enforce policy-based governance regardless of the back-end technology infrastructure.
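To make the global data catalog idea more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of any WWT, Seagate, or Intel product. The `GlobalCatalog` and `CatalogEntry` classes, the "core"/"cloud"/"edge" location labels, and the role-based policy are all hypothetical. The catalog records where each dataset lives in a global namespace, checks a simple policy on every read, and logs who asked for what. Because the check runs against catalog metadata rather than the storage back end, the same policy still applies after the data migrates.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Metadata the (hypothetical) global catalog keeps for one dataset."""
    path: str            # global-namespace path, independent of the back end
    location: str        # hypothetical placement label: "core", "cloud", or "edge"
    classification: str  # hypothetical data class, e.g. "public" or "restricted"
    access_log: list = field(default_factory=list)  # (user, role, allowed) tuples


class GlobalCatalog:
    """Toy catalog that enforces a role-based policy using catalog metadata only."""

    def __init__(self, policy):
        # policy maps a classification to the set of roles allowed to read it
        self.policy = policy
        self.entries = {}

    def register(self, entry):
        self.entries[entry.path] = entry

    def move(self, path, new_location):
        # Data can migrate between back ends; its namespace path stays the same.
        self.entries[path].location = new_location

    def read(self, path, user, role):
        entry = self.entries[path]
        allowed = role in self.policy.get(entry.classification, set())
        # Record every attempt so the catalog knows how data are used and by whom.
        entry.access_log.append((user, role, allowed))
        if not allowed:
            raise PermissionError(f"{user} ({role}) may not read {path}")
        return entry.location


if __name__ == "__main__":
    catalog = GlobalCatalog({"public": {"analyst", "researcher"},
                             "restricted": {"researcher"}})
    catalog.register(CatalogEntry("/projects/sim42/results", "core", "restricted"))
    print(catalog.read("/projects/sim42/results", "alice", "researcher"))
    catalog.move("/projects/sim42/results", "cloud")
    print(catalog.read("/projects/sim42/results", "alice", "researcher"))
    try:
        catalog.read("/projects/sim42/results", "bob", "analyst")
    except PermissionError as err:
        print(err)
```

In this sketch the policy decision depends only on the dataset's classification and the caller's role, so moving the data from "core" to "cloud" changes the returned location but not who may read it. A real deployment would sit behind authentication and a persistent metadata store, which this toy deliberately omits.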

Collect, protect, leverage, and free your data-in-motion archives across security-minded applications and widely distributed environments using Fusion Computing Model infrastructure.

What to Consider when Selecting Storage for the HPA Reference Architecture

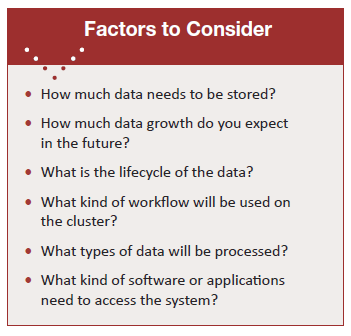

Organizations need to consider the following factors when determining the HPA storage infrastructure a system requires to meet future needs. Weighing these factors can help put agility back into the data center.

Storage Technologies

HPC storage requires a variety of data classes and technologies that balance performance, availability, and cost. HDDs will always be required for certain data classes and play a key role in the Fusion Computing Model. Tape storage does not support active archive principles or the ability to keep data moving rather than leaving it in a static container (e.g., a data lake). Dynamic Random-Access Memory (DRAM) semiconductor memory is commonly used in computers and graphics cards, but it may be too expensive to use in HPC at large or extreme scale and is best used as a specialized resource. Eliminating unneeded technologies and leveraging commodity resources where possible helps drive us toward democratized HPC.

Seagate Storage Portfolio

Seagate offers a wide variety of storage solutions. According to Benjamin King, Sales Manager at Seagate, “Our capacity-optimized storage systems allow you to simplify your footprint and move to a vendor agnostic based model to scale your software defined solution. These types of solutions help eliminate silos of data and enable you to drive policy-based decisions across classes of data, using the right resources for the right jobs.”

“We need to get away from the misperception that Hard Disk Drives (HDDs) equal static data, which is tape, not HDD, technology. SSD, HDD and tape all have their place in today’s storage infrastructure balancing performance, availability, and cost. HDDs stand out as the choice for online, randomly accessible mass capacity that is economically viable within an ever-evolving system itself. Seagate’s enterprise data systems have the option for compute on board, simplifying your footprint and decreasing the time to access your data.” – Benjamin King, Seagate, Sales Manager

Seagate provides hybrid storage that contains both HDDs and SAS Solid State Drives (SSDs). King indicates, “Being able to provide a hybrid solution inside a Seagate storage enclosure enables a broader range of resources and data classes within a single enclosure. Seagate also offers the only TAA/FIPS Enterprise HDD and SSD Solution on the market as well as a broad portfolio of security features and options to secure your data.”

The Role of Storage in Fusion Computing

Seagate Enterprise Data Systems are suited to various HPC, AI, and Big Data functions. Typical storage stages in HPC and AI workflows are listed below.

HPC storage includes:

- Project: storage of simulation results (“/home”)

- Scratch: storage of checkpointed simulation results (“/scratch”)

- Archive: long-term storage (“/archive”)

AI, analytics, and Big Data storage includes:

- Ingest: loading of training data

- Data Labeling: data classification and tagging

- Training: developing the AI/ML model

- Inference: running the AI model

- Archive: long-term storage

Seagate Storage and Intel Compute Enable Fusion Computing

Seagate used the Fusion Computing Model to create a more effective compute and storage infrastructure, including an improved storage subsystem. Compute is offloaded to the storage subsystems so that Intel computing technology can become a category of service rather than a tier.

The Seagate Exos AP 4U100 storage system helps keep data in motion and usable as part of the Fusion Computing Model. What makes the Exos AP 4U100 unique is that it is not just a storage device: it integrates state-of-the-art Seagate storage and Intel compute capability in a single solution. The system is also built on modern nearline disk, whereas many legacy subsystems rely on tape for storage.

“The Seagate Exos AP 4U100 application platform combines Intel® Xeon® servers with high-density Seagate storage to provide a flexible building block for software-defined storage used in high performance computing. Applications include storage of large-scale input data, as well as storage of simulation result data.” – Wendell Wenjen, Seagate Product Development Manager

According to King, “From a hardware perspective, the features of the Exos 4U100 enable building blocks for max capacity, with quick access to your other technology resources within your environment.” Driving software-agnostic reference architectures and decoupling incumbent software stacks from vendor-locked hardware appliances will enable the collapse of the data center as we know it, making way for the next generation of high-performance architectures.

Features of the Seagate Exos AP 4U100 Enterprise Storage

The Exos AP 4U100 future-proofs modular data center systems for even greater density with next-generation Seagate media. Upgrading a system is as simple as hot-swapping drives, because the enclosure shares its design and multiple field-replaceable units (FRUs) with a serviceable ecosystem. It also offers the option of four onboard SAS SSDs for a performance class.

Safeguard data with dual Intel® Xeon® Scalable CPUs in two controllers housed within the Exos AP 4U100 enclosure. This design provides robust redundancy and multi-node capability. Built-in technology minimizes the drive performance degradation that can result from the number of drives and cooling elements. This Seagate storage solution drives performance for both the current generation of Seagate media and future technologies, which is a goal of the Fusion Computing Model.

WWT’s Advanced Technology Center (ATC)

WWT understands the environment and infrastructure that data center customers need for HPC processing, including hardware, software, and analytics. WWT has four data centers at the Advanced Technology Center (ATC) housing the High Performance Architecture Framework and Laboratory (Lab), which tests Proof-of-Concept (POC) infrastructure, storage, and automation solutions. The ATC Lab includes integrated compute, storage, and network platforms for multiple POCs. ATC staff offer organizations a way to conduct functionality and first-of-a-kind (FOAK) tests of computing, storage, and network solutions from top OEMs and partners, all integrated into a controlled, automated working environment. Labs include server nodes with isolated compute and memory to provide sufficient I/O for massive stress testing of the latest flash storage platforms. Labs also include blades, rack-optimized servers, and multi-socket servers with Intel processors ranging from Sandy Bridge through Cascade Lake to Ice Lake.

The WWT ATC Lab is designed to investigate customer storage challenges and to evaluate architectures for performance, integration, storage costs, and facilities costs.

Conclusion

There is a convergence of data processing with HPC, AI, ML, DL, IoT, IIoT, and Big Data workflow needs. Technical advances in processors, GPUs, fast storage, and memory now allow organizations to analyze, process and store massive amounts of data.

A new infrastructure paradigm called the Fusion Computing Model was developed by Earl J. Dodd of WWT. This model addresses the need to process, analyze, and store data so that it is no longer static. At the heart of this model is the concept of a data fountain that is constantly flowing. The Fusion Computing Model contains an HPA reference architecture to help customers determine the proper infrastructure solution. This paper describes the Fusion Computing Model and explores how Seagate and Intel use the model’s elements in storage and compute solutions. WWT has an Advanced Technology Center (ATC) with a High Performance Architecture Lab to test Proof-of-Concept (POC) infrastructure solutions.

“Just as NASA has embarked on these 60+ years of human exploration, technology, and science, the HPC industry, along with Enterprise-class computing, is nurturing new technologies combined with best practices to meet the challenges of advanced (Exascale and beyond) computing. Come and take an ATC test flight of the HPA to push the boundaries of your computing paradigm.” – Earl J. Dodd, WWT, Global HPC Business Practice Leader

Over the past few weeks we explored these topics:

- Executive Summary, Customer Challenges, Fusion Computing drives the High Performance Architecture, The Fusion Computing Model: Harnessing the Data Fountain

- Fusion Computing Reference Architecture, HPC and AI Memory Considerations, Importance of HPC Chips and Accelerators, HPC Storage is Critical to Fusion Computing

- Archiving Data Case Study, What to Consider when Selecting Storage for the HPA Reference Architecture, The Role of Storage in Fusion Computing, Seagate Storage and Intel Compute Enable Fusion Computing, WWT’s Advanced Technology Center (ATC), Conclusion

Download the complete insideHPC Guide to HPC Fusion Computing Model – A Reference Architecture for Liberating Data courtesy of Seagate.