Sponsored Post

This technology guide, “insideHPC Guide to Composable Disaggregated Infrastructure (CDI) Clusters,” will show how the Silicon Mechanics Miranda CDI Cluster™ reference architecture can be a CDI solution blueprint, ideal for tailoring to specific enterprise or other organizational needs and technical issues.

The guide is written for technical readers, especially system administrators in financial services, life sciences, or similarly compute-intensive fields. Such a reader may be tasked with making CDI deliver a realizable ROI, or simply with finding a way to extend the value of an existing IT investment while still meeting computing needs.

Key Ingredients of a High-performance CDI Cluster

To fully understand the technology’s impact, it’s important to identify the key ingredients of a high-performance CDI cluster for diverse workloads. There are several options for building a high-performance infrastructure. Such a solution can serve an organization in many ways, especially one with multiple divisions or teams that share the same central resource but use it differently:

- For example, a university may have several principal investigators or research groups sharing the same cluster, each doing different kinds of work that require different configurations.

- Another example is a business whose product development divisions use different types of simulation software and other technologies. Both are examples of multi-user environments.

The Role of Networking with CDI

High-performance networking, such as InfiniBand from NVIDIA Networking, is critical to the way CDI operates. It’s possible to disaggregate just about everything: compute (Intel, AMD, FPGAs), data storage (NVMe, SSD, Intel Optane, etc.), GPU accelerators (NVIDIA GPUs), and more. These components become numbers on a chalkboard that you can rearrange however you see fit, but the networking underneath all those pipes stays the same. Think of networking as a fixed resource with a fixed effect on performance, as opposed to the other resources, which are disaggregated.

It is important to plan an optimal network strategy for a CDI deployment. If peak system performance is needed, InfiniBand can handle it; conversely, Ethernet is available to scale down for less demanding needs. In this sense, networking is the backbone of a CDI system, ensuring that it can keep up with all the different levels of service required. And if you expand over time, that underlying network supports whatever comes up over the lifecycle of the system.

Use Cases for CDI Clusters

There are several use cases for composable infrastructure:

- AI and machine learning

- HPC

- Multi-tenant environments or environments with a shared resource pool

- Mixed workloads

- Greenfield projects, or net new deployments, where you can design in composable infrastructure from the ground up, or brownfield environments where you want to add a resource pool like GPUs to existing infrastructure without replacing or redesigning the existing cluster or deployment.

When building a typical GPU cluster for one of the above use cases, you need to pick a specific chassis that supports several GPUs. It may support features like Remote Direct Memory Access (RDMA)/GPUDirect or GPUDirect Storage; if it doesn’t, you have to compromise.

Composable infrastructure gets around these compromises by disaggregating your hardware: you pick the CPUs you want and the number of GPUs you want, and you get features like RDMA/GPUDirect and GPUDirect Storage by leveraging PCI Express® (PCIe) host bus adapters (HBAs) connected to switches, which are in turn connected to the various enclosures providing the needed I/O. The result is a purpose-built machine for specific workloads, without investing in hardware that delivers only a fraction of the desired output.

CDI’s Scalability and Its Impact on Performance and Latency for ROI

Composable infrastructure allows disaggregated resource pools to grow as needed: you can independently add more GPUs, accelerators, or storage, expanding only the resource classes that are needed. A CDI command center GUI enables agile assignment of resources to dynamically provision bare metal instances and schedule jobs via Slurm Workload Manager integration. Bare metal instance performance is consistent with that of traditional bare metal servers, and latency is the same as for any PCIe-connected component. CDI uses a high-bandwidth, low-latency PCIe fabric to maximize both performance and ROI.
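To make the pooling idea concrete, here is a minimal Python sketch of composing bare metal instances from shared, independently expandable resource pools. The `ResourcePool` class, its methods, and the device names and counts are hypothetical illustrations; this does not model the Miranda command center, its Slurm integration, or any real CDI product's API.

```python
from dataclasses import dataclass, field


# Hypothetical model of disaggregated resource pools behind a PCIe fabric.
# Device kinds and counts are illustrative; this is not a real CDI API.
@dataclass
class ResourcePool:
    free: dict[str, int] = field(default_factory=dict)

    def add(self, kind: str, count: int) -> None:
        """Grow one resource class independently, e.g. add GPUs only."""
        self.free[kind] = self.free.get(kind, 0) + count

    def compose(self, request: dict[str, int]) -> dict[str, int]:
        """Reserve devices for one bare metal instance, all-or-nothing."""
        short = {k: n for k, n in request.items() if self.free.get(k, 0) < n}
        if short:
            # Nothing is reserved if any requested resource class is insufficient.
            raise RuntimeError(f"insufficient resources: {short}")
        for kind, n in request.items():
            self.free[kind] -= n
        return dict(request)

    def release(self, instance: dict[str, int]) -> None:
        """Return an instance's devices to the shared pool for reuse."""
        for kind, n in instance.items():
            self.add(kind, n)


pool = ResourcePool()
pool.add("gpu", 8)
pool.add("nvme", 4)
pool.add("fpga", 2)

training = pool.compose({"gpu": 6, "nvme": 2})     # GPU-heavy AI instance
simulation = pool.compose({"fpga": 2, "nvme": 2})  # FPGA-based instance
print(pool.free)  # {'gpu': 2, 'nvme': 0, 'fpga': 0}

pool.release(training)  # devices return to the pool when the job ends
pool.add("gpu", 4)      # expand only the GPU class
print(pool.free)  # {'gpu': 12, 'nvme': 2, 'fpga': 0}
```

The all-or-nothing `compose` reflects the point that an instance is only useful once every resource class it needs is attached; a real system would also track which physical devices and fabric paths are assigned, which this sketch omits.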

How can all this be managed? Can it be scripted? Can it be automated? You can run whatever is needed on top of composable infrastructure, but the real question is how to get the best ROI out of it.

ROI can be measured by system utilization and by the total number of jobs processed: because machines are configured specifically for each task, less hardware goes to waste.
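The utilization side of that measurement can be illustrated with a small, simplified Python comparison. The numbers are hypothetical (16 GPUs, a made-up list of per-job GPU demands), and the fixed model assumes each job is given a whole 4-GPU server, a deliberate simplification; this is a snapshot, not a benchmark, and it ignores time, CPU, and fabric limits.

```python
# Hypothetical snapshot: 16 GPUs, either fixed in four 4-GPU servers
# or pooled behind a composable fabric. Demands are GPUs per job.
demands = [1, 2, 1, 8, 2, 1, 1]


def schedule_fixed(demands: list[int], nodes: int, gpus_per_node: int) -> list[int]:
    """Each job takes a whole server; jobs larger than one server cannot run."""
    running, free_nodes = [], nodes
    for d in demands:
        if d <= gpus_per_node and free_nodes > 0:
            running.append(d)
            free_nodes -= 1
    return running


def schedule_composed(demands: list[int], total_gpus: int) -> list[int]:
    """Each job draws exactly the GPUs it needs from the shared pool."""
    running, free = [], total_gpus
    for d in demands:
        if d <= free:
            running.append(d)
            free -= d
    return running


def utilization(running: list[int], total_gpus: int) -> float:
    """Fraction of installed GPUs assigned to running jobs."""
    return sum(running) / total_gpus


fixed = schedule_fixed(demands, nodes=4, gpus_per_node=4)
composed = schedule_composed(demands, total_gpus=16)
print(f"fixed:    {len(fixed)} jobs, {utilization(fixed, 16):.1%} GPU utilization")
print(f"composed: {len(composed)} jobs, {utilization(composed, 16):.1%} GPU utilization")
```

With these inputs the fixed layout runs 4 jobs at 37.5% GPU utilization (the 8-GPU job fits no single server, and single-GPU jobs strand three GPUs each), while the pooled layout runs all 7 jobs at 100.0%.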

Can composable infrastructure also improve the performance of existing clusters or brownfield infrastructure (i.e., when an organization purchases or leases an existing facility)?

Since composable infrastructure is built on a PCIe fabric, host bus adapters (HBAs) can be added to existing machines to expand their capabilities, for example by attaching NVMe storage to a machine that has none, thereby increasing storage throughput.

Conclusion

We’re experiencing an important inflection point in computing technology today. Digital transformation in business is reaching unprecedented levels. Along with accelerated growth in data collection and the dynamic nature of applications, this means IT management will need to adopt new, creative approaches to data center administration and rapid resource deployment to keep pace with the constantly evolving applications that drive business decisions and competitiveness.

Many enterprises are turning to demanding workflows such as HPC, AI, and edge computing that require massive levels of resources. IT must therefore find agile solutions to effectively manage the on-premises data center while delivering the flexibility typically associated with the cloud. CDI is quickly emerging as a compelling option for deploying applications that incorporate advanced technologies such as machine learning, deep learning, and HPC.

The composable disaggregated infrastructure industry is still relatively young and as dynamic as the composable platforms themselves. But composable architecture has made great strides in the last few years and is likely to continue to do so. Composability won’t necessarily benefit all workloads or environments, but it could go a long way toward accommodating applications, especially those that incorporate AI and other advanced technologies.

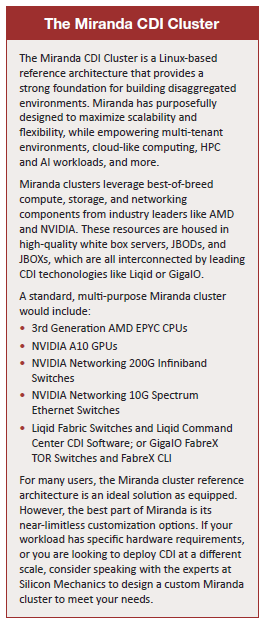

Silicon Mechanics is an engineering firm providing custom solutions in HPC/AI, storage, and networking. It delivers best-in-class solutions based on open standards and the latest technologies, aiming to achieve maximum value for clients through a consultative sales approach: working to understand each customer’s use case and present a best-fit solution. Through its own engineering prowess, coupled with several strategic partnerships, Silicon Mechanics has defined itself as a clear leader in the CDI space, as illustrated by the Silicon Mechanics Miranda CDI Cluster.

Over the past few weeks, we’ve explored these topics:

- Introduction, Benefits of CDI Clusters to the Enterprise

- Technology Use Case Examples, CDI and the Enterprise Infrastructure of the Future

- Key Ingredients of a High-performance CDI Cluster, Conclusion

Download the complete insideHPC Guide to Composable Disaggregated Infrastructure (CDI) Clusters courtesy of Silicon Mechanics.