San Francisco — March 14, 2023 — Whatever your initial impressions were of ChatGPT, the capabilities of the generative AI application from OpenAI appear poised to move significantly forward.

The company has announced a follow-up to GPT-3.5: GPT-4, which it describes as “…a large multimodal model (accepting image and text inputs, emitting text outputs) that, while less capable than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.”

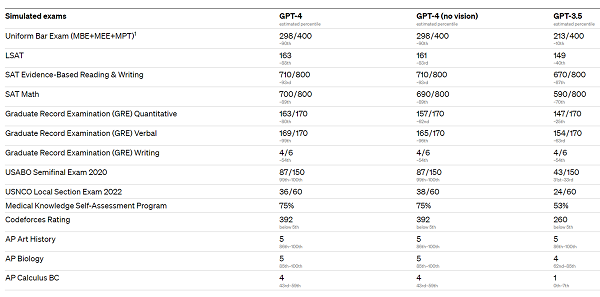

OpenAI said the new version passed a simulated bar exam with a score around the top 10 percent of test takers, whereas GPT-3.5’s score was around the bottom 10 percent. “We’ve spent six months iteratively aligning GPT-4 using lessons from our adversarial testing program as well as ChatGPT, resulting in our best-ever results (though far from perfect) on factuality, steerability, and refusing to go outside of guardrails,” the company said.

After rebuilding its deep learning stack and, together with Azure, co-designing a supercomputer from the ground up for its workload, the company said it trained GPT-3.5 a year ago as a test run of the system. “We found and fixed some bugs and improved our theoretical foundations. As a result, our GPT-4 training run was (for us at least!) unprecedentedly stable, becoming our first large model whose training performance we were able to accurately predict ahead of time. As we continue to focus on reliable scaling, we aim to hone our methodology to help us predict and prepare for future capabilities increasingly far in advance—something we view as critical for safety.”

The company said it is releasing GPT-4’s text input capability via ChatGPT and the API (with a waitlist). To prepare the image input capability for wider availability, the company said it is collaborating closely with a single partner, Be My Eyes, to start. It is also open-sourcing OpenAI Evals, its framework for automated evaluation of AI model performance, so that users can report shortcomings in its models and help guide further improvements.

OpenAI said the distinctions between GPT-3.5 and GPT-4 are subtle in casual conversation. The difference comes out when the complexity of the task reaches a sufficient threshold: GPT-4 is more reliable, creative, and able to handle more nuanced instructions than GPT-3.5, according to OpenAI.

OpenAI developers tested the two models on various benchmarks, including simulating exams originally designed for humans. “We proceeded by using the most recent publicly-available tests (in the case of the Olympiads and AP free response questions) or by purchasing 2022–2023 editions of practice exams. We did no specific training for these exams. A minority of the problems in the exams were seen by the model during training, but we believe the results to be representative….”

GPT-4 can accept a prompt of text and images, which—parallel to the text-only setting—lets the user specify any vision or language task, according to OpenAI. Specifically, it generates text outputs (natural language, code, etc.) given inputs consisting of interspersed text and images. Over a range of domains—including documents with text and photographs, diagrams, or screenshots—GPT-4 exhibits similar capabilities as it does on text-only inputs. Furthermore, it can be augmented with test-time techniques that were developed for text-only language models, including few-shot and chain-of-thought prompting. Image inputs are still a research preview and not publicly available, the company said.
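For readers unfamiliar with the two prompting techniques named above, the following is a minimal sketch of how a few-shot prompt with a chain-of-thought cue might be assembled as plain text. It is illustrative only and does not call any OpenAI API; the function name `build_few_shot_prompt`, the `Q:`/`A:` layout, and the "Let's think step by step" cue are assumptions chosen for this example, not details taken from OpenAI's announcement.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    examples: List[Tuple[str, str]],
    question: str,
    chain_of_thought: bool = False,
) -> str:
    """Assemble a plain-text prompt from worked examples plus a new question.

    Hypothetical helper for illustration; the Q:/A: format is an assumption.
    """
    parts = []
    # Few-shot: show the model solved examples before the real question.
    for example_question, example_answer in examples:
        parts.append(f"Q: {example_question}\nA: {example_answer}")
    # Chain-of-thought: end with a cue that invites step-by-step reasoning.
    cue = "A: Let's think step by step." if chain_of_thought else "A:"
    parts.append(f"Q: {question}\n{cue}")
    return "\n\n".join(parts)


examples = [
    ("What is 2 + 3?", "5"),
    ("What is 7 - 4?", "3"),
]
prompt = build_few_shot_prompt(examples, "What is 6 + 9?", chain_of_thought=True)
print(prompt)
```

The resulting string would then be sent to a model as the text portion of a prompt; how image inputs are interleaved with it is not described in the announcement and is left out here.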