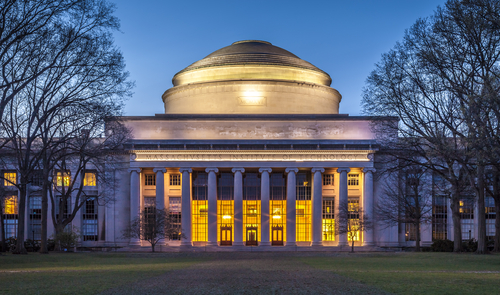

Massachusetts Institute of Technology

CAMBRIDGE, Mass., May 10, 2023 — In an effort to improve fairness or reduce backlogs, machine learning models are sometimes designed to mimic human decision making, such as deciding whether social media posts violate toxic content policies. But researchers from MIT and elsewhere have found that these models often do not replicate human decisions about rule violations. If models are not trained with the right data, they are likely to make different, often harsher, judgements than humans would.

This research was funded, in part, by the Schwartz Reisman Institute for Technology and Society, Microsoft Research, the Vector Institute, and a Canada Research Council Chair.