The perennial question for IT asset acquisition is whether to build or consume, and there is no ‘one size fits all’ answer. Individual components provide maximum flexibility but also require the most self-support. Reference architectures provide the recipe and guidance, but the customer remains responsible for making the system work.

Network Switch Configuration with Dell EMC Ready Nodes

Storage networks are constantly evolving. From traditional Fibre Channel to IP-based storage networks, each technology has its place in the data center. For IP-based storage, there are two main network topologies to choose from, depending on the technology and administration requirements: a dedicated storage network and a shared leaf-spine network. Both software-defined storage and iSCSI SAN deployments are supported. Hybrid network architectures are common, but they add complexity.
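To make the shared leaf-spine option more concrete, here is a minimal Python sketch, assuming a generic two-tier design in which every leaf switch has exactly one uplink to every spine switch. The switch names and helper functions are hypothetical illustrations, not Dell EMC configuration; the sketch only shows why any two leaves sit two hops apart and why each added spine adds another equal-cost path between leaves.

```python
from collections import deque
from itertools import product


def build_leaf_spine(num_leaves: int, num_spines: int) -> dict[str, set[str]]:
    """Build an adjacency map for a hypothetical two-tier leaf-spine fabric.

    Every leaf gets one link to every spine; leaves never connect to other
    leaves, and spines never connect to other spines.
    """
    leaves = [f"leaf{i}" for i in range(num_leaves)]
    spines = [f"spine{j}" for j in range(num_spines)]
    adj: dict[str, set[str]] = {name: set() for name in leaves + spines}
    for leaf, spine in product(leaves, spines):
        adj[leaf].add(spine)
        adj[spine].add(leaf)
    return adj


def hop_count(adj: dict[str, set[str]], src: str, dst: str) -> int:
    """Return the shortest number of switch-to-switch hops, found by BFS."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in adj[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    raise ValueError(f"{dst} is unreachable from {src}")


def equal_cost_paths(adj: dict[str, set[str]], leaf_a: str, leaf_b: str) -> int:
    """Count the leaf -> spine -> leaf paths between two leaves (shared spines)."""
    return len(adj[leaf_a] & adj[leaf_b])


if __name__ == "__main__":
    fabric = build_leaf_spine(num_leaves=4, num_spines=2)
    links = sum(len(peers) for peers in fabric.values()) // 2
    print(links)                                       # 8 leaf-to-spine links
    print(hop_count(fabric, "leaf0", "leaf3"))         # 2 hops leaf to leaf
    print(equal_cost_paths(fabric, "leaf0", "leaf3"))  # 2, one per spine
```

The link count grows as leaves × spines, which is the usual trade-off of this design: more cabling in exchange for uniform, predictable latency between any two racks.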

Networking Dell EMC Microsoft Storage Spaces Direct Ready Nodes

Dell EMC Microsoft Storage Spaces Direct Ready Nodes are built on Dell EMC PowerEdge servers, which provide the storage density and compute power to maximize the benefits of Storage Spaces Direct (S2D). “They leverage the advanced feature sets in Windows Server 2016 Datacenter Edition to deploy a scalable hyper-converged infrastructure solution with Hyper-V and Storage Spaces Direct. And for Microsoft server environments, S2D scales to 16 nodes in a cluster, and is a kernel-loadable module (with no RDMA iWARP or RoCE needed), which is a low risk approach to implementing an S2D cluster.”

Exploring Dell EMC Networking for vSAN

This is the second entry in an insideHPC guide series that explores networking with Dell EMC Ready Nodes. Read on to learn more about Dell EMC networking for vSAN.

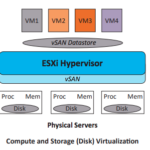

How Do I Network vSAN Ready Nodes?

Dell EMC vSAN Ready Nodes are built on Dell EMC PowerEdge servers that have been pre-configured, tested and certified to run VMware vSAN. This post outlines how to network vSAN Ready Nodes. “As an integrated feature of the ESXi kernel, vSAN exploits the same clustering capabilities to deliver a comparable virtualization paradigm to storage that has been applied to server CPU and memory for many years.”

Speeding Workloads at the Dell EMC HPC Innovation Lab

The Dell EMC HPC Innovation Lab, substantially powered by Intel, was established to provide customers with best practices for configuring and tuning systems and their applications for optimal performance and efficiency, shared through blogs, whitepapers and other resources. “Dell is utilizing the lab’s world-class infrastructure to characterize performance behavior and to test and validate upcoming technologies.”

Selecting HPC Network Technology

“With three primary network technology options widely available, each with advantages and disadvantages in specific workload scenarios, the choice of solution partner that can deliver the full range of choices together with the expertise and support to match technology solution to business requirement becomes paramount.”

HPC Networking Trends in the TOP500

The TOP500 list is a good proxy for how different interconnect technologies are being adopted for the most demanding workloads, which makes it a useful leading indicator for enterprise adoption. The essential takeaway is that the world’s leading and most esoteric systems are currently dominated by vendor-specific technologies. The OpenFabrics Alliance (OFA) will become increasingly important in the coming years as a forum that brings together the leading high performance interconnect vendors and technologies to deliver a unified, cross-platform, transport-independent software stack.

High Performance System Interconnect Technology

Today, high performance interconnects fall into three categories: Ethernet, InfiniBand, and vendor-specific interconnects. Ethernet is established as the dominant low-level interconnect standard for mainstream commercial computing. InfiniBand originated in 1999 specifically to address workload requirements that Ethernet did not adequately meet, while vendor-specific technologies frequently have a time-to-market (and therefore performance) advantage over standardized offerings.

Special Report on Top Trends in HPC Networking

A survey conducted by insideHPC and Gabriel Consulting in Q4 2015 indicated that nearly 45% of HPC and large enterprise customers would spend more on system interconnects and I/O in 2016, with 40% maintaining spending at the prior year’s level. In manufacturing, the largest subset at approximately one third of respondents, over 60% planned to spend more and almost 30% planned to hold spending steady going into 2016, underscoring the critical value of high performance interconnects.
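Treating the rounded survey figures as approximate, the remaining share of respondents (those who planned to cut spending or gave some other answer) works out roughly as follows. Because the source reports “nearly” and “over”, these remainders are rough estimates only, not reported results.

```latex
\begin{aligned}
\text{All respondents:} \quad & 100\% - 45\% - 40\% \approx 15\% \\
\text{Manufacturing:} \quad & 100\% - 60\% - 30\% \approx 10\%
\end{aligned}
```

In both groups, then, only a small minority (on the order of one in seven or one in ten) did not plan to increase or maintain interconnect and I/O spending.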