Quantinuum and Microsoft say they have achieved a breakthrough in fault-tolerant quantum computing “by demonstrating the most reliable logical qubits with active syndrome extraction, an achievement previously believed to be years away from realization.”

Classiq and Quantum Intelligence Partner on Drug Development

SEOUL, SOUTH KOREA and TEL AVIV, ISRAEL | April 04, 2024 — Quantum computing software company Classiq and Quantum Intelligence Corp. today announced the launch of joint research for drug development by applying quantum computing to pharmacology. The collaboration is under the auspices of Classiq’s Quantum Computing For Life Sciences & Healthcare Center, launched with NVIDIA […]

RAVEL Announces Orchestrate GenAI Software and Support for AMD GPU Accelerators

Seattle – April 4, 2024 – RAVEL Inc (formerly StratusCore) today announced the release of RAVEL Orchestrate GenAI, built to provide IT and DevOps administrators with a single-screen interface for managing the assembly and deployment of complex content creation and generative AI environments across teams and pipelines, whether local or remote. RAVEL is also working […]

SiMa.ai Raises $70M for Generative Edge AI Platform

SAN JOSE, Calif. – SiMa.ai, the software-centric, embedded edge machine learning system-on-chip company, today announced it has raised an additional $70M of funding led by Maverick Capital, with participation from Point72 and Jericho, as well as existing investors Amplify Partners, Dell Technologies Capital, Lip-Bu Tan and others. SiMa.ai will utilize the $270M raised to date to […]

SK hynix to Invest $3.9B in Indiana HBM Fab and R&D with Purdue

Memory chip company SK hynix announced it will invest $3.87 billion in West Lafayette, Indiana to build an advanced packaging fabrication and R&D facility for AI products. The project, which the company said is the first of its kind in the U.S., will be an advanced….

Hailo Closes $120M Funding Round and Debuts Hailo-10 Chip for Edge AI

TEL AVIV, Israel, April 2, 2024 — Edge AI chip maker Hailo announced it has extended its series C fundraising round with an additional investment of $120 million and also announced the introduction of its Hailo-10 high-performance generative AI (GenAI) accelerators that usher in an era where users can own and run GenAI applications locally […]

HPC News Bytes 20240401: A $100B AI Data Center, Eviden Says It’s Healthy, Alibaba’s RISC-V Chip, New Optical Interconnect Group, Nvidia Fights CUDA Translation

Happy April Fool’s Day! As always, it was an interesting week in the world of HPC-AI. This edition of HPC News Bytes includes commentary on: Microsoft and….

Lightning AI Announces Availability of Compiler for PyTorch

New York – March 28, 2024 – Lightning AI today announced the availability of Thunder, a source-to-source compiler for PyTorch designed for training and serving the latest generative AI models across multiple GPUs. Thunder is the culmination of two years of research on the next generation of deep learning compilers, built with support from NVIDIA. […]

Zapata AI and Andretti Acquisition Corp. Announce Closing of Business Combination

BOSTON & INDIANAPOLIS, March 28, 2024 – Industrial generative AI company Zapata Computing announced today that it has completed its business combination with Andretti Acquisition Corp. (NYSE: WNNR), a special purpose acquisition company. The combined company, which was renamed Zapata Computing Holdings Inc., will operate as Zapata AI and its common stock and warrants will […]

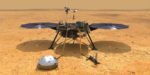

Exascale Software and NASA’s ‘Nerve-Racking 7 Minutes’ with Mars Landers

NASA supercomputers have helped several Mars landers survive the nerve-racking seven minutes of terror. During this hair-raising interval, a lander enters the Martian atmosphere and needs to automatically execute many crucial actions in precise order….