Aug. 31, 2023 — Today, the U.S. Department of Energy (DOE) announced $29 million in funding for seven team awards for research in machine learning, artificial intelligence and data resources for fusion energy sciences. The funding is for projects lasting up to three years, with $11 million in Fiscal Year 2023 dollars and outyear funding contingent on […]

DOE Announces $29M for Research on ML, AI and Data Resources for Fusion Energy Sciences

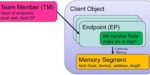

Exascale: Pagoda Updates Programming with Scalable Data Structures and Aggressively Asynchronous Communication

The Pagoda Project researches and develops software that programmers use to implement high-performance applications using the Partitioned Global Address Space model. The project is primarily funded by the Exascale Computing Project and interacts with partner projects….

Sandia: Testing New HPC Technology in Orbit

A Sandia National Laboratories team is working to create an iterative process that uses the International Space Station (ISS) as a proving ground to rapidly test and mature technology in space. In collaboration with the National Nuclear Security Administration, NASA and commercial space company NanoRacks….

Quantum Startup Oxford Ionics Appoints Former Arm CTO/EVP

Oxford, 30 August 2023: Quantum startup Oxford Ionics has appointed former Arm CTO and EVP Dipesh Patel as a non-executive director. Patel was with Arm before becoming an operating partner at Cambridge Innovation Capital. The announcement follows £2 million in funding from the UK’s National Security Strategic Investment Fund (NSSIF) which Oxford Ionics will use […]

DOE Announces $24M for Quantum Networks Research

Aug. 30, 2023 — The U.S. Department of Energy has awarded $24 million for quantum networks research projects. The projects were selected by competitive peer review under the DOE National Laboratory Announcement, Scientific Enablers of Scalable Quantum Communications. The list of projects and more information can be found on the Advanced Scientific Computing Research program homepage. Projects include: […]

Atom Computing Adds Former Sec. of the Navy to Board

August 30, 2023 — Berkeley, CA – Atom Computing has appointed Ken Braithwaite, former Secretary of the Navy, to its board of directors. He served as Secretary of the Navy from May 29, 2020, to January 20, 2021, in the Donald Trump administration. Atom Computing also announced that Greg Muhlner has joined the company as […]

atNorth HPC Nordic Colo Acquires Gompute

Stockholm, Aug. 29th, 2023 — atNorth, a Nordic colocation, high-performance computing, and artificial intelligence service provider, has today announced the company’s acquisition of Gompute, a leading provider of high performance computing (HPC) and data center services.

Gartner Reports Composable Infrastructure Increases Market Penetration up to 10x

August 24, 2023 – Technology industry analyst firm Gartner has issued four new “Hype Cycle” reports for 2023 in which composable infrastructure is given a “high” benefit rating, according to an announcement from composable infrastructure company GigaIO. While acknowledging barriers to adoption….

VMware and NVIDIA Partner on Generative AI for Enterprises

August 22, 2023 — VMware Inc. (NYSE: VMW) and NVIDIA (NASDAQ: NVDA) today announced the expansion of their partnership with a focus on generative AI. The companies said VMware Private AI Foundation with NVIDIA will enable enterprises to customize models and run generative AI applications, including intelligent chatbots, assistants, search and summarization. The platform will feature generative AI…