Rick Stevens has been named a Fellow of the Association for Computing Machinery (ACM). Stevens is associate laboratory director of the Computing, Environment and Life Sciences directorate at the U.S. Department of Energy’s (DOE) Argonne National Laboratory and a professor of computer science at the University of Chicago. Stevens was honored “for outstanding contributions in […]

Quantum: UChicago Researchers Show Qubits Retaining Information for Hours or Longer — ‘An Eternity’

Touting major potential implications for quantum computing, researchers at the University of Chicago have released a study in which they demonstrated control of “quantum memories of silicon carbide,” the ability to control individual quantum bits — qubits — that retain information for hours or possibly days, instead of fractions of a second. In a paper […]

Veteran Argonne System Helps Find Method to Convert CO2 into Ethanol

By supercomputing standards, Argonne National Lab’s Bebop (stood up in 2017, 1.75 petaflops, bumped off the Top500 list after the June 2019 ranking) seems something of a second-tier player. But veteran, former Top500 systems like Bebop can still take a star turn, as shown by the results of a research team from Northern Illinois University […]

Exascale Exasperation: Why DOE Gave Intel a 2nd Chance; Can Nvidia GPUs Ride to Aurora’s Rescue?

The most talked-about topic in HPC these days – i.e., another Intel chip delay and therefore delay of the U.S.’s flagship Aurora exascale supercomputer – is something no one directly involved wants to talk about. Not Argonne National Laboratory, where Intel was to install Aurora in 2021; not the Department of Energy’s Exascale Computing Project, […]

DOE Unveils Blueprint for ‘Unhackable’ Quantum Internet: Central Roles for Argonne, Univ. of Chicago, Fermilab

At a press conference held today at the University of Chicago, the U.S. Department of Energy (DOE) unveiled a strategy for the development of a national quantum internet intended to bring “the United States to the forefront of the global quantum race and usher in a new era of communications.” An outgrowth of the National Quantum […]

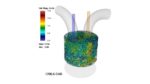

Argonne Claims Largest Engine Flow Simulation Using Theta Supercomputer

Scientists at the U.S. Department of Energy’s (DOE) Argonne National Laboratory have conducted what they claim is the largest simulation of flow inside an internal combustion engine. Insights gained from the simulation – run on 51,328 cores of Argonne’s Theta supercomputer – could help auto manufacturers to design greener engines. A blog post on the […]

Agenda Posted for OpenFabrics Virtual Workshop

The OpenFabrics Alliance (OFA) has opened registration for its OFA Virtual Workshop, taking place June 8-12, 2020. “The OpenFabrics Alliance is committed to accelerating the development of high performance fabrics. This virtual event will provide fabric developers and users an opportunity to discuss emerging fabric technologies, collaborate on future industry requirements, and address challenges.”

Podcast: A Shift to Modern C++ Programming Models

In this Code Together podcast, Alice Chan from Intel and Hal Finkel from Argonne National Lab discuss how the industry is uniting to address the need for programming portability and performance across diverse architectures, particularly important with the rise of data-intensive workloads like artificial intelligence and machine learning. “We discuss the important shift to modern C++ programming models, and how the cross-industry oneAPI initiative, and DPC++, bring much-needed portable performance to today’s developers.”

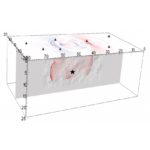

Supercomputing the San Andreas Fault with CyberShake

With help from DOE supercomputers, a USC-led team expands models of the fault system beneath its feet, aiming to predict its outbursts. For their 2020 INCITE work, SCEC scientists and programmers will have access to 500,000 node hours on Argonne’s Theta supercomputer, which delivers as much as 11.69 petaflops. The team is using Theta “mostly for dynamic earthquake ruptures,” Goulet says. “That is using physics-based models to simulate and understand details of the earthquake as it ruptures along a fault, including how the rupture speed and the stress along the fault plane changes.”

Accelerating vaccine research for COVID-19 with HPC and AI

In this special guest feature, Peter Ungaro from HPE writes that HPC is playing a leading role in our fight against COVID-19 to support the urgent need to find a vaccine that will save lives and reduce suffering worldwide. “At HPE, we are committed to advancing the way we live and work. As a world leader in HPC and AI, we recognize the impact we can make by applying modeling, simulation, machine learning and analytics capabilities to data to accelerate insights and discoveries that were never before possible.”