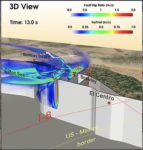

Researchers are using XSEDE supercomputers to model multi-fault earthquakes in the Brawley fault zone, which links the San Andreas and Imperial faults in Southern California. Their work could predict the behavior of earthquakes that could affect the lives and property of millions of people. “Basically, we generate a virtual world where we create different types of earthquakes. That helps us understand how earthquakes in the real world are happening.”

Podcast: Supercomputing Synthetic Biomolecules

Researchers are using HPC to design potentially life-saving proteins. In this TACC podcast, host Jorge Salazar discusses this groundbreaking work with the science team. “The scientists say their methods could be applied to useful technologies such as pharmaceutical targeting, artificial energy harvesting, ‘smart’ sensing and building materials, and more.”

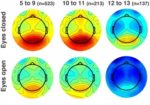

Supercomputing the Complexities of Brain Waves

Scientists are using the Comet supercomputer at SDSC to better understand the complexities of brain waves. With a goal of better understanding human brain development, the Healthy Brain Network (HBN) project is currently collecting brain scans and EEG recordings, as well as other behavioral data, from 10,000 New York City children and young adults – the largest such sample ever collected. “We hope to use portals such as the EEGLAB to process this data so that we can learn more about biological markers of mental health and learning disorders in our youngest patients,” said HBN Director Michael Milham.

SDSC and Sylabs Gather for Singularity User Group

The San Diego Supercomputer Center (SDSC) at UC San Diego and Sylabs.io recently hosted the first-ever Singularity User Group meeting, attracting users and developers from around the nation and beyond who wanted to learn more about the latest developments in an open source project known as Singularity. Now in use on SDSC’s Comet supercomputer, Singularity has quickly become an essential tool in improving the productivity of researchers by simplifying the development and portability challenges of working with complex scientific software.

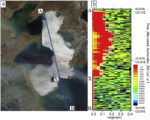

Supercomputing Sea Fog Development to Prevent Maritime Disasters

Over at the XSEDE blog, Kim Bruch from SDSC writes that an international team of researchers is using supercomputers to shed new light on how and why a particular type of sea fog forms. Through simulation, they hope to provide more accurate fog predictions that help reduce the number of maritime mishaps. “The researchers have been using the Comet supercomputer based at the San Diego Supercomputer Center (SDSC) at UC San Diego. To-date, the team has used about 2 million core hours.”

Comet Supercomputer Assists in Latest LIGO Discovery

This week’s landmark discovery of gravitational and light waves generated by the collision of two neutron stars eons ago was made possible by signal verification and analysis performed by Comet, an advanced supercomputer based at SDSC in San Diego. “LIGO researchers have so far consumed more than 2 million hours of computational time on Comet through OSG – including about 630,000 hours each to help verify LIGO’s findings in 2015 and the current neutron star collision – using Comet’s Virtual Clusters for rapid, user-friendly analysis of extreme volumes of data, according to Würthwein.”

Fighting the West Nile Virus with HPC & Analytical Ultracentrifugation

Researchers are using new techniques with HPC to learn more about how the West Nile virus replicates inside the brain. “Over several years, Demeler has developed analysis software for experiments performed with analytical ultracentrifuges. The goal is to facilitate the extraction of all of the information possible from the available data. To do this, we developed very high-resolution analysis methods that require high performance computing to access this information,” he said. “We rely on HPC. It’s absolutely critical.”

Christopher Irving Named Manager of HPC Systems at SDSC

Today the San Diego Supercomputer Center announced that Christopher Irving will be the new manager of the Center’s High-Performance Computing systems, effective June 1, 2017. “Christopher has been involved in the many facets of deploying and supporting both our Gordon and Comet supercomputers, so this appointment is a natural fit for all of us,” said Amit Majumdar, director of SDSC’s Data Enabled Scientific Computing division. “He also has been coordinating closely with our User Services Group in his previous role, so he’ll now officially oversee SDSC’s high level of providing HPC and data resources for our broad user community.”

Comet Supercomputer Doubles Down on Nvidia Tesla P100 GPUs

The San Diego Supercomputer Center has been granted a supplemental award from the National Science Foundation to double the number of GPUs on its petascale-level Comet supercomputer. “This expansion is reflective of a wider adoption of GPUs throughout the scientific community, which is being driven in large part by the availability of community-developed applications that have been ported to and optimized for GPUs,” said SDSC Director Michael Norman, who is also the principal investigator for the Comet program.

Over 10,000 Users and Counting for Comet Supercomputer at SDSC

Today the San Diego Supercomputer Center (SDSC) announced that the Comet supercomputer has easily surpassed its target of serving at least 10,000 researchers across a diverse range of science disciplines, from astrophysics to redrawing the tree of life. “In fact, about 15,000 users have used Comet to run science gateways jobs alone since the system went into production less than two years ago.”