Drawing on the power of a supercomputer from Dell Technologies and AMD, Durham University and DiRAC scientists are expanding our understanding of the universe and its origins. In scientific circles, everyone knows that more computing power can lead to bigger discoveries in less time. This is the case at Durham University in the U.K., where researchers are unlocking insights into our universe with powerful high performance computing clusters from Dell Technologies.

Free Registration: Supercomputing Frontiers Europe Virtual Conference this Week

Today the organizers of Supercomputing Frontiers Europe 2020 announced that they have waived registration fees for their virtual conference this week. “Over twenty outstanding keynote and invited speakers at Supercomputing Frontiers Europe 2020 will deliver talks on topics spanning various fields of science and technology. Participants will be able to join remotely, ask questions, and learn at a three-day long virtual event.”

Video: Exploring the Dark Universe

“Heitmann discusses an ambitious end-to-end simulation project that attempts to provide a faithful view of the Universe as seen through the Large Synoptic Survey Telescope (LSST), a telescope currently under construction. She also describes how complex, large-scale simulations will be used to extract cosmological information from ongoing and future surveys.”

Podcast: Simulating Galaxy Clusters with XSEDE Supercomputers

In this TACC podcast, researchers describe how they are using XSEDE supercomputers to run some of the highest-resolution simulations ever of galaxy clusters. “One really cool thing about simulations is that we know what’s going on everywhere inside the simulated box,” Butsky said. “We can make some synthetic observations and compare them to what we actually see in absorption spectra and then connect the dots and match the spectra that’s observed and try to understand what’s really going on in this simulated box.”

Video: Making Supernovae with Jets

Chelsea Harris from the University of Michigan gave this talk at CSGF 2019. “I am developing a FLASH hydrodynamics module, SparkJoy, to perform these simulations at high order. These projects are part of a DOE INCITE project to explore progenitor effects on CC SNe and of the DOE SciDAC program ‘Towards Exascale Astrophysics of Mergers and Supernovae.’”

Podcast: Will the ExaSky Project be First to Reach Exascale?

In this Let’s Talk Exascale podcast, Katrin Heitmann from Argonne describes how the ExaSky project may be one of the first applications to reach exascale levels of performance. “Our current challenge problem is designed to run across the full machine [on both Aurora and Frontier], and doing so on a new machine is always difficult,” Heitmann said. “We know from experience, having been first users in the past on Roadrunner, Mira, Titan, and Summit; and each of them had unique hurdles when the machine hit the floor.”

SC19 Invited Talk: OpenSpace – Visualizing the Universe

Anders Ynnerman from Linköping University gave this invited talk at SC19. “This talk will present and demonstrate the NASA-funded open source initiative, OpenSpace, which is a tool for space and astronomy research and communication, as well as a platform for technical visualization research. OpenSpace is a scalable software platform that paves the path for the next generation of public outreach in immersive environments such as dome theaters and planetariums.”

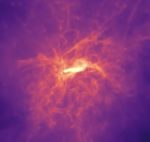

Video: TNG50 cosmic simulation depicts formation of a single massive galaxy

“This cosmic simulation was made possible by the Hazel Hen supercomputer in Stuttgart, where 16,000 cores worked together for more than a year – the longest and most resource-intensive simulation to date. The simulation itself consists of a cube of space measuring more than 230 million light-years in diameter that contains more than 20 billion particles representing dark matter, stars, cosmic gas, magnetic fields, and supermassive black holes (SMBHs).”

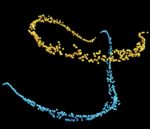

Deep Learning at scale for the construction of galaxy catalogs

A team of scientists is now applying the power of artificial intelligence (AI) and high-performance supercomputers to accelerate efforts to analyze the increasingly massive datasets produced by ongoing and future cosmological surveys. “Deep learning research has rapidly become a booming enterprise across multiple disciplines. Our findings show that the convergence of deep learning and HPC can address big-data challenges of large-scale electromagnetic surveys.”

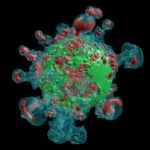

Podcast: ExaStar Project Seeks Answers in Cosmos

In this podcast, Daniel Kasen of LBNL and Bronson Messer of ORNL discuss advancing cosmology through ExaStar, part of the Exascale Computing Project. “We want to figure out how space and time get warped by gravitational waves, how neutrinos and other subatomic particles were produced in these explosions, and how they sort of lead us down to a chain of events that finally produced us.”