Today NVIDIA released CUDA 9.2, which includes updates to libraries, a new library for accelerating custom linear-algebra algorithms, and lower kernel launch latency. “CUDA 9 is the most powerful software platform for GPU-accelerated applications. It has been built for Volta GPUs and includes faster GPU-accelerated libraries, a new programming model for flexible thread management, and improvements to the compiler and developer tools. With CUDA 9 you can speed up your applications while making them more scalable and robust.”

Call for Applications: NCSA GPU Hackathon in September

NCSA is now accepting team applications for the Blue Waters GPU Hackathon. This event will take place September 10-14, 2018 in Illinois. “General-purpose Graphics Processing Units (GPGPUs) potentially offer exceptionally high memory bandwidth and performance for a wide range of applications. A challenge in utilizing such accelerators has been learning how to program them. These hackathons are intended to help overcome this challenge for new GPU programmers and also to help existing GPU programmers to further optimize their applications – a great opportunity for graduate students and postdocs. Any and all GPU programming paradigms are welcome.”

Altair acquires FluiDyna CFD Technology for GPUs

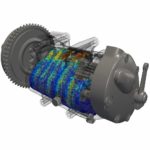

Altair has acquired Germany-based FluiDyna GmbH, a renowned developer of NVIDIA CUDA and GPU-based Computational Fluid Dynamics (CFD) and numerical simulation technologies, in which Altair made an initial investment in 2015. FluiDyna’s simulation software products ultraFluidX and nanoFluidX have been available to Altair’s customers through the Altair Partner Alliance and have also been offered as standalone licenses. “We are excited about FluiDyna and especially their work with NVIDIA technology for CFD applications,” said James Scapa, Founder, Chairman, and CEO at Altair. “We believe the increased throughput and lower cost of GPU solutions is going to allow for a significant increase in simulations which can be used to further impact the design process.”

ArrayFire Releases v3.6 Parallel Libraries

Today ArrayFire announced the release of ArrayFire v3.6, the company’s open source library of parallel computing functions supporting CUDA, OpenCL, and CPU devices. This new version of ArrayFire includes several new features that improve the performance and usability for applications in machine learning, computer vision, signal processing, statistics, finance, and more. “We use ArrayFire to run the low level parallel computing layer of SDL Neural Machine Translation Products,” said William Tambellini, Senior Software Developer at SDL. “ArrayFire flexibility, robustness and dedicated support makes it a powerful tool to support the development of Deep Learning Applications.”

Bright Computing Release 8.1 adds new features for Deep Learning, Kubernetes, and Ceph

Today Bright Computing released version 8.1 of the Bright product portfolio with new capabilities for cluster workload accounting, cloud bursting, OpenStack private clouds, deep learning, AMD accelerators, Kubernetes, Ceph, and a new lightweight daemon for monitoring VMs and non-Bright clustered nodes. “The response to our last major release, 8.0, has been tremendous,” said Martijn de Vries, Chief Technology Officer of Bright Computing. “Version 8.1 adds many new features that our customers have asked for, such as better insight into cluster utilization and performance, cloud bursting, and more flexibility with machine learning package deployment.”

Video: Inside Volta GPUs

Stephen Jones from NVIDIA gave this talk at SC17. “The NVIDIA Volta architecture powers the world’s most advanced data center GPU for AI, HPC, and Graphics. Features like Independent Thread Scheduling and game-changing Tensor Cores enable Volta to simultaneously deliver the fastest and most accessible performance of any comparable processor. Join us for a tour of the features that will make Volta the platform for your next innovation in AI and HPC supercomputing.”

Call for Papers: International Workshop on Accelerators and Hybrid Exascale Systems

The eighth annual International Workshop on Accelerators and Hybrid Exascale Systems (AsHES) has issued its Call for Papers. Held in conjunction with the 32nd IEEE International Parallel and Distributed Processing Symposium, the AsHES Workshop takes place May 23 in Vancouver, Canada. “This workshop focuses on understanding the implications of accelerators and heterogeneous designs on the hardware systems, porting applications, performing compiler optimizations, and developing programming environments for current and emerging systems. It seeks to ground accelerator research through studies of application kernels or whole applications on such systems, as well as tools and libraries that improve the performance and productivity of applications on these systems.”

Allinea to Showcase V7.1 Performance Tools at ISC 2017

Today Allinea Software, now part of ARM, announced plans to preview the latest update to its tool suite for developing and optimizing high performance and scientific applications at ISC17 in Frankfurt. “Eliminating performance loss across systems and minimizing the communication overhead often make a critical difference in improving application run times in HPC. We believe that our extended cross-platform support will enable users to achieve unprecedented results by running their systems more efficiently on each of the major platforms and even across platforms,” said Mark O’Connor, director of product management, server and HPC tools, ARM.

Rock Stars of HPC: DK Panda

As our newest Rock Star of HPC, DK Panda sat down with us to discuss his passion for teaching High Performance Computing. “During the last several years, HPC systems have been going through rapid changes to incorporate accelerators. The main software challenges for such systems have been to provide efficient support for programming models with high performance and high productivity. For NVIDIA-GPU based systems, seven years back, my team introduced a novel ‘CUDA-aware MPI’ concept. This paradigm allows complete freedom to application developers for not using CUDA calls to perform data movement.”

Rock Stars of HPC: John Stone

This Rock Stars of HPC series is about the men and women who are changing the way the HPC community develops, deploys, and operates supercomputers, and about the social and economic impact of their discoveries. “As the lead developer of the VMD molecular visualization and analysis tool, John Stone’s code is used by more than 100,000 researchers around the world. He’s also a CUDA Fellow, helping to bring HPC to the masses with accelerated computing. In this way and many others, John Stone is certainly one of the Rock Stars of HPC.”