A team of scientists is now applying the power of artificial intelligence (AI) and high-performance supercomputers to accelerate efforts to analyze the increasingly massive datasets produced by ongoing and future cosmological surveys. “Deep learning research has rapidly become a booming enterprise across multiple disciplines. Our findings show that the convergence of deep learning and HPC can address big-data challenges of large-scale electromagnetic surveys.”

Supercomputing Dark Energy Survey Data through 2021

Scientists’ effort to map a portion of the sky in unprecedented detail is coming to an end, but their work to learn more about the expansion of the universe has just begun. “Using the Dark Energy Camera, a 520-megapixel digital camera mounted on the Blanco 4-meter telescope at the Cerro Tololo Inter-American Observatory in Chile, scientists on DES took data for 758 nights over six years. Over those nights, the survey generated 50 terabytes (that’s 50 trillion bytes) of data over its six observation seasons. That data is stored and analyzed at NCSA. Compute power for the project comes from NCSA’s NSF-funded Blue Waters Supercomputer, the University of Illinois Campus Cluster, and Fermilab.”

Radio Free HPC Looks at the European Union’s Big Investment in Exascale

In this podcast, the Radio Free HPC team looks at the European Commission’s recent move to fund exascale development with 1 billion euros. “While Europe already had a number of exascale initiatives under way, this is a major step forward in that it puts up the money. Under a new legal and funding structure, the Commission’s contribution will be EUR 486 million, or roughly half of the projected EUR 1 Billion total.”

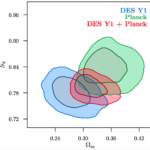

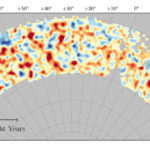

Dark Energy Survey Releases First Three Years of Data

Today scientists from the Dark Energy Survey (DES) released their first three years of data. This first major release of data from the Survey includes information on about 400 million astronomical objects, including distant galaxies billions of light-years away as well as stars in our own galaxy. “There are all kinds of discoveries waiting to be found in the data. While DES scientists are focused on using it to learn about dark energy, we wanted to enable astronomers to explore these images in new ways, to improve our understanding of the universe,” said Dark Energy Survey Data Management Project Scientist Brian Yanny of the U.S. Department of Energy’s Fermi National Accelerator Laboratory.

OSC Helps Map the Invisible Universe

The Ohio Supercomputer Center played a critical role in helping researchers reach a milestone in mapping the growth of the universe from its infancy to the present day. “The new results released Aug. 3 confirm the surprisingly simple but puzzling theory that the present universe is composed of only 4 percent ordinary matter, 26 percent mysterious dark matter, and the remaining 70 percent in the form of mysterious dark energy, which causes the accelerating expansion of the universe.”

Supercomputing the Dark Energy Survey at NCSA

Researchers are using NCSA supercomputers to explore the mysteries of dark matter. “NCSA recognized many years ago the key role that advanced computing and data management would have in astronomy and is thrilled with the results of this collaboration with campus and our partners at Fermilab and the National Optical Astronomy Observatory,” said NCSA Director Bill Gropp.