Exascale Computing Project’s EQSIM Team Helps Assess Infrastructure Earthquake Risk

As part of the US Department of Energy’s Exascale Computing Project (ECP), the Earthquake Simulation (EQSIM) application development team is creating a computational tool set and workflow for earthquake hazard and risk assessment that moves beyond traditional empirically based techniques, which depend on historical earthquake data. With software assistance from the ECP’s software technology group, the EQSIM team is working to give scientists and engineers the ability to simulate full end-to-end earthquake processes.

‘Let’s Talk Exascale’: How Supercomputing Is Shaking Up Earthquake Science

Supercomputing is bringing seismic change to earthquake science. A field that historically has predicted by looking back now is moving forward with HPC and physics-based models to comprehensively simulate the earthquake process, end to end. In this episode of the “Let’s Talk Exascale” podcast series from the U.S. Department of Energy’s Exascale Computing Project (ECP), David McCallen, leader of ECP’s Earthquake Sim (EQSIM) subproject, discusses his team’s work to help improve the design of more quake-resilient buildings and bridges.

XSEDE Supercomputers Simulate Tsunamis from Volcanic Events

Researchers at the University of Rhode Island are using XSEDE supercomputers to show that high-performance computer modeling can accurately simulate tsunamis from volcanic events. Such models could lead to early-warning systems that could save lives and help minimize catastrophic property damage. “As our understanding of the complex physics related to tsunamis grows, access to XSEDE supercomputers such as Comet allows us to improve our models to reflect that, whereas if we did not have access, the amount of time it would take to run such simulations would be prohibitive.”

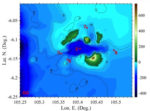

Supercomputing the San Andreas Fault with CyberShake

With help from DOE supercomputers, a USC-led team expands models of the fault system beneath its feet, aiming to predict its outbursts. For their 2020 INCITE work, SCEC scientists and programmers will have access to 500,000 node hours on Argonne’s Theta supercomputer, delivering as much as 11.69 petaflops. The team is using Theta “mostly for dynamic earthquake ruptures,” Goulet says. “That is using physics-based models to simulate and understand details of the earthquake as it ruptures along a fault, including how the rupture speed and the stress along the fault plane changes.”

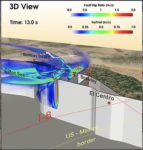

Video: Supercomputing Dynamic Earthquake Ruptures

Researchers are using XSEDE supercomputers to model multi-fault earthquakes in the Brawley fault zone, which links the San Andreas and Imperial faults in Southern California. Their work could predict the behavior of earthquakes that could potentially affect millions of people’s lives and property. “Basically, we generate a virtual world where we create different types of earthquakes. That helps us understand how earthquakes in the real world are happening.”

Gordon Bell Prize Highlights the Impact of AI

In this special guest feature from Scientific Computing World, Robert Roe reports on the Gordon Bell Prize finalists for 2018. “The finalists’ research ranges from AI to mixed precision workloads, with some taking advantage of the Tensor Cores available in the latest generation of Nvidia GPUs. This highlights the impact of AI and GPU technologies, which are opening up not only new applications to HPC users but also the opportunity to accelerate mixed precision workloads on large scale HPC systems.”

How HPC can Benefit Society

Sharan Kalwani writes that one of the main reasons he got into computing long ago was the potential he saw in using powerful new supercomputing tools to address the needs of the society we live in. “The point here is that technically the challenges are very tractable, however society needs to also grow at a comparable, if not higher pace than the technology (which is evolving faster than us).”

Video: Kathy Yelick from LBNL Testifies at House Hearing on Big Data Challenges and Advanced Computing

In this video, Kathy Yelick from LBNL describes why the US needs to accelerate its efforts to stay ahead in AI and Big Data Analytics. “Data-driven scientific discovery is poised to deliver breakthroughs across many disciplines, and the U.S. Department of Energy, through its national laboratories, is well positioned to play a leadership role in this revolution. Driven by DOE innovations in instrumentation and computing, however, the scientific data sets being created are becoming increasingly challenging to sift through and manage.”

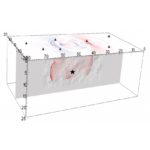

Unravelling Earthquake Dynamics through Extreme-Scale Multiphysics Simulations

Alice-Agnes Gabriel gave this talk at the PASC18 conference. “Earthquakes are highly non-linear multiscale problems, encapsulating geometry and rheology of faults within the Earth’s crust torn apart by propagating shear fracture and emanating seismic wave radiation. This talk will focus on using physics-based scenarios, modern numerical methods and hardware specific optimizations to shed light on the dynamics, and severity, of earthquake behavior.”

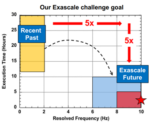

How Exascale will Move Earthquake Simulation Forward

In this video from the HPC User Forum in Tucson, David McCallen from LBNL describes how exascale computing capabilities will enhance earthquake simulation for improved structural safety. “With the major advances occurring in high performance computing, the ability to accurately simulate the complex processes associated with major earthquakes is becoming a reality. High performance simulations offer a transformational approach to earthquake hazard and risk assessments that can dramatically increase our understanding of earthquake processes and provide improved estimates of the ground motions that can be expected in future earthquakes.”