In this special guest feature, Thierry Pellegrino from Dell EMC writes that data analytics powered by HPC & AI solutions are delivering new insights for research and the enterprise. “HPC is clearly no longer reserved for large companies or research organizations. It is meant for those who want to achieve more innovation, discoveries, and the elusive competitive edge.”

Penguin Computing Breaks STAC-M3 Performance Records with WekaIO

Today Penguin Computing and WekaIO announced record performance on the STAC-M3 Benchmark. The STAC-M3 Antuco and Kanaga Benchmark Suites are the industry standard for testing solutions that enable high-speed analytics on time series data, such as tick-by-tick market data. “By combining Penguin Computing Relion servers and FrostByte Storage with the WekaIO File System, the companies have affirmed that this integrated solution is ideal for algorithmic trading and quantitative analysis workloads, common in financial services.”

High-Performance Big Data Analytics with RDMA over NVM and NVMe-SSD

Xiaoyi Lu from OSU gave this talk at the 2018 OpenFabrics Workshop. “The convergence of Big Data and HPC has been pushing the innovation of accelerating Big Data analytics and management on modern HPC clusters. Recent studies have shown that the performance of Apache Hadoop, Spark, and Memcached can be significantly improved by leveraging the high-performance networking technologies, such as Remote Direct Memory Access (RDMA). In this talk, we propose new communication and I/O schemes for these data analytics stacks, which are designed with RDMA over NVM and NVMe-SSD.”

David Bader from Georgia Tech Joins PASC18 Speaker Lineup

Today PASC18 announced that this year’s Public Lecture will be delivered by David Bader from Georgia Tech. Dr. Bader will speak on Massive-Scale Analytics Applied to Real-World Problems. “Emerging real-world graph problems include: detecting and preventing disease in human populations; revealing community structure in large social networks; and improving the resilience of the electric power grid. Unlike traditional applications in computational science and engineering, solving these social problems at scale often raises new challenges because of the sparsity and lack of locality in the data, the need for research on scalable algorithms and development of frameworks for solving these real-world problems on high performance computers, and for improved models that capture the noise and bias inherent in the torrential data streams. This talk will discuss the opportunities and challenges in massive data-intensive computing for applications in social sciences, physical sciences, and engineering.”

Rock Stars of HPC: DK Panda

As our newest Rock Star of HPC, DK Panda sat down with us to discuss his passion for teaching High Performance Computing. “During the last several years, HPC systems have been going through rapid changes to incorporate accelerators. The main software challenges for such systems have been to provide efficient support for programming models with high performance and high productivity. For NVIDIA-GPU based systems, seven years back, my team introduced a novel ‘CUDA-aware MPI’ concept. This paradigm allows complete freedom to application developers for not using CUDA calls to perform data movement.”

Dr. Eng Lim Goh presents: HPC & AI Technology Trends

Dr. Eng Lim Goh from Hewlett Packard Enterprise gave this talk at the HPC User Forum. “SGI’s highly complementary portfolio, including its in-memory high-performance data analytics technology and leading high-performance computing solutions will extend and strengthen HPE’s current leadership position in the growing mission critical and high-performance computing segments of the server market.”

The Long Rise of HPC in the Cloud

“As the cloud market has matured, we have begun to see the introduction of HPC cloud providers and even the large public cloud providers such as Microsoft are introducing genuine HPC technology to the cloud. This change opens up the possibility for new users that wish to either augment their current computing capabilities or take the initial plunge and try HPC technology without investing huge sums of money on an internal HPC infrastructure.”

Call for Proposals: Fortissimo Project

The European Fortissimo Project has issued its Second Call for Proposals. Fortissimo is a collaborative project that helps European SMEs become more competitive globally through simulation services running on high performance computing (HPC) cloud infrastructure.

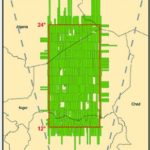

NASA Optimizes Climate Impact Research with Cycle Computing

Today Cycle Computing announced its continued involvement in optimizing research spearheaded by NASA’s Center for Climate Simulation (NCCS) and the University of Minnesota. Currently, a biomass measurement effort is underway in a coast-to-coast band of Sub-Saharan Africa, covering more than 10 million square kilometers of Africa’s trees, a swath of acreage bigger than the entirety […]

For SGI, This is Supercomputing at ISC 2016

In this video from ISC 2016, Gabriel Broner from SGI describes the company’s innovative solutions for high performance computing. “As the trusted leader in high performance computing, SGI helps companies find answers to the world’s biggest challenges. Our commitment to innovation is unwavering and focused on delivering market leading solutions in Technical Computing, Big Data Analytics, and Petascale Storage. Our solutions provide unmatched performance, scalability and efficiency for a broad range of customers.”