Today the InfiniBand Trade Association (IBTA) announced the public availability of the InfiniBand Architecture Specification Volume 1 Release 1.4 and Volume 2 Release 1.4. With these updates in place, the InfiniBand ecosystem will continue to grow and address the needs of next-generation HPC, AI, cloud and enterprise data center compute, and storage connectivity.

HDR 200Gb/s InfiniBand Sees Major Growth on Latest TOP500 List

Today the InfiniBand Trade Association (IBTA) reported that the latest TOP500 List results show HDR 200Gb/s InfiniBand accelerating 31 percent of new InfiniBand-based systems on the List, including the fastest TOP500 supercomputer built this year. The results also highlight InfiniBand’s continued position in the top three supercomputers in the world and acceleration of six of the top 10 systems. Since the TOP500 List release in June 2019, InfiniBand’s presence has increased by 12 percent, now accelerating 141 supercomputers on the List.

IBTA Celebrates 20 Years of Growth and Industry Success

“This year, the IBTA is celebrating 20 years of growth and success in delivering these widely used and valued technologies to the high-performance networking industry. Over the past two decades, the IBTA has provided the industry with technical specifications and educational resources that have advanced a wide range of high-performance platforms. InfiniBand and RoCE interconnects are deployed in the world’s fastest supercomputers and continue to significantly impact future-facing applications such as Machine Learning and AI.”

Video: Why InfiniBand is the Way Forward for AI and Exascale

In this video, Gilad Shainer from the InfiniBand Trade Association describes how InfiniBand offers the optimal interconnect technology for AI, HPC, and Exascale. “Through AI, you need the biggest pipes in order to move those giant amounts of data in order to create those AI software algorithms. That’s one thing. Latency is important because you need to drive things faster. RDMA is one of the key technologies that enables increasing the efficiency of moving data, reducing CPU overhead. And by the way, now, all of the AI frameworks that exist out there support RDMA as a default element within the framework itself.”

Why InfiniBand rules the roost in the TOP500

In this special guest feature, Bill Lee from the InfiniBand Trade Association writes that the new TOP500 list has a lot to say about how interconnects matter for the world’s most powerful supercomputers. “Once again, the List highlights that InfiniBand is the top choice for the most powerful and advanced supercomputers in the world, including the reigning #1 system – Oak Ridge National Laboratory’s (ORNL) Summit. The TOP500 List results not only report that InfiniBand accelerates the top three supercomputers in the world but is also the most used high-speed interconnect for the TOP500 systems.”

InfiniBand Powers World’s Fastest Supercomputer

Today the InfiniBand Trade Association (IBTA) announced that the latest TOP500 List results report that the world’s new fastest supercomputer, Oak Ridge National Laboratory’s Summit system, is accelerated by InfiniBand EDR. InfiniBand now powers the top three and four of the top five systems. The latest rankings underscore InfiniBand’s continued position as the interconnect of choice for the industry’s most demanding high performance computing (HPC) platforms. “As the makeup of the world’s fastest supercomputers evolve to include more non-HPC systems such as cloud and hyperscale, the IBTA remains confident in the InfiniBand Architecture’s flexibility to support the increasing variety of demanding deployments,” said Bill Lee, IBTA Marketing Working Group Co-Chair. “As evident in the latest TOP500 List, the reinforced position of InfiniBand among the most powerful HPC systems and growing prominence of RoCE-capable non-HPC platforms demonstrate the technology’s unparalleled performance capabilities across a diverse set of applications.”

InfiniBand Accelerates 77 Percent of New HPC Systems on the TOP500

“InfiniBand being the preferred interconnect for new HPC systems shows the increasing demand for the performance it can deliver. Its place at #1 and #4 are excellent examples of that performance,” said Bill Lee, IBTA Marketing Working Group Co-Chair. “Besides delivering world-leading performance and scalability, InfiniBand guarantees backward and forward compatibility, ensuring users highest return on investment and future proofing their data centers.”

InfiniBand Continues Momentum on Latest TOP500

Today the InfiniBand Trade Association (IBTA) announced that InfiniBand remains the most used HPC interconnect on the TOP500. Additionally, the majority of newly listed TOP500 supercomputers are accelerated by InfiniBand technology. These results reflect continued industry demand for InfiniBand’s unparalleled combination of network bandwidth, low latency, scalability and efficiency.

“As demonstrated on the June 2017 TOP500 supercomputer list, InfiniBand is the high-performance interconnect of choice for HPC and Deep Learning platforms,” said Bill Lee, IBTA Marketing Working Group Co-Chair. “The key capabilities of RDMA, software-defined architecture, and the smart accelerations that the InfiniBand providers have brought with their offering resulted in enabling world-leading performance and scalability for InfiniBand-connected supercomputers.”

RoCE Initiative Launches Online Product Directory

Today the RoCE Initiative at the InfiniBand Trade Association announced the availability of the RoCE Product Directory. The new online resource is intended to inform CIOs and enterprise data center architects about their options for deploying RDMA over Converged Ethernet (RoCE) technology within their Ethernet infrastructure.

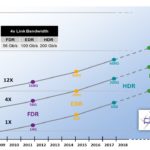

InfiniBand Roadmap Foretells a World Where Server Connectivity is at 1000 Gb/sec

The InfiniBand Trade Association (IBTA) has updated its InfiniBand Roadmap. With HDR 200 Gb/sec technologies shipping this year, the roadmap looks out to an XDR world where server connectivity reaches 1000 Gb/sec. “The IBTA’s InfiniBand roadmap is continuously developed as a collaborative effort from the various IBTA working groups. Members of the IBTA working groups include leading enterprise IT vendors who are actively contributing to the advancement of InfiniBand. The roadmap details 1x, 4x, and 12x port widths with bandwidths reaching 600Gb/s data rate HDR in 2017. The roadmap is intended to keep the rate of InfiniBand performance increase in line with systems-level performance gains.”