In this video from the Intel HPC Developer Conference, Prabhat from NERSC describes how high performance computing techniques are being used to scale Machine Learning to over 100,000 compute cores. “Using TB-sized datasets from three science applications: astrophysics, plasma physics, and particle physics, we show that our implementation can construct kd-tree of 189 billion particles in 48 seconds on utilizing ∼50,000 cores.”

Using Machine Learning to Avoid the Unwanted

In this video from the Intel HPC Developer Conference, Justin Gottschlich, PhD from Intel describes how the company doubling down on Anomaly Detection using Machine Learning and Intel technologies. “In this talk, we present future research directions at Intel Labs using deep learning for anomaly detection and management. We discuss the required machine learning characteristics for such systems, ranging from zero positive learning, automatic feature extraction, and real-time reinforcement learning. We also discuss the general applicability of such anomaly detection systems across multiple domains such as data centers, autonomous vehicles, and high performance computing.”

Accelerating Machine Learning on Intel Platforms

In this video from the Intel HPC Developer Conference, Ananth Sankaranarayanan from Intel describes how the company is optimizing Machine Learning frameworks for Intel platforms. Open source frameworks often are not optimized for a particular chip, but bringing Intel’s developer tools to bear can result in significant speedups. For meaningful impact and business value, organizations require that the time to train a deep learning model be reduced from weeks to hours. In this talk, we will present the details of the optimization and characterization of Intel-Caffe and the support of new deep learning convolutional neural network primitives in the Intel Math Kernel Library.”

Preparing Developers for Tomorrow’s Systems

In this special guest feature, Bill Mannel from Hewlett Packard Enterprise writes that upcoming Intel HPC Developer Conference in Salt Lake City is a great opportunity to learn about code modernization for the next generation of high performance computing applications. “As computing systems grow increasingly complex and new architecture designs become mainstream, training developers to write code which runs on future HPC systems will require a collaborative environment and the expertise of the best and brightest in the industry.”

Radio Free HPC Does the Day-by-Day SC16 Preview Show

In this podcast, the Radio Free HPC team previews the ancillary events around SC16 in Salt Lake City. With a full week in store, this could be the best conference yet. After our event roundup, they share their predictions for SC16 total attendance numbers.

Keynotes Announced for Intel HPC Developer Conference at SC16

The Intel HPC Developer Conference at SC16 has announced its keynote speakers. Jonathan Cohen and Kai Li from Princeton will present, Going Where Neuroscience and Computer Science Have Not Gone Before. “Taking place Nov. 12-13 in Salt Lake City, the Intel HPC Developer Conference will bring together developers from around the world to discuss code modernization in high-performance computing.”

2016 Intel HPC Developer Conference Addresses In-Demand Topics

Supercomputing developers and experts from around the globe will converge on Salt Lake City, Utah for the 2016 Intel® HPC Developer Conference on November 12-13 – just prior to SC ‘16. Conference attendance is free, however, those interested in attending should register quickly as Intel is expecting a big response, reflecting the broadening demand for HPC learning opportunities among technical developers. road on to learn about the incredible presenter lineup this year.

Call for Proposals: Intel HPC Developer Conference at SC16

The Intel HPC Developer Conference has issued its Call for Proposals. Held in conjunction with SC16, the event takes place Nov. 12-13 in Salt Lake City.

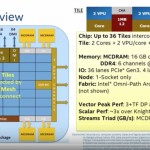

Video: MCDRAM (High Bandwidth Memory) on Knights Landing

“The Intel’s next generation Xeon Phi processor family x200 product (code-name Knights Landing) brings in new memory technology, a high bandwidth on package memory called Multi-Channel DRAM (MCDRAM) in addition to the traditional DDR4. MCDRAM is a high bandwidth (~4x more than DDR4), low capacity (up to 16GB) memory, packaged with the Knights Landing Silicon. MCDRAM can be configured as a third level cache (memory side cache) or as a distinct NUMA node (allocatable memory) or somewhere in between. With the different memory modes by which the system can be booted, it becomes very challenging from a software perspective to understand the best mode suitable for an application.”

Video: A Brief Introduction to OpenFabrics

Sean Hefty from Intel presented this talk at the Intel HPC Developer Conference at SC15. “OpenFabrics Interfaces (OFI) is a framework focused on exporting fabric communication services to applications. OFI is best described as a collection of libraries and applications used to export fabric services. The key components of OFI are: application interfaces, provider libraries, kernel services, daemons, and test applications. Libfabric is a core component of OFI. It is the library that defines and exports the user-space API of OFI, and is typically the only software that applications deal with directly. It works in conjunction with provider libraries, which are often integrated directly into libfabric.”