NERSC is among the early adopters of the new A100 Tensor Core GPU that NVIDIA announced this week. More than 6,000 of the A100 chips will be included in NERSC’s next-generation Perlmutter system, which is based on an HPE Cray Shasta supercomputer that will be deployed at Lawrence Berkeley National Laboratory later this year. “Nearly half of the workload running at NERSC is poised to take advantage of GPU acceleration, and NERSC, HPE, and NVIDIA have been working together over the last two years to help the scientific community prepare to leverage GPUs for a broad range of research workloads.”

NERSC Finalizes Contract for Perlmutter Supercomputer

NERSC has moved another step closer to making Perlmutter — its next-generation GPU-accelerated supercomputer — available to the science community in 2020. In mid-April, NERSC finalized its contract with Cray — which was acquired by Hewlett Packard Enterprise (HPE) in September 2019 — for the new system, a Cray Shasta supercomputer that will feature 24 […]

Video: Why Supercomputers Are A Vital Tool In The Fight Against COVID-19

In this video from Forbes, Horst Simon from LBNL describes how supercomputers are being used for coronavirus research. “Computing is stepping up to the fight in other ways too. Some researchers are crowdsourcing computing power to try to better understand the dynamics of the protein and a dataset of 29,000 research papers has been made available to researchers leveraging artificial intelligence and other approaches to help tackle the virus. IBM has launched a global coding challenge that includes a focus on COVID-19 and Amazon has said it will invest $20 million to help speed up coronavirus testing.”

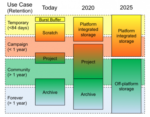

NERSC Rolls Out New Community File System for Next-Gen HPC

NERSC recently unveiled its new Community File System (CFS), a long-term data storage tier developed in collaboration with IBM that is optimized for capacity and manageability. “In the next few years, the explosive growth in data coming from exascale simulations and next-generation experimental detectors will enable new data-driven science across virtually every domain. At the same time, new nonvolatile storage technologies are entering the market in volume and upending long-held principles used to design the storage hierarchy.”

NERSC Supercomputer to Help Fight Coronavirus

“NERSC is a member of the COVID-19 High Performance Computing Consortium. In support of the Consortium, NERSC has reserved a portion of its Director’s Discretionary Reserve time on Cori, a Cray XC40 supercomputer, to support COVID-19 research efforts. The GPU partition on Cori was installed to help prepare applications for the arrival of Perlmutter, NERSC’s next-generation system that is scheduled to begin arriving later this year and will rely on GPUs for much of its computational power.”

MLPerf-HPC Working Group seeks participation

In this special guest feature, Murali Emani from Argonne writes that a team of scientists from DOE labs has formed a working group called MLPerf-HPC to focus on benchmarking machine learning workloads for high performance computing. “As machine learning (ML) is becoming a critical component to help run applications faster, improve throughput and understand the insights from the data generated from simulations, benchmarking ML methods with scientific workloads at scale will be important as we progress towards next generation supercomputers.”

LBNL Breaks New Ground in Data Center Optimization

Berkeley Lab has been at the forefront of efforts to design, build, and optimize energy-efficient hyperscale data centers. “In the march to exascale computing, there are real questions about the hard limits you run up against in terms of energy consumption and cooling loads,” Elliott said. “NERSC is very interested in optimizing its facilities to be leaders in energy-efficient HPC.”

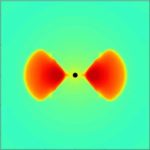

Supercomputing a Neutron Star Merger

Scientists are getting better at modeling the complex tangle of physics properties at play in one of the most powerful events in the known universe: the merger of two neutron stars. “We’re starting from a set of physical principles, carrying out a calculation that nobody has done at this level before, and then asking, ‘Are we reasonably close to observations or are we missing something important?’” said Rodrigo Fernández, a co-author of the latest study and a researcher at the University of Alberta.

Department of Energy to Showcase World-Leading Science at SC19

The DOE’s national laboratories will be showcased at SC19 next week in Denver, CO. “Computational scientists from DOE laboratories have been involved in the conference since it began in 1988 and this year’s event is no different. Experts from the 17 national laboratories will be sharing a booth featuring speakers, presentations, demonstrations, discussions, and simulations. DOE booth #925 will also feature a display of high performance computing artifacts from past, present and future systems. Lab experts will also contribute to the SC19 conference program by leading tutorials, presenting technical papers, speaking at workshops, leading birds-of-a-feather discussions, and sharing ideas in panel discussions.”

Epic HPC Road Trip stops at NERSC for a look at Big Network and Storage Challenges

In this special guest feature, Dan Olds from OrionX continues his Epic HPC Road Trip series with a stop at NERSC. “NERSC is unusual in that they receive more data than they send out. Client agencies process their raw data on NERSC systems and then export the results to their own organizations. This puts a lot of pressure on storage and network I/O, making them top priority at NERSC.”