Barcelona, February 8, 2023 – HPCNow! has announced a real-time HPC cluster monitoring capability. The monitoring stack combines open-source tools such as Grafana, Elasticsearch and Prometheus, used for visualization and data storage, with Slurm plugins and custom scripts that gather the information system administrators need. It is delivered using Docker Compose for single-node […]

Liqid Announces Slurm Workload Manager Integration for HPC Workloads

Liqid, provider of a composable disaggregated infrastructure (CDI) platform, today announced dynamic Slurm Workload Manager integration for its Liqid Matrix Software, a tool for HPC deployments designed to optimize resource utilization for artificial intelligence (AI) workloads. Liqid uses the Slurm integration to compose servers at job submission time from pools of compute, storage and GPU resources via […]

vScaler Integrates SLURM with GigaIO FabreX for Elastic HPC Cloud Device Scaling

Open-source private HPC cloud specialist vScaler today announced the integration of the SLURM workload manager with GigaIO’s FabreX for elastic scaling of PCI devices and HPC disaggregation. FabreX, which GigaIO describes as the “first in-memory network,” supports vScaler’s private cloud appliances for workloads such as deep learning, biotechnology and big data analytics. vScaler’s disaggregated […]

Video: State of ARM-based HPC

In this video, Paul Isaacs from Linaro presents: State of ARM-based HPC. “This talk provides an overview of applications and infrastructure services successfully ported to AArch64 and benefiting from scale.” “With its debut on the TOP500, the 125,000-core Astra supercomputer at New Mexico’s Sandia Labs uses Cavium ThunderX2 chips to mark Arm’s entry into the petascale world. In Japan, the Fujitsu A64FX Arm-based CPU in the pending Fugaku supercomputer has been optimized to achieve high-level, real-world application performance, anticipating up to one hundred times the application execution performance of the K computer.”

UKRI Awards ARCHER2 Supercomputer Services Contract

UKRI has awarded contracts to run elements of the next national supercomputer, ARCHER2, which will represent a significant step forward in capability for the UK’s science community. ARCHER2 is provided by UKRI, EPCC, Cray (an HPE company) and the University of Edinburgh. “ARCHER2 will be a Cray Shasta system with an estimated peak performance of 28 PFLOP/s. The machine will have 5,848 compute nodes, each with dual AMD EPYC Zen2 (Rome) 64 core CPUs at 2.2GHz, giving 748,544 cores in total and 1.57 PBytes of total system memory.”
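The ARCHER2 core count quoted above follows directly from the node and socket figures (5,848 nodes × 2 sockets × 64 cores). A minimal Python sketch of that arithmetic, using only the numbers in the announcement; the helper name `total_cores` is hypothetical and nothing here reflects measured system data:

```python
# Sanity check of the ARCHER2 core count quoted in the announcement.
# All figures come from the quote; nothing here is measured.
NODES = 5_848           # compute nodes
SOCKETS_PER_NODE = 2    # "dual" AMD EPYC Zen2 (Rome) CPUs per node
CORES_PER_SOCKET = 64   # 64-core parts


def total_cores(nodes: int, sockets: int, cores: int) -> int:
    """Hypothetical helper: total cores in a homogeneous cluster."""
    return nodes * sockets * cores


if __name__ == "__main__":
    cores = total_cores(NODES, SOCKETS_PER_NODE, CORES_PER_SOCKET)
    print(f"{cores:,} cores")  # prints "748,544 cores"
```

The result matches the 748,544 cores stated for the system.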

Distributed HPC Applications with Unprivileged Containers

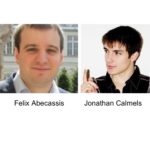

Felix Abecassis and Jonathan Calmels from NVIDIA gave this talk at FOSDEM 2020. “We will present the challenges in doing distributed deep learning training at scale on shared heterogeneous infrastructure. At NVIDIA, we use containers extensively in our GPU clusters for both HPC and deep learning applications. We love containers for how they simplify software packaging and enable reproducibility without sacrificing performance.”

What to expect at SC19

In this special guest feature, Dr. Rosemary Francis gives her perspective on what to look for at the SC19 conference next week in Denver. “There are always many questions circling the HPC market in the run-up to Supercomputing. In 2019, the focus is even more on the cloud than in previous years. Here are a few of the topics that could occupy your coffee queue conversations in Denver this year.”

Harvard Names New Lenovo HPC Cluster after Astronomer Annie Jump Cannon

Harvard has deployed a liquid-cooled supercomputer from Lenovo at its FASRC computing center. The system, named “Cannon” in honor of astronomer Annie Jump Cannon, is a large-scale HPC cluster supporting scientific modeling and simulation for thousands of Harvard researchers. “This new cluster will have 30,000 cores of Intel 8268 “Cascade Lake” processors. Each node will have 48 cores and 192 GB of RAM.”

Job of the Week: Software Developer/SLURM System Administrator at Adaptive Computing

Adaptive Computing is seeking a Software Developer/SLURM System Administrator in our Job of the Week. The position is located in Naples, Florida. “This is an excellent opportunity to be part of the core team in a rapidly growing company. The company enjoys a rock-solid industry reputation in HPC Workload Scheduling and Cloud Management Solutions. Adaptive Computing works with some of the largest commercial enterprises, government agencies, and academic institutions in the world.”