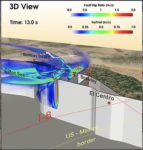

Researchers are using XSEDE supercomputers to model multi-fault earthquakes in the Brawley fault zone, which links the San Andreas and Imperial faults in Southern California. Their work could help predict the behavior of earthquakes that threaten the lives and property of millions of people. “Basically, we generate a virtual world where we create different types of earthquakes. That helps us understand how earthquakes in the real world are happening.”

New Texascale Magazine from TACC looks at HPC for the Endless Frontier

This feature story describes how the computational power of Frontera will be a game changer for research. Late last year, the Texas Advanced Computing Center announced plans to deploy Frontera, the world’s fastest supercomputer in academia. To prepare for launch, TACC just published the inaugural edition of Texascale, an annual magazine with stories that highlight the people, science, systems, and programs that make TACC one of the leading academic computing centers in the world.

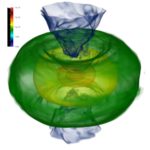

Supercomputing Neutron Star Structures and Mergers

Over at XSEDE, Kimberly Mann Bruch & Jan Zverina from the San Diego Supercomputer Center write that researchers are using supercomputers to create detailed simulations of neutron star structures and mergers to better understand gravitational waves, which were detected for the first time in 2015. “XSEDE resources significantly accelerated our scientific output,” noted Paschalidis, whose group members have been using XSEDE for well over a decade, since they were students or post-doctoral researchers. “If I were to put a number on it, I would say that using XSEDE accelerated our research by a factor of three or more, compared to using local resources alone.”

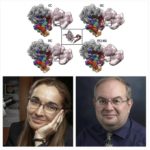

Fighting the West Nile Virus with HPC & Analytical Ultracentrifugation

Researchers are using new techniques with HPC to learn more about how the West Nile virus replicates inside the brain. Over several years, Demeler has developed analysis software for experiments performed with analytical ultracentrifuges. The goal is to facilitate the extraction of all of the information possible from the available data. “To do this, we developed very high-resolution analysis methods that require high performance computing to access this information,” he said. “We rely on HPC. It’s absolutely critical.”

Podcast: A Retrospective on Great Science and the Stampede Supercomputer

TACC will soon deploy Phase 2 of the Stampede2 supercomputer. In this podcast, they celebrate by looking back on some of the great science computed on the original Stampede machine. “In 2017, the Stampede supercomputer, funded by the NSF, completed its five-year mission to provide world-class computational resources and support staff to more than 11,000 U.S. users on over 3,000 projects in the open science community. But what made it special? Stampede was like a bridge that moved thousands of researchers off of soon-to-be decommissioned supercomputers, while at the same time building a framework that anticipated the eminent trends that came to dominate advanced computing.”

Supercomputing DNA Packing in Nuclei at TACC

Aaron Dubrow writes that researchers at the University of Texas Medical Branch are exploring DNA folding and cellular packing with supercomputing power from TACC. “In the field of molecular biology, there’s a wonderful interplay between theory, experiment and simulation,” Pettitt said. “We take parameters of experiments and see if they agree with the simulations and theories. This becomes the scientific method for how we now advance our hypotheses.”

Podcast: Combining Cryo-electron Microscopy with Supercomputer Simulation

Scientists have taken the closest look yet at molecule-sized machinery called the human preinitiation complex, which opens up DNA so that genes can be copied and turned into proteins. The science team came from Northwestern University, Lawrence Berkeley National Laboratory, Georgia State University, and UC Berkeley. They combined a cutting-edge technique called cryo-electron microscopy with supercomputer analysis, and published their results in May 2016 in the journal Nature.

Podcast: Supercomputing Better Soybeans

In this TACC Podcast, researchers describe how XSEDE supercomputing resources are helping them grow a better soybean through the SoyKB project, based at the University of Missouri-Columbia. “The way resequencing is conducted is to chop the genome in many small pieces and see the many, many combinations of small pieces,” said Xu. “The data are huge, millions of fragments mapped to a reference. That’s actually a very time consuming process. Resequencing data analysis takes most of our computing time on XSEDE.”

Podcast: Supercomputing Better Ways to Produce Gamma Rays

In this podcast, researchers from the University of Texas at Austin discuss how they are using TACC supercomputers to find a new way to make controlled beams of gamma rays. “The simulations done on the Stampede and Lonestar systems at TACC will guide a real experiment later this summer in 2016 with the recently upgraded Texas Petawatt Laser, one of the most powerful in the world. The scientists say the quest for producing gamma rays from non-radioactive materials will advance basic understanding of things like the inside of stars. What’s more, gamma rays are used by hospitals to eradicate cancer, image the brain, and they’re used to scan cargo containers for terrorist materials. Unfortunately no one has yet been able to produce gamma ray beams from non-radioactive sources. These scientists hope to change that.”

Podcast: Supercomputing Gels with Stampede

In this TACC Podcast, Jorge Salazar looks at how researchers are using the Stampede supercomputer to shed light on the microscale world of colloidal gels, in which a liquid is dispersed in a solid medium. “Colloidal gels are actually soft solids, but we can manipulate their structure to produce ‘on-demand’ transitions from liquid-like to solid-like behavior that can be reversed many times,” Zia said. Zia is an Assistant Professor of Chemical and Biomolecular Engineering at Cornell University.