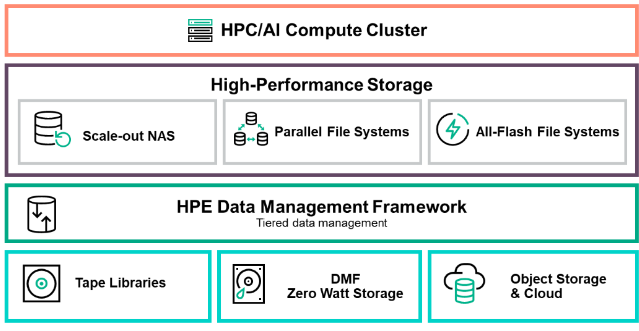

In this edition of Industry Perspectives, HPE explores the next generation of data management requirements as the growing volume of data places even more demand on data management capabilities.

Next-generation workloads in high performance computing (HPC) involve more unstructured data than ever before. Single files can reach the multi-petabyte range, a directory can hold millions of files, a file system can possibly hold billions, and a single file system can require hundreds of petabytes of capacity. This sheer volume of data places ever greater demands on data management capabilities to streamline data workflows, minimize total cost of ownership (TCO) and maintain data integrity.

Simply gathering files to create a data set can be problematic. Just running “ls” to list the files in a directory can take minutes to complete. And knowing a file's name may not be enough to decide whether it belongs in a data set.
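As a rough illustration of selecting files by metadata rather than by name, the sketch below walks a directory tree lazily with Python's standard `os.scandir` and yields only the files that pass a caller-supplied test. The names `select_files` and `larger_than` are hypothetical helpers invented for this example. This is not how HPE Data Management Framework works internally, only a small-scale picture of the idea.

```python
import os
from typing import Callable, Iterator


def select_files(root: str, keep: Callable[[os.DirEntry], bool]) -> Iterator[str]:
    """Lazily walk `root` and yield paths of files for which `keep` is true."""
    stack = [root]
    while stack:
        current = stack.pop()
        # scandir streams entries instead of building one full listing in memory.
        with os.scandir(current) as entries:
            for entry in entries:
                if entry.is_dir(follow_symlinks=False):
                    stack.append(entry.path)
                elif entry.is_file(follow_symlinks=False) and keep(entry):
                    yield entry.path


def larger_than(min_bytes: int) -> Callable[[os.DirEntry], bool]:
    """Hypothetical predicate: select on size metadata, not on the file name."""
    return lambda entry: entry.stat(follow_symlinks=False).st_size >= min_bytes
```

A call such as `select_files("/data/run42", larger_than(1 << 30))` (the path is a placeholder) would stream matching paths one at a time. At the scale described here, a per-file scan like this would still be slow, which is why an indexed metadata catalog is the usual alternative.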

Once a data set has been created, it must be moved to an appropriate staging location for processing, and then moved after processing to an appropriate location for review. Data movement is an integral part of data management, and ideally, this entire data workflow could be automated to streamline the process and minimize manual intervention.

After data is no longer active, it must be archived. Typical incremental backup windows are too small for petabytes of data, putting valuable data at risk of loss or corruption. Continuous backup strategies and less conventional methods, such as dynamic file systems, offer new ways to address these issues.

These huge volumes of data also require optimized utilization of storage resources. Old data needs to be swept off active resources, and performance must be maximized, to optimize TCO. Simply adding capacity does not help much: it is like a library adding shelves to house more books. Without a management scheme to organize and locate things, adding capacity just creates a bigger problem.
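To make "sweeping old data off active resources" concrete, here is a minimal sketch that lists files not modified within a given number of days, oldest first, along with their sizes. The function name `cold_files` and the age-based rule are assumptions made for illustration. A real tiering policy would likely weigh access patterns, project ownership and other metadata as well.

```python
import os
import time
from typing import List, Optional, Tuple


def cold_files(root: str, max_age_days: float,
               now: Optional[float] = None) -> List[Tuple[str, int]]:
    """Return (path, size) for files not modified within max_age_days, oldest first."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400  # seconds per day
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            info = os.lstat(path)  # do not follow symlinks
            if info.st_mtime < cutoff:
                found.append((info.st_mtime, path, info.st_size))
    found.sort()  # oldest modification time first
    return [(path, size) for _mtime, path, size in found]
```

Summing the sizes in the result gives an estimate of how much active capacity a migration to a cheaper tier would free.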

Learn how the HPE Data Management Framework provides a data management platform that helps to organize and manage data, streamline data workflows and minimize storage TCO.

The exciting new version of HPE Data Management Framework offers a next generation data management platform with new functionality to handle petabyte-scale data.

Read about next generation data management in a Hyperion Research Technology Spotlight.

To learn more about HPE Data Management Framework, see here.