BROMONT, QC, April 26, 2024 — IBM, the government of Canada and the government of Quebec today announced agreements to develop the assembly, testing and packaging (ATP) capabilities for semiconductor modules for telecommunications, high performance computing (HPC), automotive, aerospace and defence, computer networks and generative AI, at IBM Canada’s plant in Bromont, Quebec. The agreements reflect […]

Nvidia AI Enterprise Available on Oracle US Government Cloud

April 25, 2024 — Oracle today announced that Nvidia AI Enterprise on Oracle Cloud Infrastructure Supercluster is now available in the Oracle U.S. Government Cloud region to help address sovereign AI. Building on the expansion of their partnership, Oracle and Nvidia are helping U.S. government customers train and deploy AI solutions with access to Oracle Cloud Infrastructure’s 100+ services, […]

CINECA Selects E4 Computer with Dell and Vast for Galileo 100 HPC Upgrade

April 23, 2024 — E4 Computer Engineering has won the contract to upgrade Galileo 100, CINECA Interuniversity Consortium’s supercomputer and Tier-1 cloud system for public scientific research capable of serving over 5000 users. Among the requirements for the new HPC infrastructure are increasing the number of vCPUs for VM services by at least 10 times, […]

DOD to Leverage Intel Foundry’s Advanced Process Technology

DOD has awarded Phase Three of its Rapid Assured Microelectronics Prototypes – Commercial program to Intel Foundry. This means RAMP-C customers can manufacture prototypes on Intel 18A, the company’s most advanced process technology. Although Intel and DOD did not disclose the dollar….

Eviden Delivers 104 Pflops Nvidia-Powered EXA1-HE Supercomputer for CEA

Paris – April 17, 2024 – CEA (The French Alternative Energies and Atomic Energy Commission) and Eviden, the Atos advanced computing business unit, today announce the delivery of the EXA1 HE supercomputer, based on Eviden’s BullSequana….

HPC News Bytes 20240408: Chips Ahoy! …and Quantum Error Rate Progress

A good April morning to you! Chips dominate the HPC-AI news landscape, which has become something of an industry commonplace of late, including: TSMC’s Arizona fab on schedule, the Dutch government makes a pitch to ASML, Intel foundry business’s losses, TSMC expands CoWoS capacity, SK hynix to invest in Indiana and Purdue, and Quantinuum and Microsoft report 14,000 error-free instances.

Asmeret Asefaw Berhe Issues Letter of Farewell as Director of DOE Office of Science

Following the news last week of her departure as director of the U.S. Department of Energy’s Office of Science, a post she had held since May 2022, Dr. Asmeret Asefaw Berhe has issued a letter of farewell to her agency and White House colleagues. Dr. Berhe is currently on leave from the….

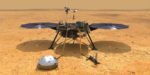

Exascale Software and NASA’s ‘Nerve-Racking 7 Minutes’ with Mars Landers

NASA supercomputers have helped several Mars landers survive the nerve-racking seven minutes of terror. During this hair-raising interval, a lander enters the Martian atmosphere and needs to automatically execute many crucial actions in precise order….

ALCF Announces Online AI Testbed Training Workshops

The Argonne Leadership Computing Facility has announced a series of training workshops to introduce users to the novel AI accelerators deployed at the ALCF AI Testbed. The four individual workshops will introduce participants to the architecture and software of the SambaNova DataScale SN30, the Cerebras CS-2 system, the Graphcore Bow Pod system, and the GroqRack […]

EuroHPC JU Energy Efficiency Project REGALE Comes to End

Munich, 26 March 2024 – This March marks the end of REGALE, a European project, funded by the EuroHPC Joint Undertaking, which has carried out research into the development of new software for high performance computing (HPC) centres with a focus on energy efficiency. After three years of research, the project now provides a toolchain that […]