A good spring morning to you! Here’s a rapid (6:09) route through recent news from HPC-AI, including: new supercomputers installed at Los Alamos and France-CEA, Stanford AI Index Report released on the state of AI….

HPC News Bytes 20240422: New HPC Installs, the State of AI, Bitcoin Halving, Argonne-UIC’s Crabtree Institute

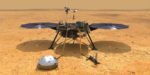

Exascale Software and NASA’s ‘Nerve-Racking 7 Minutes’ with Mars Landers

NASA supercomputers have helped several Mars landers survive the nerve-racking seven minutes of terror. During this hair-raising interval, a lander enters the Martian atmosphere and needs to automatically execute many crucial actions in precise order….

Italian Energy Company Eni Acquiring 600 PFLOPS AMD-Powered HPE-Cray EX HPC System

Italian energy giant Eni, long in the vanguard of commercial adoption of supercomputing, announced it is acquiring a monstrous 600 Pflops HPE-Cray EX4000 HPC system consisting of 3,472 nodes, each with a 64-core AMD EPYC CPU and four AMD Instinct….

AMD-based HPC Systems on TOP500 Grow 39%

SANTA CLARA, Calif. — Nov. 14, 2023 — Today, AMD said its microprocessors now power 140 supercomputers on the latest Top500 list, representing a 39 percent year-over-year increase. Additionally, AMD powers 80 percent of the top 10 most energy-efficient supercomputers in the world based on the latest Green500 list. “AMD technology continues to be […]

NOAA Supercomputing Capacity Expanded for Advanced National Weather Forecasting

FALLS CHURCH, Va. – The computing capacity of twin supercomputers used by the National Oceanic and Atmospheric Administration (NOAA) has been expanded by 20 percent….

LLNL: 9,000 Exascale Nodes for Power Grid Optimization

Ensuring the nation’s electrical power grid can function with limited disruptions in the event of a natural disaster, catastrophic weather or a manmade attack is a key national security challenge. Compounding the challenge of grid management is the increasing number of renewable energy sources, such as solar and wind, that are continually added to the […]

New TOP500 HPC List: Frontier Extends Lead with Performance Upgrade

After a flurry of new competitors in 2022 at the top of the TOP500 list of the world’s most powerful supercomputers, the first list of 2023 – issued here in Hamburg this morning at the ISC conference – reveals the same top 10 systems in the same order. Still, the HPC community will no doubt […]

HPE to Build 67 PFLOPS TSUBAME4.0 HPC for AI-Driven Science at Tokyo Tech

TOKYO – May 19, 2023 – Hewlett Packard Enterprise (NYSE: HPE) today announced that it was selected by the Tokyo Institute of Technology (Tokyo Tech) Global Scientific Information and Computing Center (GSIC) to build its next-generation supercomputer, TSUBAME4.0, to accelerate AI-driven scientific discovery in medicine, materials science, climate research, and turbulence in urban environments. TSUBAME4.0 will be built using HPE […]

NERSC RFP: 40 ExaFLOPS Mixed Precision Expected from Perlmutter AI Supercomputer Successor

Last August, NERSC (the National Energy Research Scientific Computing Center) at Lawrence Berkeley National Laboratory announced its intent to commission “NERSC-10,” a next-generation supercomputer to be delivered “in the 2026 timeframe” for the U.S. Department of Energy Office of Science research community. The system will succeed Perlmutter, the 6,000 NVIDIA GPU-powered (with AMD CPUs), 4-exaFLOPS […]

NOAA, ORNL Launch 10 PFLOPS HPE Cray HPC System for Climate Research

April 12, 2023 — Oak Ridge National Laboratory, in partnership with the National Oceanic and Atmospheric Administration, is launching a supercomputer dedicated to climate science research. The system is the fifth supercomputer to be installed and run by the National Climate-Computing Research Center at ORNL. The NCRC was established in 2009 as part of a […]