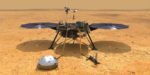

NASA supercomputers have helped several Mars landers survive the nerve-racking seven minutes of terror. During this hair-raising interval, a lander enters the Martian atmosphere and needs to automatically execute many crucial actions in precise order….

Exascale Software and NASA’s ‘Nerve-Racking 7 Minutes’ with Mars Landers

HPC News Bytes 20240325: Final GTC Thoughts, Intel and CHIPS Act Largesse, Ultra Ethernet Consortium Expands, Samsung’s GPU/HBM Chip

A happy end-of-March morning to you! Here’s a rapid (5:57) run-through of the latest news in HPC-AI, including final reflections on Nvidia GTC 2024, Intel to receive $8.5 billion via the US CHIPS Act, the expanding Ultra Ethernet Consortium, and Samsung’s upcoming GPU/HBM blend.

Argonne: ATPESC Training Application Deadline Extended to March 10

The application deadline for the Argonne Training Program on Extreme-Scale Computing (ATPESC) has been extended to Sunday, March 10. This year marks the 12th year for ATPESC, which provides intensive, two-week training on the key skills, approaches, and tools to design, implement, and execute computational science and engineering applications on current high-end computing systems […]

Eviden Caught up in Atos’ Financial, Acquisition Struggles

With a longstanding TOP500 supercomputing heritage, a leadership position in European supercomputing, a commitment to build Europe’s first exascale-class system and with major techno-geopolitical stakes on the line, the financial difficulties of Atos and, by extension, its Eviden HPC unit….

Exascale’s New Software Frontier: ExaSGD

“Exascale’s New Frontier,” a project from the Oak Ridge Leadership Computing Facility, explores the new applications and software technology for driving scientific discoveries in the exascale era. The Scientific Challenge: As more renewable sources of energy are added to the national power grid, it becomes more complex to manage. That’s because renewables such as wind […]

HPC System Analyst Jackie Scoggins Reflects on Her Time at NERSC

As part of the 50th anniversary celebrations for the National Energy Research Scientific Computing Center (NERSC), “In Their Own Words” is a Q&A series featuring voices from across the NERSC landscape, past and present, about their experiences at NERSC. Jackie Scoggins arrived at NERSC in 1996 as a system analyst and administrator for the Computational […]

Exascale: Bringing Engineering and Scientific Acceleration to Industry

At SC23, held in Denver, Colorado, last November, members of the Exascale Computing Project’s (ECP) Industry and Agency Council, composed of U.S. business executives, government agencies, and independent software vendors, reflected on how ECP and the move to exascale are impacting current and planned use of HPC….

IQM Quantum Reports Benchmarks on 20-Qubit System

Espoo, Finland, 20th February 2024 – IQM Quantum Computers announced the latest benchmarks measured on its 20-qubit quantum computer. Among the system-level benchmarks IQM obtained:

- Quantum Volume (QV) of 2^5 = 32
- Circuit Layer Operations Per Second (CLOPS) of 2600
- 20-qubit GHZ state with fidelity greater than 0.5
- Q-score of 11

Quantum volume (QV) is a benchmark that […]
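To make the “2^5 = 32” figure concrete: in the commonly used quantum volume protocol, random square circuits (width n, depth n) are run, and a width passes when the measured “heavy output” probability exceeds 2/3; QV is then 2^n for the largest passing width, so QV = 32 corresponds to n = 5. The sketch below is a simplified illustration under that assumption, not IQM’s benchmarking code. The function names are hypothetical, and the statistical confidence margin required by the full protocol is deliberately omitted.

```python
from statistics import median


def heavy_output_probability(ideal_probs: dict[str, float], counts: dict[str, int]) -> float:
    """Fraction of measured shots that land in the heavy set.

    The heavy set is every bitstring whose ideal (noise-free) probability
    is strictly greater than the median of the ideal output distribution.
    """
    threshold = median(ideal_probs.values())
    heavy = {bits for bits, p in ideal_probs.items() if p > threshold}
    total = sum(counts.values())
    hits = sum(n for bits, n in counts.items() if bits in heavy)
    return hits / total


def passes_width(hops: list[float], threshold: float = 2 / 3) -> bool:
    # Simplified pass criterion (assumption): the mean heavy output
    # probability over random circuits must exceed 2/3. The real protocol
    # also requires a confidence margin, which this sketch omits.
    return sum(hops) / len(hops) > threshold


def quantum_volume(results_by_width: dict[int, list[float]]) -> int:
    # QV = 2**n for the largest square-circuit width n that passes;
    # returns 1 (2**0) if no width passes.
    passing = [n for n, hops in results_by_width.items() if passes_width(hops)]
    return 2 ** max(passing) if passing else 1


if __name__ == "__main__":
    ideal = {"00": 0.4, "01": 0.3, "10": 0.2, "11": 0.1}
    counts = {"00": 45, "01": 30, "10": 15, "11": 10}
    print(heavy_output_probability(ideal, counts))  # heavy set is {"00", "01"}
    print(quantum_volume({2: [0.8, 0.75], 3: [0.7, 0.72], 4: [0.6, 0.65]}))
```

In the usage example, widths 2 and 3 pass (mean heavy output probabilities of 0.775 and 0.71) while width 4 does not (0.625), so the sketch reports a quantum volume of 2^3 = 8.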

ALCF Developer Session Feb. 28: Aurora’s Exascale Compute Blade

On Wednesday, Feb. 28, the Argonne Leadership Computing Facility will hold a webinar from 11 a.m. to noon CT on the compute blade of the Aurora exascale supercomputer. Led by ALCF’s Servesh Muralidharan, the session will cover details of the blade’s components and the flow of data between them, […]

HPC User Forum Announces Agenda for April 9-10 Event in Reston, VA

ST. PAUL, Minn., February 12, 2024 – The HPC User Forum, established in 1999 to promote the health of the global HPC industry and address issues of common concern to users, has published an updated agenda spotlighting the featured speakers for its upcoming meeting on Tuesday and Wednesday, April 9-10, at the Hyatt Regency […]