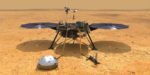

NASA supercomputers have helped several Mars landers survive the nerve-racking seven minutes of terror. During this hair-raising interval, a lander enters the Martian atmosphere and needs to automatically execute many crucial actions in precise order….

Exascale Software and NASA’s ‘Nerve-Racking 7 Minutes’ with Mars Landers

Exascale Computing Project: Leveraging HPC and Neural Networks for Cancer Research

What happens when Department of Energy (DOE) researchers join forces with chemists and biologists at the National Cancer Institute (NCI)? They use the most advanced high-performance computers to study cancer at the molecular, cellular and population levels.

Exascale: Bringing Engineering and Scientific Acceleration to Industry

At SC23, held in Denver, Colorado, last November, members of ECP’s Industry and Agency Council, composed of U.S. business executives, government agencies, and independent software vendors, reflected on how ECP and the move to exascale are impacting current and planned use of HPC….

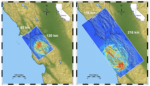

EQSIM and RAJA: Enabling Exascale Predictions of Earthquake Effects on Critical Infrastructure

Nearly 120 years ago, the great San Francisco earthquake of 1906 provided a stark and sobering view of the havoc that can be caused by the sudden and violent movement of Earth’s tectonic plates. According to USGS, the rupture along the San Andreas fault extended 296 miles (477 kilometers) and shook so violently that the […]

@HPCpodcast: Paul Messina and the Journey to Exascale

From the early days of supercomputing through the success of exascale supercomputing, few HPC luminaries have played as important and integral a leadership role in HPC as Dr. Paul Messina. So as we observe Exascale Day today, we are delighted to discuss the exascale journey with someone instrumental to the 10 orders of magnitude of improvement in ….

Exascale Day 2023: The Exascale Computing Era Is Here.

With the delivery of the U.S. Department of Energy’s (DOE’s) first exascale system, Frontier, in 2022, and the upcoming deployment of the Aurora and El Capitan systems by next year, researchers will have the most sophisticated computational tools at their disposal to conduct groundbreaking research. Exascale machines can perform more than a billion billion calculations per […]

Building a Capable Computing Ecosystem for Exascale

With ECP, working together was a prerequisite for participation. “From the beginning, the teams had this so-called ‘shared fate,’” says Siegel. When incorporating new capabilities, applications teams had to consider relevant software tools developed by others that could help meet their performance targets, and if they didn’t choose to use them….

Members of ECP’s Industry Council in Panel at SC23 on Exascale Computing’s Impact on Industry

July 31, 2023 — At SC23, there will be a panel discussion titled “The Impact of Exascale and the Exascale Computing Project on Industry” featuring members of the Exascale Computing Project’s Industry and Agency Council (IAC), who will discuss how the ECP and the move to exascale computing are impacting industry’s current and planned use of […]

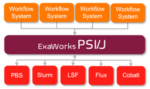

ExaWorks: Tested Component for HPC Workflows

ExaWorks is an Exascale Computing Project (ECP)–funded project that provides access to hardened and tested workflow components through a software development kit (SDK). Developers use this SDK and associated APIs to build and deploy production-grade, exascale-capable workflows on US Department of Energy (DOE) and other computers. The prestigious Gordon Bell Prize competition highlighted the success of the ExaWorks SDK when the winner and two of three finalists in the 2020 Association for Computing Machinery (ACM) Gordon Bell Special Prize for High Performance Computing–Based COVID-19 Research competition leveraged ExaWorks technologies.

Intel Announces Installation of Aurora Blades Is Complete, Expects System to be First to Achieve 2 ExaFLOPS

Intel today announced the Aurora exascale-class supercomputer at Argonne National Laboratory is now fully equipped with 10,624 compute blades. Putting a stake in the ground, Intel said in its announcement that “later this year, Aurora is expected to be the world’s first supercomputer to achieve a theoretical peak performance of more than 2 exaflops … […]