Nearly 120 years ago, the great San Francisco earthquake of 1906 provided a stark and sobering view of the havoc that can be caused by the sudden and violent movement of Earth’s tectonic plates. According to USGS, the rupture along the San Andreas fault extended 296 miles (477 kilometers) and shook so violently that the motion could be felt as far north as Oregon and east into Nevada. The estimated 7.9-magnitude quake and subsequent fires decimated the major metropolis and surrounding areas: buildings turned to ruins, hundreds of thousands of people left homeless, and a death toll exceeding 3,000. Today, as evidenced by the catastrophic 7.8-magnitude earthquake that struck Turkey in February 2023, large earthquakes still pose a significant danger to life and economic security, even as researchers work to better understand earthquake phenomena and quantify the associated risks.

High-performance computing (HPC) has enabled scientists to use geophysical and seismographic data from past events to simulate the underlying physics of earthquake processes. This work has led to the development of predictive capabilities for evaluating the potential risks to critical infrastructure posed by future tremors. However, data limitations and the high computational burden of these models have made detailed, site-specific resolution impossible until now. With the Department of Energy’s (DOE’s) Frontier supercomputer, the world’s fastest and first exascale system, researchers have access to a transformative degree of computational power and problem-solving capability that extends the range of earthquake prediction to previously unattainable realms.

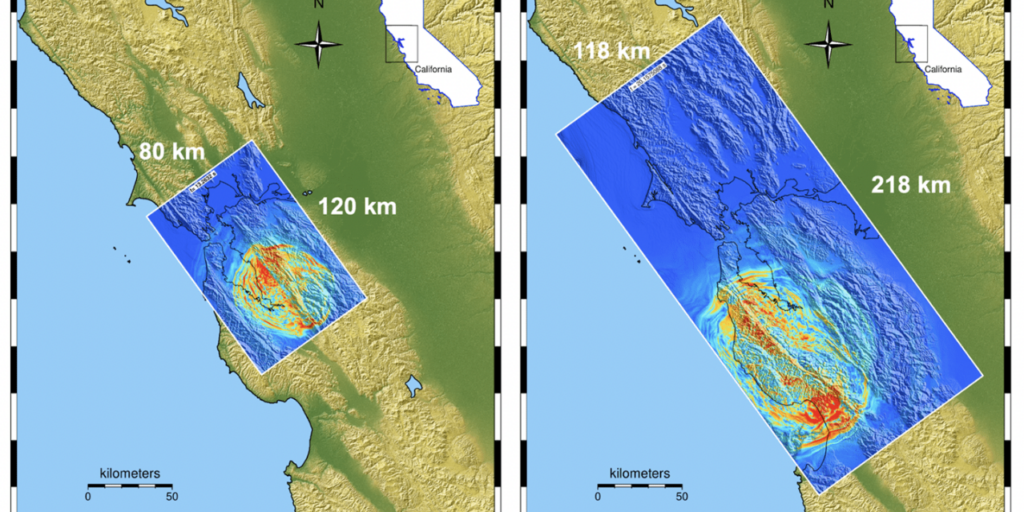

Exascale systems are the first HPC machines to provide the size and speed needed to run regional-level, end-to-end simulations of an earthquake event (from the fault rupture, to the waves propagating through the heterogeneous Earth, to the waves interacting with a structure) in less time and at the higher frequencies necessary for infrastructure assessments. However, the full potential of exascale could only be realized through a concerted effort to enhance the codes used to run the models and to implement improved software technology products that boost application performance and efficiency. Through DOE’s Exascale Computing Project (ECP), a multidisciplinary team of seismologists, applied mathematicians, applications and software developers, and computational scientists has worked in tandem to produce EQSIM, a unique framework that simulates site-specific ground motions to investigate the complex distribution of infrastructure risk resulting from various earthquake scenarios. “Understanding site-specific motions is important because the effects on a building will be drastically different depending on where the rupture occurs and the geology of the area,” says principal investigator David McCallen, a senior scientist at Lawrence Berkeley National Laboratory who leads the Critical Infrastructure Initiative for the Energy Geosciences Division and is director of the Center for Civil Engineering Earthquake Research at the University of Nevada at Reno. “ECP’s multidisciplinary approach to advancing HPC and its collaborative research paradigm were essential to making this transformative tool a reality. By having all the experts focused on the project together at the same time, we achieved the seemingly impossible.”