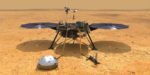

Exascale Software and NASA’s ‘Nerve-Racking 7 Minutes’ with Mars Landers

NASA supercomputers have helped several Mars landers survive the nerve-racking seven minutes of terror. During this hair-raising interval, a lander enters the Martian atmosphere and needs to automatically execute many crucial actions in precise order….

@HPCpodcast: Matt Sieger of OLCF-6 on the Post-Exascale ‘Discovery’ Vision

What does a supercomputer center do when it’s operating two of the 10 most powerful systems in the world, one of them the first system to cross the exascale milestone? It starts planning its successor. The center is Oak Ridge National Lab, a U.S. Department….

Exascale’s New Software Frontier: ExaSGD

“Exascale’s New Frontier,” a project from the Oak Ridge Leadership Computing Facility, explores the new applications and software technology for driving scientific discoveries in the exascale era. The Scientific Challenge: As more renewable sources of energy are added to the national power grid, it becomes more complex to manage. That’s because renewables such as wind […]

Exascale: E4S Software Deployments Boost Industry Acceptance of Accelerators

Last November at SC23, industry leaders reflected on successful deployments of the Exascale Computing Project’s Extreme-Scale Scientific Software Stack (E4S). They highlighted how E4S at Pratt & Whitney, ExxonMobil, TAE Technologies, and GE Aerospace….

Exascale’s New Software Frontier: Combustion-PELE

“Exascale’s New Frontier,” a project from the Oak Ridge Leadership Computing Facility, explores the new applications and software technology for driving scientific discoveries in the exascale era. The Scientific Challenge: Diesel and gas-turbine engines drive the world’s trains, planes, and ships, but the fossil fuels that power these engines produce much of the carbon emissions […]

Exascale’s New Software Frontier: E3SM-MMF

“Exascale’s New Frontier,” a project from the Oak Ridge Leadership Computing Facility, explores the new applications and software technology for driving scientific discoveries in the exascale era. The Scientific Challenge: Gauging the likely impact of a warming climate on global and regional water cycles poses one of the top challenges in climate change prediction. Scientists […]

Exascale’s New Software Frontier: LatticeQCD for Particle Physics

“Exascale’s New Frontier,” a project from the Oak Ridge Leadership Computing Facility, explores the new applications and software technology for driving scientific discoveries in the exascale era. The Science Challenge: One of the most challenging goals for researchers in the fields of nuclear and particle physics is to better understand the interactions between quarks and gluons […]

HPC News Bytes 20240219: AI Safety and Governance, Running CUDA Apps on ROCm, DOE’s SLATE, New Advanced Chips

Happy Presidents’ Day morning to everyone! Today’s HPC News Bytes races (6:22) around the HPC-AI landscape with comments on: developments in AI security and governance, running CUDA (NVIDIA) apps on ROCm (AMD), DOE’s Exascale Software Linear….

Exascale’s New Software Frontier: SLATE

“Exascale’s New Frontier,” a project from the Oak Ridge Leadership Computing Facility, explores the new applications and software technology for driving scientific discoveries in the exascale era. Why Exascale Needs SLATE: For nearly 30 years, scores of science and engineering projects conducted on high-performance computing systems have used either the Linear Algebra PACKage (LAPACK) library […]