This 7-year project, sponsored by the DOE Office of Science and the National Nuclear Security Administration, invested $1.8 billion in application development, software technology, and hardware and integration research conducted by more than 1,000 people located throughout the nation, involving DOE national laboratories, universities, and industrial participants. The ECP was focused on four goals: maintaining U.S. leadership in high-performance computing (HPC), promoting the health of the U.S. HPC industry, delivering a sustainable HPC software ecosystem, and creating mission-critical applications.

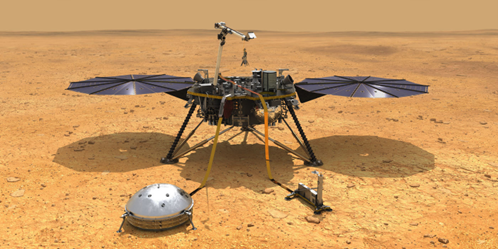

NASA needs HPC at all scales to support and advance many of its applications. For example, one safe-landing challenge is to deploy a parachute at hypersonic speeds. The thin, flexible parachute wobbles and changes shape as it slows the landing vehicle. Most parachute-deployment tests occur in wind tunnels, but the largest payloads require parachutes that would nearly fill the wind tunnel, leaving little room for airflow.

“A wind tunnel obviously cannot be used for that kind of test,” says Tsengdar Lee, high-end computing portfolio manager at NASA headquarters in Washington. “So we do a lot of computation to design the parachutes.”

The complex issues of landing on Mars are among many that NASA supercomputers have addressed for decades. “We are scientists and engineers,” Lee says. “We love to solve problems.” NASA’s ambitious goals present many challenges that can be surmounted with help from exascale systems.

Exascale Opportunities

Lee and his colleagues hope to get some of that help from the DOE’s Frontier supercomputer. It debuted at Oak Ridge National Laboratory in May 2022 as the world’s first exascale computer, performing 1.19 quintillion (a million trillion) calculations per second. This placed Frontier, which uses HPE Cray EX235a architecture equipped with AMD graphics processing units (GPUs), at number one on the TOP500 list of the world’s fastest computers. In April 2023, Frontier became available to all ECP teams.

Supercomputer simulations are essential to an ever-growing list of NASA initiatives. These include jet aircraft noise reduction, green aviation advancement, simulating the aerodynamic stability of the capsule that will deliver a quadcopter to the surface of Saturn’s largest moon, and supporting satellite investigations of planets orbiting deep-space star systems.

“We will continue working to enlarge our resources,” says Piyush Mehrotra, the recently retired chief of the NASA Advanced Supercomputing Division at the NASA Ames Research Center in California.

Mehrotra was a member of the ECP Industry and Agency Council, a group of senior-level industry, government, and technical advisors. The group serves as a conduit for exchanging information and experiences between the ECP leadership, key industrial organizations, and government agencies working together to advance the technology awareness and computational capabilities of the broad HPC community on the road to exascale. Mehrotra focused his council role “on discovery and science for NASA missions,” he says. “That’s where the satisfaction is. That’s where the return on investment is.”

A stint at Langley Research Center in Virginia as a graduate student in the early 1980s provided Mehrotra’s first experience with NASA. “I saw all the things that were going on and how computing could solve some of the issues,” he says.

Starting with his Ph.D., Mehrotra’s research career focused on harnessing the power of relatively new large-scale distributed machines. He joined NASA Ames in 2000. “I’ve been here ever since, trying to make sure that NASA scientists and engineers get the advanced computing environment they need to reach the different mission milestones,” he explains.

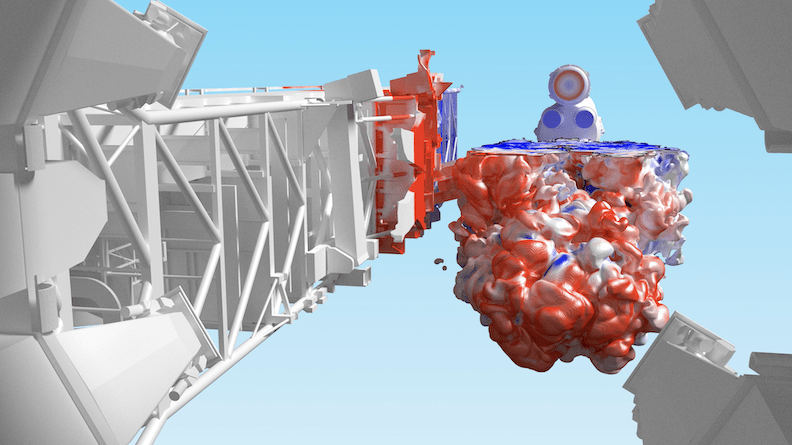

Artemis I launch simulation visualization using the LAVA Cartesian CFD solver. The image shows a slice through the exhaust plumes colored by pressure (red is high, blue is low). Black line indicates the 90% plume exhaust/air interface. The view is from the exhaust hole on the mobile launcher, looking straight up at the vehicle. CREDIT: Timothy Sandstrom, Michael Barad, NASA/Ames

NASA Ames houses the agency’s premier supercomputers. “The Ames Center is chartered to provide supercomputing large-scale simulation for all of NASA,” Mehrotra says. These simulations encompass projects in all five NASA directorates: aeronautics, exploration systems, science, space operations, and space technology.

The NASA Advanced Supercomputing Division (NAS) was founded at Ames in the mid-1980s. The center is home to Pleiades, one of NASA’s premier supercomputers. Some readers might remember Pleiades from its cameo appearance in the movie “The Martian,” where it was used to figure out the best flight trajectory for rescuing the stranded Matt Damon character.

The first machine at NAS was a Cray X-MP that operated with one central processing unit (CPU). Pleiades has enjoyed multiple upgrades over the years and operates with 228,572 CPUs. “The definition of large has been changing over the years,” Mehrotra says. “The first machine was 1/10th of what you have on your iPhone today.”

Speeding Up Climatic Codes

NASA has an operational supercomputing capacity of 45 petaflops (thousands of trillions of operations per second), mainly at Ames. DOE’s Frontier machine is rated at more than 1,000 petaflops. Hardly any of NASA’s current codes can take advantage of exascale computations. Consequently, NASA has begun adapting its codes for DOE exascale architectures that harness thousands of GPUs to prepare for further development on stepping-stone systems.
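To put those two figures side by side, the short sketch below works through the unit arithmetic. It uses only the numbers quoted above and treats Frontier's "more than 1,000 petaflops" as a lower bound; the variable and function names are illustrative, not drawn from any NASA or DOE code.

```python
# Back-of-the-envelope comparison using the figures quoted in the article.
# Assumption: Frontier's "more than 1,000 petaflops" is taken as exactly
# 1,000, so the ratio below is a lower bound.

PETA = 10**15  # one petaflop = a thousand trillion operations per second
EXA = 10**18   # one exaflop  = a million trillion operations per second

nasa_capacity_pflops = 45   # NASA's operational capacity, mainly at Ames
frontier_pflops = 1_000     # Frontier's rating (lower bound)


def pflops_to_flops(pflops):
    """Convert petaflops to raw operations per second."""
    return pflops * PETA


ratio = frontier_pflops / nasa_capacity_pflops
frontier_exaflops = pflops_to_flops(frontier_pflops) / EXA

print(f"Frontier offers at least {ratio:.1f} times NASA's total capacity")
print(f"{frontier_pflops:,} petaflops = {frontier_exaflops:.0f} exaflop")
```

By this rough measure, a single exascale machine offers more than 20 times the combined capacity NASA operates today.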

E4S is Key to Simplifying Software Transitions

NASA can use ECP’s open-source Extreme-scale Scientific Software Stack (E4S) to simplify such software transitions. Working with source code developed with various HPC products, including multiple math libraries and programming models, E4S creates code that can run on virtually any HPC platform, including GPU-enabled architectures.

“We have a lot of codes that need to move onto this new architecture,” Lee says. Many of NASA’s legacy applications are based on highly versatile CPUs. However, the more specialized GPUs can process more data faster and in parallel. In the past, when NASA ran simulations on a DOE system, only the CPUs were used.

“We are working aggressively in the GPU direction,” Lee says. “Thanks to ECP, we now have several projects moving.” These projects involve modernizing and porting NASA model codes — such as the kilometer-scale, global ocean-atmosphere linked simulations of the Goddard Earth Observing System (GEOS) — to exascale systems.

Exascale capabilities are also crucial for NASA’s computational fluid dynamics (CFD) simulations that model weather and climate dynamics involving the movements of water and air. Even now, Mehrotra says, “more than half our systems are used for CFD.”

CFD applies to GEOS and the Estimating the Circulation and Climate of the Ocean model, which generates some of the largest and most complex simulations that run on NASA supercomputers. Exascale computing will speed up these projects, as well as NASA’s greenhouse-gas inventory, monitoring, observation, and modeling data analyses.

Processing the many satellite observations of trace gases and tracking all the chemical reactions that play out in the atmosphere require a great deal of computing power. “We can do that in a couple of months with our existing capability,” Lee says. “But doing it in real-time will require exascale computing.”

Long-term climate prediction requires a high-resolution linkage between atmospheric and ocean models seasoned with chemistry. Air pollutants interact with atmospheric dust to form clouds and rain that interact with solar (downward) and terrestrial (upward) radiation. “It’s a very complicated aerosol-cloud-radiation feedback process,” Lee says. “That’s definitely an exascale problem.”

Back to the Moon and Beyond

The Launch Ascent and Vehicle Aerodynamics team uses supercomputers to numerically predict the effect of ignition overpressure waves that massive rockets will produce at the Kennedy Space Center. Space shuttle launches generated massive, billowing clouds of steam. These were byproducts of injecting water into the flame trench and at exhaust openings to dampen the onslaught of potentially damaging acoustic wave energy.

But the Space Launch System (SLS), designed to return astronauts to the moon and launched from the Kennedy Space Center on Nov. 16, 2022, is much larger than the rockets of the space shuttle era. NASA’s most powerful rocket ever, the SLS stands 17 stories high and subjects the launch pad to incredible pressures, temperatures, and vibrational acoustic waves as it burns about six tons of propellant each second. Mehrotra says, “If you want to simulate the whole reality, computing systems at the exascale level are needed.”

As plans progress for the Artemis mission to return astronauts to the moon, simulations on NASA’s most powerful supercomputers — Aitken and Electra — also help ensure crew safety in the event of a launch abort. The Orion spacecraft has a launch abort motor that can fire within milliseconds to quickly pull its four-person crew to safety. Orion’s abort motor produces about 400,000 pounds of thrust. Aitken and Electra simulate how vibrations from the motor plumes would affect the crew capsule during many scenarios.

Mehrotra and Lee tout the need to continue advancing exascale computing technology. “I don’t want people to think we have reached exascale, and we can relax now; our scientists and engineers continue to innovate, and new problems will arise,” Lee emphasizes. “As a nation, we need to stay at the leading edge of the technology.”

NASA has continually expanded its supercomputing capabilities since deploying Pleiades in 2008. The agency now maintains six supercomputers in its portfolio. These include Aitken, which eclipsed the often-upgraded Pleiades as NASA’s most powerful supercomputer in 2022. In connection with DOE, NASA will continue to enhance its ability to grapple with complex problems that require advanced computing. “The exascale era is just beginning,” Lee says.
