Cerebras Systems’ dinner plate-size chip technology has been a curiosity since the company introduced the Wafer Scale Engine in 2019. But it’s become more than just a curiosity to the AI industry — and to the venture community.

Today, Cerebras announced an oversubscribed $1.1 billion Series G funding round at an $8.1 billion valuation. The round comes as the company moves toward a promised initial public offering.

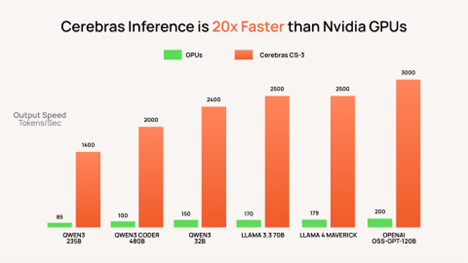

The company regularly announces eye-popping performance numbers (it says the fastest OpenAI, Meta Llama and Code-Gen models run on Cerebras), and of late Cerebras has increasingly taken aim at GPU market leader NVIDIA. Today’s announcement included a bar chart (below) reporting that Cerebras delivers 20x inference performance superiority over (unspecified) NVIDIA GPUs.

Last week, Cerebras announced an AI data center in Oklahoma City with more than 44 exaflops of compute power that the company said is the fastest in the world.

The wafer-scale architecture enables AI models and memory to be placed entirely on a single chip, avoiding memory bandwidth and latency issues while reducing data movement. Cutting cross-device communication and orchestration also improves efficiency: Cerebras says its systems use about one-third the power of GPUs.

“Since our founding, we have tested every AI inference provider across hundreds of models. Cerebras is consistently the fastest,” said Micah Hill-Smith, CEO of benchmarking firm Artificial Analysis.

Cerebras said it is enjoying “massive demand.” “New real-time use cases – including code generation, reasoning, and agentic work – have increased the benefits from speed and increased the cost of being slow, driving customers to Cerebras. Today, Cerebras is serving trillions of tokens per month, in its own cloud, on its customers’ premises, and across leading partner platforms.”

Source: Cerebras

In 2025, companies including AWS, Meta, IBM, Mistral, Cognition, AlphaSense and Notion chose Cerebras, joining GlaxoSmithKline, Mayo Clinic, the US Department of Energy and the US Department of Defense, the company said. Individual developers have also chosen Cerebras for their AI work. On Hugging Face, the leading AI hub for developers, Cerebras is the #1 inference provider with over 5 million monthly requests, the company reported.

The latest venture round was led by Fidelity Management & Research Company and Atreides Management, and included participation from Tiger Global, Valor Equity Partners, and 1789 Capital, as well as existing investors Altimeter, Alpha Wave, and Benchmark. Citigroup and Barclays Capital acted as joint placement agents for the transaction.

Cerebras said it will use the venture funds to expand its technology portfolio with continued inventions in AI processor design, packaging, system design and AI supercomputers. “In addition, it will expand its U.S. manufacturing capacity and its U.S. data center capacity to keep pace with the explosive demand for Cerebras products and services.”