[SPONSORED GUEST ARTICLE] We hear of $50 billion artificial intelligence (AI) factories, and we try to wrap our heads around the gargantuan scale and complexity of this new class of data center. We think of the tens of thousands of components, the investment and the risk such an undertaking requires, along with the necessity that every piece of equipment, down to the smallest, works as required for the entire facility to operate correctly.

As AI data centers increasingly adopt liquid cooling, which is essential for controlling AI cooling costs and energy usage, minimizing risk includes using high-quality connectors. Liquid cooling connectors play a crucial role in today’s HPC-class AI data centers: if they malfunction and leak fluid onto electronic components, havoc ensues. Once the data center is operational, data center managers and IT directors don’t want to worry, or even think, about them. They want ultra-reliability.

CPC (Colder Products Company) has built a portfolio of 10,000-plus connector products in its nearly 50-year history. A business unit of Pump Solutions Group within Dover Corporation, CPC has channeled its resources into advancing connector technology. Over the decades, the company has pushed connector technology boundaries and extended the limits of innovation, with the spotlight on precision and quality.

That’s why CPC has partnered with leading companies in the technology industry, and it’s why CPC experts were tapped during liquid cooling system design for one of the first exascale-class supercomputers – a 10,600-node, $500 million system that can run a quintillion calculations per second.

We’ll discuss the CPC exascale supercomputer story later in this article. For now, let’s define what quick disconnect (QD) couplings are all about.

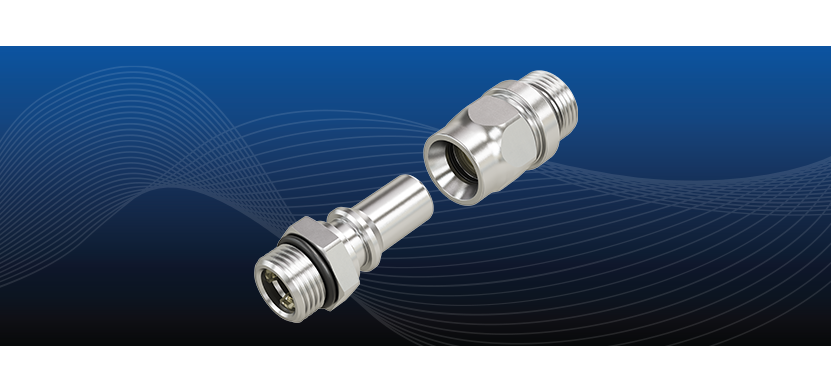

A CPC QD consists of two parts: a coupling socket and a coupling plug that, when connected, create a fluid flow path. Each coupling has a valve architecture with multi-lobed seals to provide redundant protection against leakage over extended periods of time. The valve design allows these liquid cooling quick disconnects to close quickly and reliably upon disconnection. The non-spill (or dry break) patented design of CPC Everis® connectors allows disconnection without drips, including when under pressure.

CPC’s new Everis UQDB06 and UQD06 Connector Set, announced in July, brings a 3/8-inch flow path to its ever-growing UQD family, which also includes 1/8-inch and 1/4-inch options. Everis quick disconnects are purpose-built for thermal management applications, with an ultra-reliable, dripless liquid cooling design.

The data center liquid cooling market is growing quickly. According to Hyperion Research, about two-thirds of HPC/AI sites have implemented liquid cooling, with adoption expected to reach nearly 80 percent in the next 12 to 18 months. To support customers’ rapid scale-up, CPC offers QDs at hyperscale volumes with short lead times.

As electronic systems become more complex and operate at higher power, dissipating excess heat efficiently becomes a major issue. With customers also demanding lower weight and smaller sizes, liquid cooling is the answer. Thermal management with reliable liquid cooling quick disconnect fittings is required to help prevent failures and improve system efficiency.

Cutting-edge AI and HPC clusters don’t just require the highest-performing GPUs and CPUs; those processors are now packed into extremely dense configurations. That leads to substantially higher power density at both the node and rack level, with rack densities climbing well above 100 kW. Further, given their expense and the critical research they often enable, these high-density clusters run 24/7 at 100 percent capacity for sustained periods.

The move to liquid cooling in HPC-class AI data centers is on, and it’s a key factor in moving the power usage effectiveness (PUE) of computing centers closer to the 1.0 goal. Liquid cooling benefits include:

- 85% reduction in carbon footprint

- Lower latency, by maximizing cluster interconnect density

- 3,500 to 4,000 times more efficient than air at transferring heat

- Significant cost savings from more efficient cooling

- Reduced noise from slower fan speeds
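Power usage effectiveness is the ratio of total facility power to the power consumed by IT equipment, so a PUE of 1.0 would mean zero overhead for cooling, power conversion and everything else. The minimal Python sketch below shows that arithmetic. The helper name `pue` and all of the load and overhead figures are hypothetical illustrations, not measured data from CPC, Hyperion Research or any real facility.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


# Hypothetical facility with a 1,000 kW IT load.
# Overhead values below are illustrative assumptions, not measurements.
it_load_kw = 1000.0
air_cooled_overhead_kw = 500.0
liquid_cooled_overhead_kw = 150.0

print(f"Air-cooled PUE:    {pue(it_load_kw + air_cooled_overhead_kw, it_load_kw):.2f}")
print(f"Liquid-cooled PUE: {pue(it_load_kw + liquid_cooled_overhead_kw, it_load_kw):.2f}")
```

Because the IT load is identical in both cases, the entire improvement comes from lower overhead, which is where reduced cooling energy appears in the metric.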

CPC’s new Everis UQDB06 and UQD06 Connector Set, with a 3/8-inch flow path, is designed to handle AI’s higher-flow liquid cooling requirements in hyperscale AI, data center and high-performance computing (HPC) applications.

While higher flow rates alone can improve cooling, the industry is ultimately seeking optimized cooling. “Increased flow often requires higher pumping capabilities, which can affect overall system design and energy efficiency,” said Patrick Gerst, General Manager of CPC’s thermal business unit. “Increasing the connector’s flow path size is one way to add flow capacity.”
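Gerst’s point about pumping can be made concrete. Hydraulic pump power is flow rate multiplied by pressure drop, and in a fixed loop the pressure drop grows roughly with the square of flow (the turbulent-flow assumption behind the usual Cv relation), so pump power grows roughly with the cube of flow. The Python sketch below assumes a hypothetical loop with a 10 psi drop at 20 gal/min and a 50 percent pump efficiency; `pump_power_w` is an illustrative helper, not a CPC tool.

```python
GPM_TO_M3_PER_S = 3.785411784e-3 / 60.0  # 1 US gal/min expressed in m^3/s
PSI_TO_PA = 6894.757293168               # 1 psi expressed in pascals


def pump_power_w(flow_gpm: float, dp_psi: float, pump_efficiency: float = 0.5) -> float:
    """Shaft power (W) to drive flow_gpm through a pressure drop of dp_psi.

    Hydraulic power is Q * dP in SI units; dividing by the (assumed) pump
    efficiency gives the shaft power the pump must supply.
    """
    if not 0.0 < pump_efficiency <= 1.0:
        raise ValueError("pump_efficiency must be in (0, 1]")
    return flow_gpm * GPM_TO_M3_PER_S * dp_psi * PSI_TO_PA / pump_efficiency


# Hypothetical baseline loop; in a fixed loop dP scales roughly with flow squared.
base_flow_gpm, base_dp_psi = 20.0, 10.0
for scale in (1.0, 1.5, 2.0):
    q = base_flow_gpm * scale
    dp = base_dp_psi * scale ** 2
    print(f"{q:.0f} gpm -> {dp:.1f} psi, about {pump_power_w(q, dp):.0f} W")
```

Under these assumptions, doubling the flow through the same loop multiplies pump power by about eight, which is why reducing flow resistance, for example with a larger connector flow path, is attractive compared with simply pumping harder.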

Supercomputing Architecture and CPC

In the world of supercomputing, the TOP500 list of the world’s most powerful supercomputers, published twice a year, is a closely watched measure of leadership-class performance in HPC. The top three entries on the list are exascale-class systems capable of more than a quintillion (a billion billion) calculations per second.

As engineers drew up the architecture for one of the first exascale systems, liquid cooling was a given, but the quick disconnect provider was not. The engineering team conducted a survey of what was available, including a review of incumbent suppliers’ products.

The primary drivers were flow rates and form-factor fit. Because the engineers couldn’t find exactly what they wanted, it was important for them to align with a partner willing to work closely with them to develop a product that met their needs.

“We were challenged to create a solution that didn’t exist,” said Barry Nielsen, Senior Applications Development Manager in CPC’s thermal business. “We applied our decades of fluid management connector expertise to the liquid cooling aspects of this exciting, new extensible computing platform. The ability to seamlessly plug new or different blades into the system means connection to the liquid cooling manifolds needs to be equally seamless.”

The CPC team made assessments of liquid cooling options, looking at hose barb configurations, joining methods, connection orientations on differing tubing sizes, upstream/downstream flow rates and more. The compact size of the liquid-cooled cabinet required quick disconnects to fit into tight spaces without adversely affecting either flow rates or ease of use.

CPC Everis® UQD06-UQDB06-QD

In response, CPC developed its patented Everis Series QDs. Multiple sizes of Everis Series connectors are now part of the liquid cooling architecture for these exascale-class systems.

“Connectors are among the primary flow path resistors in an HPC liquid cooling system, depending on their configuration and valve type,” CPC’s Nielsen said. “You would be surprised at how things like termination style or hand mate vs. blind mate configurations can impact the valve flow coefficient (Cv). We model flow through our QDs theoretically in addition to testing the connectors empirically. Customers can be assured that flow efficiency is top of mind for us as much as it is for them.”
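The valve flow coefficient Nielsen mentions links flow to pressure drop. For liquids in US units, Q = Cv·√(ΔP/SG), with Q in US gal/min, ΔP in psi and SG the specific gravity relative to water, so ΔP = SG·(Q/Cv)². The Python sketch below applies that relation. The flow rate, the Cv values and the coolant specific gravity of 1.03 are hypothetical assumptions for illustration, not published CPC ratings, and the helper names are illustrative.

```python
import math

PSI_TO_KPA = 6.894757293168  # 1 psi expressed in kilopascals


def pressure_drop_psi(flow_gpm: float, cv: float, specific_gravity: float = 1.0) -> float:
    """Pressure drop from the liquid Cv relation Q = Cv * sqrt(dP / SG).

    Rearranged: dP = SG * (Q / Cv)**2, with Q in US gal/min and dP in psi.
    """
    if cv <= 0:
        raise ValueError("Cv must be positive")
    return specific_gravity * (flow_gpm / cv) ** 2


def series_cv(*cvs: float) -> float:
    """Equivalent Cv of components in series carrying the same flow.

    Pressure drops add, so 1 / Cv_eq**2 = sum(1 / Cv_i**2).
    """
    return 1.0 / math.sqrt(sum(1.0 / cv ** 2 for cv in cvs))


# Hypothetical values for illustration only.
flow_gpm = 2.0      # flow through one blade's cooling loop
coolant_sg = 1.03   # assumed specific gravity of a water/glycol coolant

for cv in (1.0, 2.0):
    dp = pressure_drop_psi(flow_gpm, cv, coolant_sg)
    print(f"Cv = {cv}: {dp:.2f} psi ({dp * PSI_TO_KPA:.1f} kPa)")

# Supply-side and return-side QDs on one loop act as two Cv values in series.
print(f"Equivalent Cv of two Cv = 2.0 QDs in series: {series_cv(2.0, 2.0):.3f}")
```

At a fixed flow, doubling Cv cuts the pressure drop across the connector by a factor of four. Because series components combine as 1/Cv_eq² = Σ 1/Cv_i², a loop’s overall Cv is always lower than that of any single connector in it, which is why connector choice matters so much for total loop resistance.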

Nielsen says close collaboration yielded positive outcomes for the customer, for CPC and for high-performance computing in general. “Now there is an ultra-compact connection technology for thermal management that quickly and securely connects fluid manifolds to high-powered blades, all of which are liquid cooled. The entire HPC industry is the beneficiary of that creative thinking.”