A happy Monday morn to you. This week’s HPC News Bytes offers a quick (4:53) run-through of the major news in our sector over the past week. This morning we look at: Gartner’s prediction of accelerated growth for composable computing; a proposed ABI (application binary interface) for MPI to simplify parallel apps; AMD’s purchase of AI software company Mipsology, a possible sign of more AI M&A activity to come; and an HPC clone on Google Cloud Platform.

Harvard Uses Google Cloud to Clone Supercomputer for Medical Research Runs

A Harvard scientist used Google Cloud Platform compute resources to construct an HPC clone to conduct a heart disease study, according to a Reuters story, “a novel move that other researchers could follow to get around a shortage of powerful computing resources….”

Zorroa Launches Boon AI; No-code Machine Learning for Media-driven Organizations

Zorroa Corp., a leading provider of accessible machine learning (ML) integration solutions backed by Gradient Ventures, Google’s AI-focused venture fund, today officially launched a first-of-its-kind ML SaaS platform, Boon AI. Boon unlocks the ML API ecosystem through a single point-and-click visual interface, reducing the cost of ML adoption and making ML accessible as a competitive differentiator in media-driven organizations.

The Continuous Intelligence Report: The State of Modern Applications, DevSecOps and the Impact of COVID-19

Sumo Logic (Nasdaq: SUMO), the pioneer in continuous intelligence, released exclusive findings from its highly anticipated fifth annual report. “The Continuous Intelligence Report: The State of Modern Applications, DevSecOps and the Impact of COVID-19” provides an inside look into the state of the modern application technology stack, including changing trends in cloud and application adoption and usage by customers, and the impact of COVID-19 as an accelerant for digital transformation efforts.

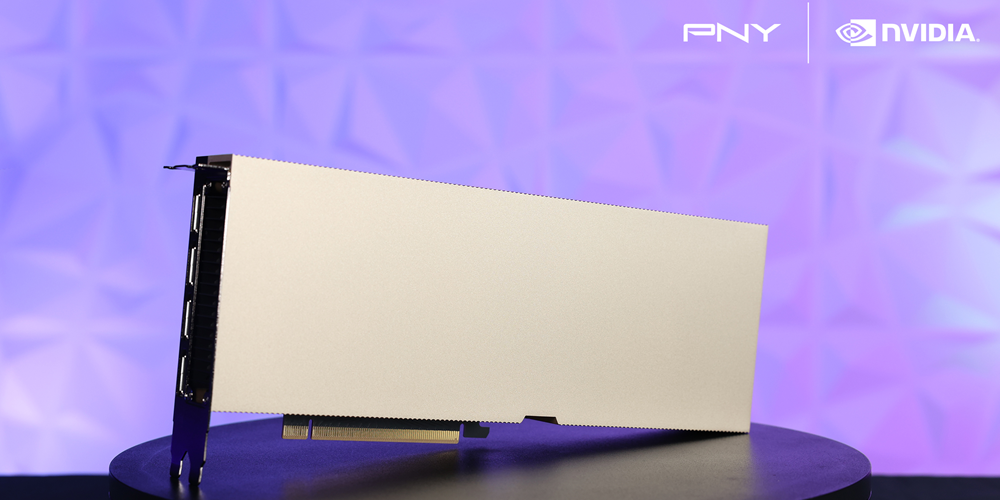

Google Cloud and NVIDIA Set New Training Records on MLPerf v0.6 Benchmark

Today the MLPerf effort released results for MLPerf Training v0.6, the second round of results from their machine learning training performance benchmark suite. MLPerf is a consortium of over 40 companies and researchers from leading universities, and the MLPerf benchmark suites are rapidly becoming the industry standard for measuring machine learning performance. “We are creating a common yardstick for training and inference performance,” said Peter Mattson, MLPerf General Chair.

Video: High Performance Computing on the Google Cloud Platform

“High performance computing is all about scale and speed. And when you’re backed by Google Cloud’s powerful and flexible infrastructure, you can solve problems faster, reduce queue times for large batch workloads, and relieve compute resource limitations. In this session, we’ll discuss why GCP is a great platform to run high-performance computing workloads. We’ll present best practices, architectural patterns, and how PSO can help your journey. We’ll conclude by demo’ing the deployment of an autoscaling batch system in GCP.”

Moving HPC Workloads to the Cloud with Univa’s Navops Launch 1.0

Today Univa announced the release of Navops Launch 1.0, the latest version of the most powerful hybrid HPC cloud management product and a significant advancement in the migration of HPC workloads to the cloud. Navops Launch meshes public cloud services and on-premises clusters to cost-effectively meet increasing workload demand. “A unique automation engine allows Navops Launch to integrate third-party data sources, including storage fabric, management systems, cluster and cloud environment attributes.”

Google Cloud TPU Machine Learning Accelerators now in Beta

John Barrus writes that Cloud TPUs are available in beta on Google Cloud Platform to help machine learning experts train and run their ML models more quickly. “Cloud TPUs are a family of Google-designed hardware accelerators that are optimized to speed up and scale up specific ML workloads programmed with TensorFlow. Built with four custom ASICs, each Cloud TPU packs up to 180 teraflops of floating-point performance and 64 GB of high-bandwidth memory onto a single board.”