Today the MLPerf effort released results for MLPerf Training v0.6, the second round of results from their machine learning training performance benchmark suite.

Today the MLPerf effort released results for MLPerf Training v0.6, the second round of results from their machine learning training performance benchmark suite.

We are creating a common yardstick for training and inference performance. We invite everyone to become involved by going to mlperf.org or emailing info@mlperf.org” said Peter Mattson, MLPerf General Chair.

MLPerf is a consortium of over 40 companies and researchers from leading universities, and the MLPerf benchmark suites are rapidly becoming the industry standard for measuring machine learning performance. The MLPerf Training benchmark suite measures the time it takes to train one of six machine learning models to a standard quality target in tasks including image classification, object detection, translation, and playing Go.

NVIDIA Sets Eight Records for Training on MLPerf

Continuing their momentum in Machine Learning, NVIDIA today claimed eight records in training performance, including three in overall performance at scale and five on a per-accelerator basis.

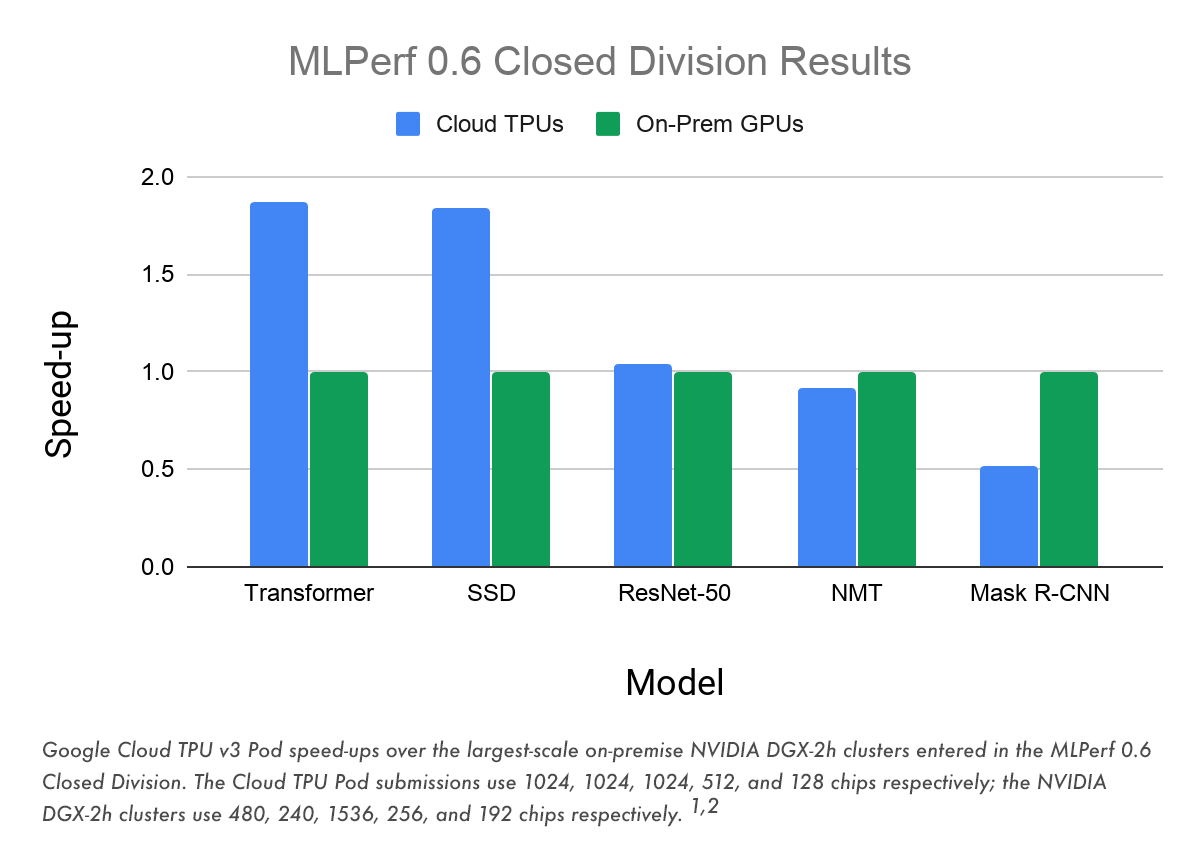

The Google Cloud Platform (GCP) also did well, with three new performance records in the latest round of the MLPerf benchmark competition

All three record-setting results ran on Cloud TPU v3 Pods, the latest generation of supercomputers that Google has built specifically for machine learning. These results showcased the speed of Cloud TPU Pods— with each of the winning runs using less than two minutes of compute time.

Improvements in MLPerf Training v0.6

The first version of MLPerf Training was v0.5; this release, v0.6, improves on the first round in several ways. According to the MLPerf Training Special Topics Chairperson Paulius Micikevicius, “these changes demonstrate MLPerf’s commitment to its benchmarks’ representing the current industry and research state.”

The improvements include:

- Raises quality targets for image classification (ResNet) to 75.9%, light-weight object detection (SSD) to 23% MAP, and recurrent translation (GNMT) to 24 Sacre BLEU. These changes better align the quality targets with state of the art for these models and datasets.

- Allows use of the LARS optimizer for ResNet, enabling additional scaling.

- Experimentally allows a slightly larger set of hyperparameters to be tuned, enabling faster performance and some additional scaling.

- Changes timing to start the first time the application accesses the training dataset, thereby excluding startup overhead. This change was made because the large scale systems measured are typically used with much larger datasets than those in MLPerf, and hence normally amortize the startup overhead over much greater training time.

- Improves the MiniGo benchmark in two ways. First, it now uses a standard C++ engine for the non-ML compute, which is substantially faster than the prior Python engine. Second, it now assesses quality by comparing to a known-good checkpoint, which is more reliable than the previous very small set of game data.

- Suspends the Recommendation benchmark while a larger dataset and model are being created.

Submissions showed substantial technological progress over v0.5. Many benchmarks featured submissions at higher scales than v0.5. Benchmark results on the same system show substantial performance improvements over v0.5, even after the impact of the rules changes are factored out. (The higher quality targets lead to higher times on ResNet, SSD, and GNMT. The change to overhead timing leads to lower times especially on larger systems. The improved engine and different quality target make MiniGo times substantially different.)

The rapid improvement in MLPerf results shows how effective benchmarking can be in accelerating innovation.” said Victor Bittorf, MLPerf Submitters Working Group Chairperson.

MLPerf Training v0.6 showed increased support for the benchmark and greater interest from submitters. MLPerf Training v0.6 received sixty-three entries, up more than 30%. Submissions came from five submitters, up from three in the previous round. Submissions included the first submission to the “Open Division” submission, which allows the model to be further optimized or a different model to be used (though the same model was used in the v0.6 submission) as a means of showcasing more potential performance innovations through software changes. The MLPerf effort now has over 40 supporting companies, and recently released a complementary inference benchmark suite.

Sign up for our insideHPC Newsletter