By Timothy Prickett Morgan

When Nvidia did a preview of its next-generation “Kepler” GPU chips back in March, the company’s top brass said that they were saving some of the goodies in the Kepler design for the big event at Nvidia’s GPU Technology Conference in San Jose, which runs this week. True to the company’s word, the Kepler GPUs do have some goodies that will make them considerably more useful for graphics and HPC compute workloads.

The two big innovations baked into the Kepler GPU are called Hyper-Q and Dynamic Parallelism, and they are integral to the company’s plans for the Kepler GPUs to have somewhere between three and four times the performance per watt compared to the prior generation of Fermi GPUs.

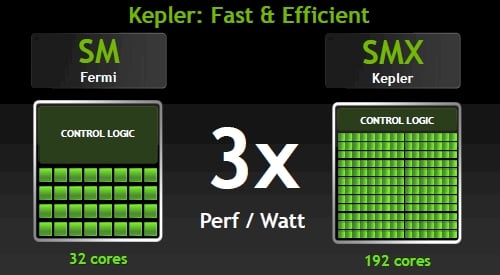

The first architectural change that Nvidia made is a tradeoff between clock speed and core counts that all CPU and GPU makers are wrestling with every day. Power consumption rises much faster than linearly with clock speed, because higher clocks generally need higher voltages and dynamic power scales with the square of the voltage, so reducing the clock speed a little can have a dramatic impact on the overall power consumption of a component.

And so concurrently with the shrink from the 40 nanometer processes used with the Fermi GPUs to the 28 nanometer processes used to etch the Keplers, Nvidia is cranking up the core counts and slowing down the clock speeds, increasing the parallelism and the overall performance of GPU while significantly lowering its power draw and heat dissipation.
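To see why more, slower cores can come out ahead, here is a minimal sketch using the textbook first-order model of CMOS dynamic power, P ≈ C·V²·f. It assumes that supply voltage scales linearly with clock speed, so per-core power goes with the cube of the clock. That assumption, the function names, and the example design point are illustrative and are not Nvidia figures; the model also ignores leakage and the gains from the process shrink.

```python
# First-order CMOS dynamic power model: P ~ C * V^2 * f.
# Assumption (not from the article): voltage scales linearly with clock,
# so per-core power scales with the cube of the relative clock speed.

def relative_throughput(core_ratio: float, clock_ratio: float) -> float:
    """Peak throughput relative to a baseline chip (cores times clock)."""
    return core_ratio * clock_ratio


def relative_power(core_ratio: float, clock_ratio: float) -> float:
    """Dynamic power relative to a baseline chip under the cubic model."""
    return core_ratio * clock_ratio ** 3


if __name__ == "__main__":
    # Hypothetical design point: twice the cores at 75 per cent of the clock.
    cores, clock = 2.0, 0.75
    print(f"throughput x{relative_throughput(cores, clock):.2f}")
    print(f"power      x{relative_power(cores, clock):.2f}")
```

Under these assumptions the hypothetical chip does half as much work again while drawing less power than the baseline, which is the direction of the tradeoff described above.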

There are two different Kepler GPUs in development. The Kepler1 chip, also known as GK104, is aimed at graphics cards and Tesla GPU coprocessors, where single-precision floating point math is what matters most.

Until now, Nvidia has not said much about the Kepler2 GPUs – also known as GK110 internally – except that they will be tuned for double-precision floating point math and will support more GDDR5 memory, will have ECC scrubbing on that memory, will have different packaging aimed at servers, and will cost more money than Tesla cards based on the Kepler1 units. A little more info on the Kepler2 GPUs was divulged today at the GTC 2012 event, thankfully.

Nvidia’s SMX architecture for the Kepler GPU

The Fermi GPU had 512 cores organized into groups of 32 known as streaming multiprocessors, or SMs, with 64KB of configurable L1 cache and shared memory per SM and a 768KB L2 cache shared across the whole chip. This was the first time that Nvidia added cache memory to the cores and made them look a lot more like standard CPUs in terms of their memory hierarchy. A Fermi GPU had 16 of these SMs and either 3GB or 6GB of GDDR5 memory.

The initial Fermis only shipped with 448 cores activated in the top-end models, but as yields improved at Taiwan Semiconductor Manufacturing Corp on its 40 nanometer process, Nvidia was able to ship chips with all 512 cores running.

The Fermis burned between 225 watts and 250 watts in discrete graphics cards and Tesla coprocessor cards; they originally ran at 1.15GHz in the 448-core version and were boosted to 1.3GHz in the 512-core variant. The 512-core Fermi GPU could do 665 gigaflops of double-precision floating point math and 1.33 teraflops at single precision.

With the Keplers, Nvidia is moving on to what it calls an SMX, or streaming multiprocessor extreme, architecture. With the Kepler1 chips, Nvidia is putting 192 cores into a streaming multiprocessor group with slightly modified CUDA cores. Eight of these SMX units are on a single GPU chip for a total of 1,536 cores.

The cores have a base speed of 1006MHz with a turbo boost speed of 1058MHz (no, that is not much of a boost), and even given the fact that the GPU has three times as many cores, dropping the clock speed by a third means it only burns 195 watts. It therefore offers much better performance per watt – about three times, according to Sumit Gupta, senior product manager of the Tesla line at Nvidia, who spoke to El Reg ahead of the GPU Technology Conference.
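The "about three times" figure can be sanity-checked from the numbers quoted in this article. The sketch below assumes each CUDA core retires one fused multiply-add, counted as two single-precision flops, per clock, and it takes the top of the 225 to 250 watt range for the Fermi part. Both choices are assumptions made for illustration, as are the function names.

```python
# Back-of-the-envelope peak single-precision throughput and efficiency.
# Assumption: one fused multiply-add (2 flops) per core per clock.
FLOPS_PER_CORE_PER_CLOCK = 2


def peak_gflops(cores: int, clock_ghz: float) -> float:
    """Theoretical peak single-precision gigaflops."""
    return cores * clock_ghz * FLOPS_PER_CORE_PER_CLOCK


def gflops_per_watt(cores: int, clock_ghz: float, watts: float) -> float:
    """Peak gigaflops divided by board power."""
    return peak_gflops(cores, clock_ghz) / watts


# Figures quoted in the article; 250 W is the upper end of the Fermi range.
fermi = gflops_per_watt(cores=512, clock_ghz=1.3, watts=250)
kepler1 = gflops_per_watt(cores=1536, clock_ghz=1.006, watts=195)

if __name__ == "__main__":
    print(f"Fermi peak:   {peak_gflops(512, 1.3):.1f} gigaflops")
    print(f"Kepler1 peak: {peak_gflops(1536, 1.006):.1f} gigaflops")
    print(f"Efficiency gain: {kepler1 / fermi:.2f}x")
```

With the Fermi figure set at 225 watts instead, the ratio drops to roughly 2.7, so the claim holds up only as a round number.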

The Kepler GPUs are not just about shrinking the cores and adding more of them running at a lower speed to a GPU to boost performance. That would probably not be enough to take on the exascale computing tasks that Nvidia is wrestling with as it positions its Tesla GPU coprocessors as the preferred compute engines for future supercomputers, even if this would probably be good enough to make graphics chips that could compete against whatever Advanced Micro Devices could come up with.

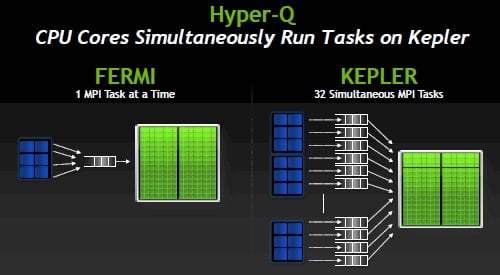

One new technology that is going to make the Keplers much better than the Fermis is called Hyper-Q, and as the name suggests, it creates a queue for message passing interface (MPI) tasks running on parallel and hybrid CPU-GPU clusters so multiple MPI tasks can be dispatched from the CPU to the GPU in parallel.

This is so obvious in hindsight that you might have already been thinking that this has already happened, but Gupta says that the Fermi GPUs could only handle one MPI task at a time.

Nvidia’s Hyper-Q feature for Kepler GPUs

The Kepler GPUs, by contrast, can have up to 32 distinct MPI tasks beamed to them from the CPU and dispatch them to different segments of the GPU to have them run on isolated chunks of the cores.

It is not clear what the granularity is on the Hyper-Q function, but it is probably no coincidence that there are eight SMX units with 192 cores apiece, and it would not be surprising if Nvidia is effectively partitioning each SMX into four units of 48 cores, giving 32 partitions that can each run a different task at once. Those 48-core partitions are 50 per cent larger than an SM block on a Fermi GPU, which had 32 cores that ran about 35 per cent faster. So the net performance of this SMX sub-block and the SM block would be more or less the same.
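The arithmetic behind that guess is easy to reproduce. The sketch below uses only figures quoted in this article; the even split of cores across queues is the speculation described above, not a documented Nvidia design, and the names are made up for the example.

```python
# Speculative Hyper-Q partition arithmetic, using the article's figures.
SMX_UNITS = 8
CORES_PER_SMX = 192
HYPERQ_QUEUES = 32
FERMI_SM_CORES = 32
FERMI_CLOCK_ADVANTAGE = 1.35  # Fermi cores "about 35 per cent faster"


def cores_per_queue(smx_units: int, cores_per_smx: int, queues: int) -> int:
    """Cores available to each queue if the chip is split evenly."""
    total = smx_units * cores_per_smx
    if total % queues != 0:
        raise ValueError("cores do not divide evenly across queues")
    return total // queues


def relative_block_throughput(kepler_cores: int, fermi_cores: int,
                              fermi_clock_advantage: float) -> float:
    """Kepler partition throughput relative to one Fermi SM."""
    return kepler_cores / (fermi_cores * fermi_clock_advantage)


if __name__ == "__main__":
    partition = cores_per_queue(SMX_UNITS, CORES_PER_SMX, HYPERQ_QUEUES)
    ratio = relative_block_throughput(partition, FERMI_SM_CORES,
                                      FERMI_CLOCK_ADVANTAGE)
    print(f"{partition} cores per queue, {ratio:.2f}x one Fermi SM")
```

The ratio lands at about 1.1, which is what "more or less the same" amounts to here.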

Get to work, you lazy core

No matter how Nvidia is doing it, the important thing is that the CUDA cores are not going to be sitting around tapping their feet, waiting for MPI to send them work from the CPU. While seismic workloads can already stress out a GPU dispatching one MPI task to the GPU, there are many workloads that can submit four or eight MPI tasks, says Gupta, and on the current Fermi GPU coprocessors, the efficiency for sparse matrices or finite element analysis can look “really bad”.
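A toy model shows why serialized dispatch hurts. Suppose each MPI task, on its own, can keep only a fraction of the GPU busy. With one hardware queue the chip never gets busier than that fraction; with several queues, tasks run side by side until the chip is full. The function and the numbers below are hypothetical, and the model ignores scheduling overhead, memory contention, and uneven task lengths.

```python
# Toy utilization model: one hardware work queue versus many.
# All inputs are hypothetical; real utilization depends on the code.

def utilization(num_tasks: int, task_fill: float, hw_queues: int) -> float:
    """Fraction of the GPU kept busy.

    num_tasks: MPI tasks ready to submit work
    task_fill: share of the GPU one task can occupy on its own (0 to 1]
    hw_queues: tasks the hardware can run concurrently
    """
    if not 0.0 < task_fill <= 1.0:
        raise ValueError("task_fill must be in (0, 1]")
    concurrent = min(num_tasks, hw_queues)
    return min(1.0, concurrent * task_fill)


if __name__ == "__main__":
    # Four tasks that can each fill a fifth of the GPU.
    one_queue = utilization(num_tasks=4, task_fill=0.2, hw_queues=1)
    many_queues = utilization(num_tasks=4, task_fill=0.2, hw_queues=32)
    print(f"one queue: {one_queue:.0%}, 32 queues: {many_queues:.0%}")
```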

On the DGEMM double-precision matrix multiply portion of the Linpack Fortran benchmark test, Hyper-Q helps significantly. The DGEMM-to-peak ratio on the Fermi GPUs was at best around 65 per cent of peak theoretical performance, while on the Kepler GPUs it is in the range of 80 to 85 per cent.

On typical workloads, customers were seeing GPU utilization on the Fermis in the range of 25 to 50 per cent, but now customers can expect – thanks to Hyper-Q and depending of course on their code – efficiencies of between 70 and 90 per cent for any particular time slice.

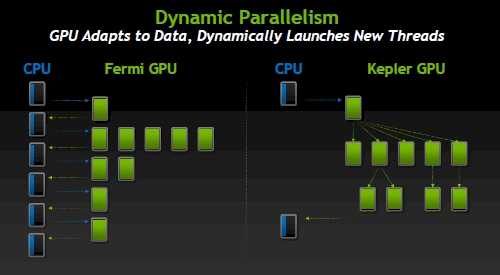

Not only is the Kepler GPU better at juggling work that the CPU offloads to it than the Fermi chip was, but with the Dynamic Parallelism feature of the chip, the GPU can launch work for itself as it deals with nested loops, recursion, and nested calls to libraries.

“The GPU has become more autonomous,” says Gupta, “and this makes the GPU programming a lot easier. If you have to go back and forth to the CPU all the time to run routines, you lose many of the advantages of using a GPU in the first place.” So Dynamic Parallelism gets rid of that.

Nvidia’s Dynamic Parallelism for Kepler GPUs

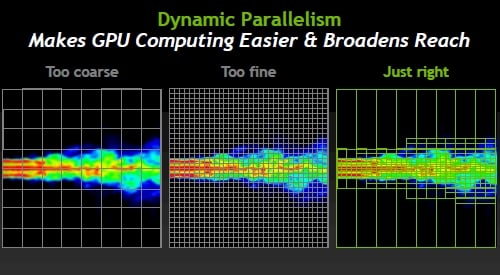

The idea behind Dynamic Parallelism is not just to make the GPU more autonomous for its own sake, but to allow for the granularity of calculations to reflect the density of the data that is being generated for a simulation. While this may be a little tough to grasp conceptually, one picture makes it clear why Dynamic Parallelism is a very powerful addition to the GPU toolkit:

Variable granularity is what Dynamic Parallelism does for GPUs

The driving force behind Dynamic Parallelism in the Kepler GPUs is to allow for regions of simulation to be dynamically adjusted. If you do it too coarsely, your simulation yields crap results, and if you do it too finely, you get good results but it takes forever because you are doing calculations on regions of virtual space in the simulation where nothing interesting is happening.

The idea is to do coarser calculations where space is boring and finer calculations where lots of stuff is going on, and more importantly, to allow the GPU to make decisions about the granularity of calculations on the fly. The GPU reacts to the data, launching new threads to do finer-grained calculations where required.
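The coarse-where-boring, fine-where-busy idea can be sketched on the CPU with plain recursion. The example below is an analogy written in Python, not CUDA code and not Nvidia's implementation: each recursive call stands in for the child work a Kepler GPU could launch for itself. The `refine` function, the tolerance, and the test function are all invented for illustration, and comparing only the endpoints of a cell is a deliberately crude measure of how much is going on inside it.

```python
# CPU-side analogy for data-driven refinement: split a cell only where
# the function changes a lot across it. Each recursive call stands in
# for work a GPU with Dynamic Parallelism could launch for itself.
import math
from typing import Callable, List, Tuple

Interval = Tuple[float, float]


def refine(f: Callable[[float], float], lo: float, hi: float,
           tol: float, max_depth: int) -> List[Interval]:
    """Return cells covering [lo, hi], finer where f varies more."""
    if max_depth == 0 or abs(f(hi) - f(lo)) < tol:
        return [(lo, hi)]  # boring region: keep the coarse cell
    mid = (lo + hi) / 2.0
    # Busy region: split it and refine both halves further.
    return (refine(f, lo, mid, tol, max_depth - 1)
            + refine(f, mid, hi, tol, max_depth - 1))


def sharp_front(x: float) -> float:
    """Hypothetical test function: flat except for a steep step at 0.5."""
    return math.tanh(20.0 * (x - 0.5))


if __name__ == "__main__":
    cells = refine(sharp_front, 0.0, 1.0, tol=0.1, max_depth=10)
    widths = [hi - lo for lo, hi in cells]
    print(f"{len(cells)} cells, widest {max(widths)}, narrowest {min(widths)}")
```

The flat edges of the domain end up as a handful of wide cells while the region around the step is cut far more finely, and nothing outside the recursion had to decide where to look.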

Add it all up, and Gupta says that the Kepler GPUs will appeal to a much broader set of calculation and simulation workloads. “All of these people who were sitting on the fence will now move to GPUs,” declares Gupta.

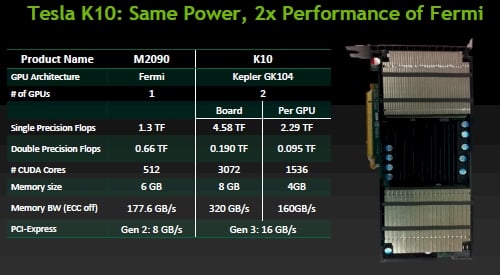

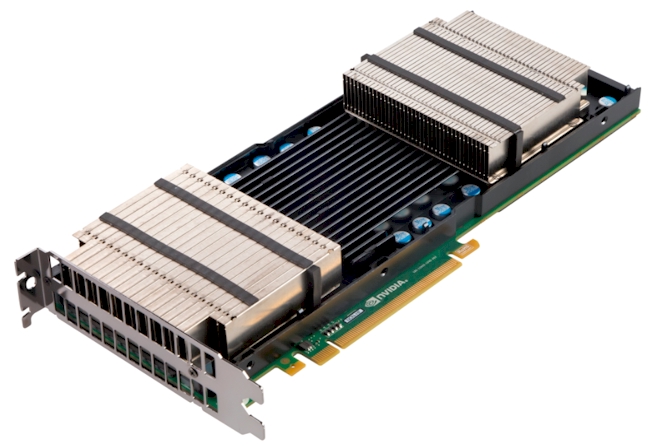

In the meantime, Nvidia is packaging up the Tesla K10 coprocessor card for servers, which puts two of the Kepler1 or GK104 GPUs on a single card and offers three times the single-precision math oomph of a top-end Tesla M2090 card using the full-on Fermi GPU.

The Tesla K10 and K20 GPU coprocessors slide into PCI-Express 3.0 slots, which means that at this point in the server cycle, they only work with Intel’s Xeon E5 family of “Sandy Bridge” processors for two-socket and four-socket servers. No other server chip is supporting PCI-Express 3.0 slots at this time.

Old Tesla M2090 versus new Tesla K10

As you can see, the Tesla K10 can’t do much in terms of double-precision math, but at 4.58 teraflops per card and 320GB/sec of memory bandwidth (that’s with ECC turned off on the GDDR5 memory) feeding those 3,072 cores on the board from the two ranks of 4GB memory (one for each GPU) and 16GB/sec of bandwidth out to the PCI-Express bus, there are plenty of customers doing seismic, signal, image, and life sciences workloads that only use single-precision math anyway. So the Tesla K10s will be fine.
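The card-level figures hang together if you run the numbers. The sketch below takes the core counts, memory sizes, and peak ratings quoted in this article and, assuming two single-precision flops per core per clock (one fused multiply-add), backs out the clock speed the K10 rating implies. That implied clock is a derived estimate, not a specification quoted by Nvidia here.

```python
# Tesla K10 card arithmetic from the article's figures.
GPUS_PER_CARD = 2
CORES_PER_GPU = 1536
MEMORY_PER_GPU_GB = 4
K10_PEAK_TFLOPS = 4.58      # single precision, per card
M2090_PEAK_TFLOPS = 1.33    # single precision, 512-core Fermi
FLOPS_PER_CORE_PER_CLOCK = 2  # assumption: one fused multiply-add per clock


def implied_clock_mhz(peak_tflops: float, cores: int,
                      flops_per_clock: int) -> float:
    """Clock speed in MHz needed to hit a given peak rating."""
    return peak_tflops * 1e12 / (cores * flops_per_clock) / 1e6


total_cores = GPUS_PER_CARD * CORES_PER_GPU
total_memory_gb = GPUS_PER_CARD * MEMORY_PER_GPU_GB
speedup = K10_PEAK_TFLOPS / M2090_PEAK_TFLOPS

if __name__ == "__main__":
    clock = implied_clock_mhz(K10_PEAK_TFLOPS, total_cores,
                              FLOPS_PER_CORE_PER_CLOCK)
    print(f"{total_cores} cores, {total_memory_gb}GB, "
          f"implied clock {clock:.0f}MHz, {speedup:.2f}x the M2090")
```

On these assumptions the cores on the K10 would be running well below the 1006MHz base speed of the graphics parts, which fits the slow-and-wide approach of the design.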

Those doing finite element analysis, computational fluid dynamics, various physics simulations, and financial calculations and simulations that are dependent on double-precision floating point math will have to wait for the Tesla K20 cards using the Kepler2 GPUs. Perhaps not patiently, but with AMD not really doing much with its FireStream GPU coprocessors and Intel not shipping its MIC parallel X86 coprocessors, waiting is the best and pretty much the only option. ®

This article originally appeared in The Register. It appears here in its entirety as part of a cross-publishing agreement.

By Richard Chirgwin

Looking at the fundamental properties of matter can take some serious computing grunt.

Take the calculation needed to help understand kaon decay – a subatomic particle interaction that helps explain why the universe is made of matter rather than anti-matter: it soaked up 54 million processor hours on Argonne National Laboratory’s BlueGene/P supercomputer near Chicago, along with time on Columbia University’s QCDOC machine, Fermi National Lab’s USQCD (the US Center for Quantum Chromodynamics) Ds cluster, and the UK’s Iridis cluster at the University of Southampton and the DIRAC facility.

The reason so much iron was needed: the kaon decay spans 18 orders of magnitude, which this Physorg article describes as akin to the size difference between “a single bacterium and the size of our entire solar system”. At the smallest scale, the decays measured in the experiment were 1/1000th of a femtometer.

“The actual kaon decay described by the calculation spans distance scales of nearly 18 orders of magnitude, from the shortest distances of one thousandth of a femtometer – far below the size of an atom, within which one type of quark decays into another – to the everyday scale of meters over which the decay is observed in the lab,” Brookhaven explains in its late March release.
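The 18 orders of magnitude can be checked in a couple of lines. The sketch takes one thousandth of a femtometer as the small end and, as an assumption, a single meter as the "everyday scale" at the large end.

```python
# Span between the smallest and largest distance scales in the calculation.
import math

FEMTOMETER_M = 1e-15
smallest_m = FEMTOMETER_M / 1000  # one thousandth of a femtometer
largest_m = 1.0                   # assumption: "scale of meters" taken as 1 m


def orders_of_magnitude(small: float, large: float) -> float:
    """Number of powers of ten separating two lengths."""
    return math.log10(large / small)


if __name__ == "__main__":
    span = orders_of_magnitude(smallest_m, largest_m)
    print(f"{span:.1f} orders of magnitude")
```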

Back in 1964, a Nobel-winning Brookhaven experiment observed CP (charge parity) violation, setting up a long-running mystery in physics that remains unsolved.

“The present calculation is a major step forward in a new kind of stringent checking of the Standard Model of particle physics – the theory that describes the fundamental particles of matter and their interactions – and how it relates to the problem of matter/antimatter asymmetry, one of the most profound questions in science today,” said Taku Izubuchi of the RIKEN BNL Research Center and BNL, a member of the research team that published their findings in Physical Review Letters.

The research is seeking to quantify how much the kaon decay process departs from Standard Model predictions. This “unknown quantity” will then be hunted in calculations in the next generation of IBM supercomputers, BlueGene/Q. ®

This article originally appeared in The Register. It appears here in its entirety as part of a cross-publishing agreement.

PCIe 3.0 is backward-compatible with PCIe 2.0 and 1.0, so you can still use these cards on current and older boards. You’ll just have less available bandwidth to/from the CPU.