For about 40 years, developers and users could count on steady increases in CPU clock speed to make applications run faster. Existing code could simply be moved to a new system, and the application would run faster. Some tuning might be needed to extract even more performance, usually by recompiling to take advantage of new machine instructions. However, now that clock rates have plateaued, performance gains must come from higher core counts and from additional new instructions. This means that rethinking algorithms and APIs, and using the latest compilers, has become critical for the next generation of application performance improvements. As you read this article and the weekly series to follow, you will learn why code modernization is so important.

Many of the applications used in scientific and technical computing date back many years, to a time when computers were large, had a single processing unit, and had limited memory. For much of the past 40 years, clock rates increased on a regular basis, delivering steady application performance improvements. Memory became plentiful, so more data could be kept in memory and additional simulation algorithms could be developed. Through the 1990s and beyond, servers and workstations began to place more than one CPU on a single board. Applications could then take advantage of multiple processing units housed within the same server and managed by a single operating system. Developers could add compiler directives to their code to spread computation across the separate CPUs. OpenMP, first released in 1997, is an example of a set of compiler directives that supports shared-memory parallel processing.
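To illustrate what such a directive looks like, here is a minimal C sketch in which a single OpenMP pragma splits a loop across the available CPU cores. The function name `parallel_sum` is hypothetical, chosen only for this example. The sketch assumes an OpenMP-capable compiler, such as GCC invoked with `-fopenmp`; without that flag the pragma is ignored and the loop simply runs serially, producing the same result.

```c
#include <stddef.h>

/* Hypothetical example function: sums an array of doubles, letting an
   OpenMP directive divide the loop iterations among threads.
   Build with an OpenMP-enabled compiler (e.g. gcc -fopenmp). Without
   OpenMP support the pragma is ignored and the loop runs serially. */
double parallel_sum(const double *x, size_t n)
{
    double sum = 0.0;

    /* Each thread accumulates its share of the iterations into a private
       copy of `sum`; the reduction clause adds those copies together
       when the loop finishes, so there is no data race on `sum`. */
    #pragma omp parallel for reduction(+:sum)
    for (long i = 0; i < (long)n; i++) {
        sum += x[i];
    }

    return sum;
}
```

Calling `parallel_sum(values, n)` on an array of `n` doubles returns their total. The serial loop is unchanged apart from the pragma, which is what made directive-based parallelism attractive for existing code. Because the threads' partial sums may be combined in a different order from run to run, a floating-point result can differ in the last bits from a purely serial sum.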

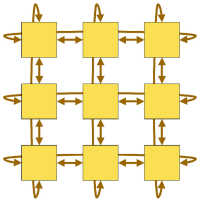

The next step in this ongoing evolution was twofold. The first was the development of an Application Programming Interface (API) that allowed applications to spread their work across individual, separate servers. The servers had to be connected by a network, but each server could perform its assigned work and then communicate results as needed to the processes running on the other servers. This API became known as the Message Passing Interface (MPI). The second came from the hardware side, as multiple computing cores on a single chip, or socket, became common. In mainstream computers, the number of cores per socket has risen from two in the early to mid-2000s to about 15 currently in the Intel Xeon family.

Together, these hardware and software innovations have created an environment for technical computing that far exceeds what was available just a few years ago. Applications now need to be adapted, or written from the start, to run on hundreds or thousands of cores. By combining APIs such as MPI and OpenMP, an application can spread its work across hundreds of servers with thousands of cores. To take advantage of these software and hardware advances, application code must be modernized, using both innovative thinking and the tools available to every developer.