This is the third article in a series on six strategies for maximizing GPU clusters. These best practices can be used to maximize GPU resources in a production HPC environment.

Strategy 3: Manage User Environments

In a perfect world, there would be one version of all compilers, libraries, and profilers. To make things even easier, hardware would never change. However, technology marches forward, and such a world does not exist. Software tool features are updated, bugs are fixed, and performance is increased. Developers need these improvements but at the same time must manage these differences. A typical example on many Linux systems is the software libraries on HPC systems. HPC applications are often built with specific compiler versions and linked to other specific library versions that all must work correctly together. The dependency tree can become quite complex.

Download the insideHPC Guide to Managing GPU Clusters

Allowing users to manage these differences “by hand“ is a poor solution. Managing environments is done with a combination of methods. Source code is often made more robust by using conditional definitions to expose correct code sections for various compilers or software environments (e.g. #define, #ifdef , #ifndef, etc. in C include files). In combination with this approach, the users software environment has defined values that point to tool and library locations, manual pages, and other important “defines“ that makes sure the development toolset is correctly configured. (e. g. INCLUDEPATH, LD_LIBRARY_PATH, CUDA_INCLUDE_PATH, etc. in the users shell environment.) Administrators can set default environment defines, however, when users need to change a define (or more likely a set of defines) to point to a different version of the software, they often do it “by hand“ in their shell configuration files (e.g. .bashrc or .cshrc ). Changing these settings can be complicated, error prone, and hard to manage for developers. Without some form of “environment management“ the “hand managed“ development environment very quickly becomes a fragile solution for software developers.

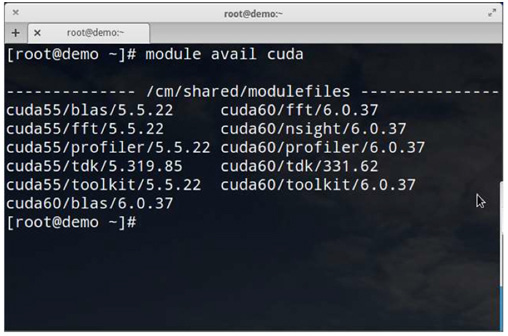

The most widely used open source environment management tool is called “Modules“ and is available from http://modules.sourceforge.net. (Another tool called lmod performs a similar function and is available from https://www.tacc.utexas.edu/research-development/tacc-projects/lmod). By using the Module tool, a user’s environment “defines“ can be changed with one command. For example, if configured correctly, the command: $ module load cuda60/toolkit/6.0.37

will set users development environment to use the NVIDIA CUDA Toolkit version 6.0.37. Once set the developer can be assured that they are using a correctly configured development environment. It would be equally easy to change to a newer CUDA environment, such as version 7, with a similar command.

The use of Modules (or lmod) is a powerful method that can help reduce developer and administrator frustrations. Although the Modules package has been a boon to HPC users and administrators, each environment must be configured and tested before the developers can take advantage of the functionality. This requires considerable thought and planning because module naming needs to be consistent and represent the important differences between tools as the number of options grows.

Unique among cluster management options, Bright Cluster Manager offers fully configured Modules environment for NVIDIA GPU clusters. There is no need to develop and test Module environments for new software. Indeed, in addition to NVIDIA GPU development, Bright Cluster Manager includes many preconfigured module files, for compilers, mathematical, and MPI libraries. Additional modules can be configured centrally by the system administrator, or locally by an individual user. Most popular shells are supported, including bash, ksh, sh, csh, tcsh.

Combined with the automatic GPU software updates, the Bright Cluster Manager offers developers instant availability of new development environments. When NVIDIA releases a new CUDA version, a fully tested Module based development environment is made available as part of the automatic update. Developers can begin using the new environment right away. As an example, Figure 2 shows two CUDA environments existing side-by-side on a cluster. The developer can choose an environment using a single module load command.

Figure 2: Bright Cluster Manger provides a fully configured development environment using Modules configured for the NVIDIA software environment. Developers can change environments with a single command.

Strategy 4: Provide Seamless Support for all GPU Programming Models

In addition to the CUDA environment mentioned above, developers have many options when programming GPUs. In particular, NVIDIA GPUs have an excellent HPC software ecosystem. There are many approaches to GPU programming but most HPC applications utilize one of the following compiler methodologies:

- NVIDIA CUDA – the CUDA programming model was developed by NVIDIA and provides a powerful model to express parallel GPU algorithms in a C/C++ and Fortran. The nvcc CUDA compiler is available for NVIDIA hardware.

- OpenCL – is a framework for writing programs that execute across heterogeneous platforms such as CPU and GPUs. Based on the C99 standard, the clcc compiler will allow code development on heterogeneous processing architectures.

- OpenACC – is a multi-vendor supported specification based largely on early work by The Portland Group that allows new and existing C and Fortran programs to be modified with comment-based directives. (i.e., the function of the original program is not changed.) A compiler that supports OpenACC is then used to create GPU-ready programs. More information can be found from the http://www.OpenACC.org

A fourth method using optimized libraries in conjunction with a conventional host compiler can also be used to add GPU capabilities to applications. There are several accelerated libraries available to developers including:

- cuBLAS – an implementation of the Basic Linear Algebra Subprograms package

- cuSPARSE – a set of sparse matrix subroutines

- cuFFT – an implementation of FFT algorithms for NVIDIA GPUs.

- cuDNN library of primitives for Deep Neural Networks

- NPP – NVIDIA Performance Primitives is a very large collection of 1000’s of image processing primitives and signal processing primitives.

There are also wrappers for other languages (Python, Perl, Java, and others) that allow GPUs to be used. In a typical HPC environment C/C++ and Fortran compilers from GNU, The Portland Group, Intel, and others are used. The Portland Group, Cray, PathScale and GNU gcc 5 compilers now support OpenACC, however, Intel compilers do not. To help with debugging and profiling there are applications such as TAU, Allinea, and TotalView)

Most HPC clusters also include a healthy mix of MPI (Message Passing Interface) libraries including OpenMPI, MPICH, MPICH-MX, and MVAPICH. Finally there are many scientific libraries, including mathematical routines that are made available to developers.

This diverse high performance software tool ecosystem is necessary for today’s HPC installations. As mentioned previously, managing these options can be done by using the Modules package. Without some kind of automatic and systematic compiler/library management a developer would need to spend time creating the critical environment to compile and run the applications for both CPUs and GPUs. Any changes, such as new versions or new hardware, can easily break a handmade development environment.

The task of creating and testing Module configuration often falls on the system administrator. One common approach is to create an entire tool chain environment including math (e.g., PETSc or ScaLAPACK), MPI libraries, and others. Users can invoke various tool chains when using a specific compiler or library version. The real power of Modules is when a new compiler or library version is available, a new tool chain can be built and installed next to the existing tool chain. Thus, users can easily move between old and new version with ease. A similar configuration should be created for all GPU tools as well.

Bright Cluster Manager comes with many of the popular GPU (and CPU) based tool chains preinstalled with full Module support. That is, there is no need to weave together various tool chains for use with the Modules package. Developers can easily move between virtually all development environments with a simple Modules command. The installed Module configuration is also very flexible and extensible. As mentioned above, administrators can add new Module environments for virtually any installed package. With a fully integrated GPU tool chain, developers can begin working right away, there is no need to configure or construct a development environment.

Next week we’ll dive into strategies for dealing with the convergence of of HPC and big data analytics. If you prefer you can download the complete insideHPC Guide to Managing GPU Clusters courtesy of NVIDIA and Bright Computing.