On Monday of this week, we covered a quick press release from Mellanox regarding their message performance. Specifically, it detailed the number of messages they could dispatch per second, per node. In turn, this allows one to hypothesize about the latency required to perform a single message transfer.

Why do we care? Well, HPC interconnect technologies have continuously improved upon their large message performance, which usually translates to higher theoretical peak bandwidth values. Historically, it was very expensive for the operating system to start, send and receive messages via traditional, framed and connection-oriented interconnects (e.g., Ethernet). As such, application architects would aggregate messages into larger blocks in order to amortize the software overhead associated with each message. Passing individual, small messages was utter lunacy.

Enter HPC in 2010. Those aggregate messages no longer exceed the capabilities of the interconnect. Moreover, many of the latest applications are taking serious advantage of the latency improvements of modern RDMA interconnects. Long story short, apps can flood the network with small messages.

Mellanox has gone to great lengths in the last two years to not only improve the bandwidth performance of InfiniBand interconnects, but also improve the message throughput. Gilad Shainer, Mellanox’s Senior Director of HPC, was kind enough to send me a few slides with more technical meat on their results.

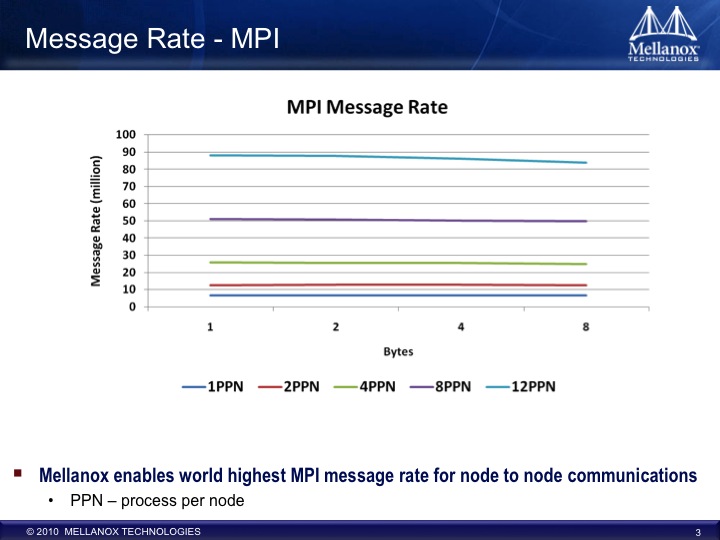

The test includes five different thread layouts: 1, 2, 4, 8 and 12 MPI threads were tested with 1, 2, 4 and 8 byte message payloads. No word on whether this was an Intel- or AMD-based system. At the low end, the single-threaded test achieves between 8 and 9 million messages per second, holding reasonably steady across payload sizes. At 8 million messages per second, that works out to one message every 0.125 microseconds.
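For anyone who wants to check that arithmetic, here is a minimal Python sketch of the conversion from message rate to the average gap between messages. The function name is my own, and the two rates are simply the 8 and 9 million figures quoted above. Keep in mind this is the average spacing between dispatched messages, not necessarily the end-to-end latency of any single message, since messages can be in flight concurrently.

```python
def seconds_per_message(messages_per_second: float) -> float:
    """Average time between messages at a given sustained message rate."""
    return 1.0 / messages_per_second


# The single-threaded range quoted in the article: 8 to 9 million messages/s.
for rate in (8e6, 9e6):
    interval_us = seconds_per_message(rate) * 1e6  # seconds -> microseconds
    print(f"{rate / 1e6:.0f} M msg/s -> {interval_us:.3f} us between messages")
```

At the 9 million end of the range, the gap shrinks to roughly 0.111 microseconds.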

The tests go on to scale up to 90 million messages per second for 12 threads using 1 byte message payloads. The performance at 12 threads isn’t perfectly flat across payload sizes, but it only drops to ~82 million messages per second at 8 byte payloads.
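To put the 12-thread numbers in perspective, a rough back-of-the-envelope sketch in Python follows. The helper names are hypothetical, and the inputs are the approximate 90 and ~82 million figures from the paragraph above, so treat the results as ballpark values rather than measurements.

```python
def per_thread_rate(total_rate: float, threads: int) -> float:
    """Average message rate contributed by each thread."""
    return total_rate / threads


def relative_drop(high: float, low: float) -> float:
    """Fractional decrease going from the higher rate to the lower one."""
    return (high - low) / high


rate_1_byte = 90e6  # approximate 12-thread rate at 1 byte payloads
rate_8_byte = 82e6  # approximate 12-thread rate at 8 byte payloads

print(f"per thread at 1 byte: {per_thread_rate(rate_1_byte, 12) / 1e6:.2f} M msg/s")
print(f"per thread at 8 bytes: {per_thread_rate(rate_8_byte, 12) / 1e6:.2f} M msg/s")
print(f"drop from 1 to 8 bytes: {relative_drop(rate_1_byte, rate_8_byte):.1%}")
```

By this estimate each of the 12 threads still averages about 7.5 million messages per second at 1 byte payloads, a bit under the 8 to 9 million seen in the single-threaded run, and the move to 8 byte payloads costs a little under 9 percent.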

Thanks to Brian Sparks and Gilad Shainer for sharing benchmark info! Click on the image for a full view of the results.