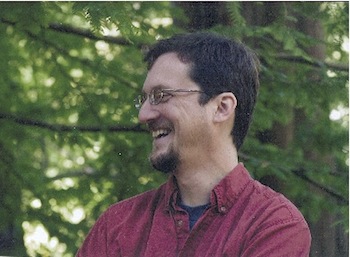

This series is about the men and women who are changing the way the HPC community develops, deploys, and operates the supercomputers we build on behalf of scientists and engineers around the world. John Shalf, this month’s HPC Rock Star, leads the Advanced Technology Group for Lawrence Berkeley National Lab, has authored more than 60 publications in the field of software frameworks and HPC technology, and has been recognized with three best papers and one R&D 100 award.

Among the works he has co-authored are the influential “View from Berkeley” (led by David Patterson and others), the DOE Exascale Steering Committee, and the DARPA IPTO Extreme Scale Software Challenges report that sets DARPA’s information technology research investment strategy for the next decade.

He also leads the LBNL/NERSC Green Flash project, which is developing a novel HPC system design (hardware and software) for kilometer-scale global climate modeling that is hundreds of times more energy efficient than conventional approaches, and participates in a large number of other activities that range from the DOE Exascale Steering Committee to serving as Program Committee Chair for the SC2010 Disruptive Technologies exhibit.

Shalf’s energy and dedication to HPC are helping to actively shape the future of HPC, and that’s what makes him this month’s HPC Rock Star.

insideHPC: How did you get started in HPC?

John Shalf: I spent a lot of time as a kid hanging out in the physics department and computing center at Randolph-Macon College (RMC) in Ashland, where I grew up. The professors there gave me (and other neighborhood kids) accounts on their IBM mainframe and Perkin-Elmer UNIX minicomputer, and access to the supply rooms behind the classrooms, where there were hundreds of computing technology artifacts such as 3D stacked core memories from old IBM systems and adders constructed using vacuum tube logic. My friends and I spent a lot of time in the summers and after school in 4th through 6th grade exploring the back rooms and having the professors patiently explain what we were looking at and how it worked. We also got our first taste of the UNIX operating system and CRT terminals, although we learned more about playing Venture (a text video game) than programming.

When I was about 11, Dr. Maddry offered to teach me how to build a computer in exchange for my help cleaning up his lab during the summer. We actually had a race where he built a computer using a Z80 chip, and I built my computer using an 8080A. Both of our computers had 128 bytes (yes bytes… not kilobytes) of memory, ran at 500 kHz (could run faster if you turned off the fluorescent lights in the room), and were programmed using a set of DIP switches on the board. I still remember the 8212 tristate latches and the TTL discrete logic chips we needed to glue everything together. I had a blast building it, and just as much fun programming it, despite the rudimentary nature of the user interface and the low resolution of the display system (12 LEDs lined up in a row to show the data and the memory addresses). After that, I became hooked on computer architecture and machine design.

In college, I took my first HPC course and became interested in parallel computation. We got accounts on the HPC systems (Cray vector and IBM) at the NSF supercomputing centers. I was particularly fascinated with Thinking Machines systems, but also learned a lot about dataflow computing. Around this time, I also collected many old machines through surplus auctions to learn how they worked. I had quite a collection of PDP-8s and PDP-11s, and started the Society for the Preservation of Archaic Machines (SPAM). The chemistry department maintained many PDPs for their experiments, so they became a resource for manuals, circuit diagrams, advice on machine repair, and a FORTH interpreter that ran on top of RSTS.

During this time, I also discovered Ron Kriz’s vislab, where I developed an interest in computer graphics and visualization as another way to interact with the HPC community. Whereas I had been connected to computing only through my study of computer architecture and programming, the vislab and my work on programming and optimization of material science codes for the Engineering Science and Mechanics (ESM) department opened me up to direct collaboration with science groups. It was there that I learned that interdisciplinary collaborations in HPC are where the rubber hits the road. The fact that the pursuit of answers to scientific grand challenges requires such broad-based collaborations is what makes “supercomputing” so exciting.

insideHPC: What would you call out as one or two of the high points of your career — some of the things of which you are most proud?

Shalf: My first real job in HPC was at NCSA, where I divided my time between NCSA’s HPC consulting group (led by John Towns), Ed Seidel’s General Relativity Group, and Mike Norman’s Laboratory for Computational Astrophysics. These were the golden years for NCSA and the NSF HPC Centers program as well. NCSA Mosaic was just getting popular. I got to work on HPC codes on a variety of platforms. The LCA was developing its first AMR codes (Enzo). I got to learn how to work on virtual reality programs in the CAVE, and participated in national-scale high-performance networking test beds for the SC1995 IWAY experiment. There was such a wide variety of computer architectures: a Cray Y-MP, a Convex C3880, and a Thinking Machines CM-5.

What an amazing time!

It was also a time of great transition because it was clear that our vector machines were going to be turned off eventually and replaced by clusters of SMPs (SGIs and Convex Exemplars initially, followed by clusters). It’s very similar to what is happening to the HPC community today as we transition to multicore. It was an exciting time to start in HPC. There were new languages like HPF, messaging libraries like PVM and P4, and MPI. It was unclear what path to take to re-develop codes for these emerging platforms, so we tried all of the options using toy codes. Everyone was busily creating practice codes to try out each of these emerging alternatives before re-developing their entire code base to survive this massive transition of the hardware/software ecosystem.

The first few implementations of the parallel codes worked, but revealed serious impediments to future collaborative code development. When Ed Seidel’s group moved to the Max Planck Institute in Potsdam, Germany, Paul Walker and Juan Masso hatched a plan to create a new code infrastructure, called Cactus. It would combine what we’d learned about how to parallelize the application efficiently and hide the MPI code from the application developers with clever software engineering to support collaborative, multidisciplinary code development. Cactus was so named by Paul because it was to “solve thorny problems in General Relativity”. I had a huge amount of fun developing components for the first versions of Cactus, which is still used today (www.cactuscode.org). We had a huge sense of purpose and dedication to the development of Cactus infrastructure: creating advanced I/O methods, solver plug-ins, remote steering/visualization interfaces, etc. I continued to work with subsequent Cactus developers (Gabrielle Allen, Tom Goodale, Erik Schnetter, and many others) for many years after leaving Max Planck to extend it for Grid computing and new computing systems. One of the first things the group did when I came to LBNL was to run the “Big Splash” calculation of inspiraling colliding black holes on the NERSC “Seaborg” system. The calculation was ground-breaking in that it disproved a long-held model for initial conditions for these inspiraling mergers, and its demonstration of what you could do with large-scale computing resources ultimately spawned the DOE “INCITE” program. The work with the Cactus team is one of the highlights of my career, even though there was a cast of hundreds contributing to its success.

The Green Flash project is also one of the projects that has been a lot of fun. Like Cactus, there are a large number of people working on different aspects of this multi-faceted project. I definitely love this kind of broad interdisciplinary work. We get to re-imagine computing architecture, programming models, and application design for the massively parallel chip architectures that we anticipate will be the norm by 2018. Our multi-disciplinary team is at the forefront of applying co-design processes to the development of efficient computing systems for the future. There are a lot of similarities between the move towards manycore/power-constrained architectures and the massive disruptions that occurred at the start of my career when everyone was moving from vectors to MPPs. It is exciting to have such an open slate for exploration, and a time for radical concepts in computer architecture to be reconsidered.

insideHPC: What do you see as the single biggest challenge we face (the HPC community) over the next 5-10 years?

Shalf: The move to exascale computing is the most daunting challenge that the community faces over the next decade. If we do not come up with novel solutions, then we will have to contend with a future where we must maintain our pace of scientific discovery without future improvements in computing capability.

The exascale program is not just about “exaFLOPS,” it’s about the phase transition of our entire computing industry that affects everything from cell phones to supercomputers. This is as big a deal as the conversion from vectors to MPI two decades ago. We cannot lose sight of the global nature of this disruption: it is not just about HPC. DARPA’s UHPC program strikes the right tone here. We need that next 1000x improvement for devices of all scales. Until recently we have been limited by costs and chip lithography (how many transistors we could cram onto a chip), but now hardware is constrained by power, software is constrained by programmability, and science is squeezed in between. Even if we solve those daunting challenges, science may yet be limited by our ability to assimilate results and even validate those results.

I think there is a huge problem with us conflating success in “exascale” with the idea that the best science must consume an entire exascale computing system (the same is true to some extent of our obsession with scale for “petascale”). The best science comes in all shapes and sizes. The investment profile should be more balanced towards scientific impact (scientific merit, whether it is measured in papers or US competitiveness). There is a role for stunts to pave the way to understand how to navigate the path to the next several orders of magnitude of scaling. But the focus should definitely be more on creating a better computing environment for everyone: more programmable, better performing, and more robust.

We do have a tendency to say that the solution to all of our programmability problems is just finding the right programming model. This puts too much burden on language designers and underplays the role of basic software engineering for creating effective software development environments. Dan Reed once said that our current software practices are “pre-industrial,” where new HPC application developers join the equivalent of a “guild” to learn how to program a particular kind of application. Languages and hardware play a role (just as the steam engine played a role in the start of the industrial revolution), but software engineering and good code structures that clearly separate the roles of CS experts from those of domain scientists and algorithm designers (as in frameworks like Cactus, Chombo, and Vorpal) are also critical areas that often get under-appreciated in the development of future apps.

insideHPC: How do you keep up with what’s going on in the community and what do you use as your own “HPC Crystal Ball?”

Shalf: For hardware design and computer science, attending many meetings to interact with the community plays an essential role in gauging the zeitgeist of the community. Given the huge amount of conflicting information, you need to talk to a lot of people to get a more statistical view of what technology paths are actually practical and what is just wishful thinking. Getting someone to talk over a beer is always more insightful for the “HPC Crystal Ball” than simply accepting their PowerPoint presentation or paper at face value. You have to constantly look at what other people are doing.

I’ve always enjoyed the SIAM PP (SIAM Conference on Parallel Processing for Scientific Computing) and SIAM CSE (SIAM Conference on Computational Science and Engineering) meetings as a great source for seeing ideas that are still “in progress.” Normally, conferences have a strict vetting process for papers. The presented work is usually thoroughly vetted and mostly complete. There is little opportunity to drastically change the direction of such work. However, the SIAM meetings support people getting together in mini-symposiums to discuss work that is still in progress and, in some cases, not fully baked. This is where there is a real exchange of wild ideas and new ways of thinking about solving problems. I think there is a role for both types of meetings, but I definitely see more of the pulse of the community in the SIAM mini-symposiums.

I also find that journals that are targeted more at domain scientists have a lot of information about future directions of the community. You quickly find out what is important and why. More importantly, you learn the vocabulary to actually communicate with scientists about their work.

insideHPC: What motivates you in your professional career?

Shalf: Scientists like to do things because they are interesting. Engineers like to do things that are “useful”. I’m an engineer who likes to hang out with scientists to get a bit of both the “interesting” and the “useful.” If I can do things that are both interesting AND useful, I’m very happy.

There is a recent article in Science Magazine (Vol 329, July 16, 2010) entitled “learning pays off.” It described research showing that people who went into science because they were excited by the science, and not simply because they were good at math, were the most likely to continue in the field. This makes total sense to me. I’m just a science geek. I’m not a scientist or physicist by training, but I love to read Science and Nature magazines from cover to cover whenever a new one arrives. I just love to learn new things and explore. Supercomputing is a veritable smorgasbord of ideas and different science groups. The deeper I dive into my professional career, the more I learn and the more people I meet who have radically different perspectives on computing and on science. It’s so much fun to learn something new every day.

It’s also fun trying to be the man-in-the-middle to communicate between people with disparate backgrounds. Because of my diverse interests, my career has run the gamut from Electrical Engineering and computer hardware design, to code development for a scientific applications team, to computer science, and then back again to hardware design. I remember the perspective I had when I was in each of those different roles (when in EE, I thought the scientists were all just bad programmers, and when working for the apps group, I thought the hardware architects were just idiots who would not listen to the needs of the application developers). All of the interesting things happening in supercomputing are happening in the communication between these fields, and I love to be there, right in the middle. This is why co-design has become such a popular term: it’s where all of the action is today.

insideHPC: Are there any people who have been an influence on you during your years in this community?

Shalf: Many, many people. Nick Liberante, an English professor with uncompromising standards for excellence, taught me how to organize thoughts for writing, and the importance of memorization to facilitate that organization process. Ron Kriz taught me the value of persistence, collaboration across multiple disciplines, and to be undaunted by the challenges of new and rapidly evolving technologies. Ed Seidel has had a huge influence on my career by launching me into the HPC business and teaching me how far you can push yourself if you set seemingly unrealistic stretch goals. Ed and Larry Smarr, Maxine Brown, and Tom DeFanti showed the power of proving that the “seemingly impossible” is within our grasp through ambitious demonstrations like the SC95 IWAY. Donna Cox taught me the magic that can result from bringing both scientists and artists together (seemingly disparate groups) to create powerful communication media. Tom DeFanti taught me the importance of articulating what I want to do (either by writing, or presenting to others) by saying “It’s not a waste of time if you have the right attitude. You are writing the future.” He also showed me how we can reinvent ourselves to take on new challenges as he went from CAVE VR display environments and jumped into high performance international optical networking.

insideHPC: What type of ‘volunteer’ activities are you involved in, both professional activities within the community and personal volunteer activities?

Shalf: I would say I’ve gotten way overcommitted to SC-related volunteer activities. In the past, I’ve spent some time helping with the LBL summer high-school students program. This year, I’ve gotten completely immersed in participating in the program committees and organization of HPC-related conferences. I’m on the program committees for IPDPS, ISC, ICS, and SC. It’s fun to participate in the organization and planning of so many different conferences, but it’s a lot of work. I would like to get back to working with the high school and undergraduate students to get them excited about this field.

insideHPC: How can we both attract the next generation of HPC professionals into the community, and provide them with the experience-based training that they will need to be successful?

Shalf: Well, first we should call it “supercomputing” rather than HPC if we want to attract new talent. It sounds interesting when a high-school kid says they want to work on supercomputers. If they say they want to work on High Performance Computing, they’ll have their underwear pulled up around their ears by the class bully in no time.

I ended up in this field because of the patience of a few physics professors at RMC when I was growing up. There is no degree in supercomputing (or HPC) because the field is fundamentally interdisciplinary. So you have to catch kids early to get them excited about the breadth of experiences that supercomputing can offer.

Closing Comments from John Shalf

We are back in a transition phase for our entire hardware/software ecosystem that is much like the transition we made to MPI. Times of disruption are also great times of opportunity for getting new ideas put into practice. The world is wide open with possibilities. It’s a great time to be involved in computing research.