IBM today announced that it is collaborating with the U.S. Air Force Research Laboratory (AFRL) on a first-of-a-kind brain-inspired supercomputing system powered by a 64-chip array of the IBM TrueNorth Neurosynaptic System. The scalable platform IBM is building for AFRL will feature an end-to-end software ecosystem designed to enable deep neural-network learning and information discovery. The system’s advanced pattern recognition and sensory processing power will be the equivalent of 64 million neurons and 16 billion synapses, while the processor component will consume the energy equivalent of a dim light bulb: a mere 10 watts.

“AFRL was the earliest adopter of TrueNorth for converting data into decisions,” said Daniel S. Goddard, director, information directorate, U.S. Air Force Research Lab. “The new neurosynaptic system will be used to enable new computing capabilities important to AFRL’s mission to explore, prototype and demonstrate high-impact, game-changing technologies that enable the Air Force and the nation to maintain its superior technical advantage.”

IBM researchers believe the brain-inspired, neural network design of TrueNorth will be far more efficient for pattern recognition and integrated sensory processing than systems powered by conventional chips. AFRL is investigating applications of the system in embedded, mobile, autonomous settings where, today, size, weight and power (SWaP) are key limiting factors.

The IBM TrueNorth Neurosynaptic System can efficiently convert data (such as images, video, audio and text) from multiple, distributed sensors into symbols in real time. AFRL will combine this “right-brain” perception capability of the system with the “left-brain” symbol processing capabilities of conventional computer systems. The large scale of the system will enable both “data parallelism,” where multiple data sources are run in parallel against the same neural network, and “model parallelism,” where independent neural networks form an ensemble that is run in parallel on the same data.
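The two modes of parallelism described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the "networks" are hypothetical threshold functions, threads stand in for chips, and none of the names below belong to the TrueNorth software ecosystem.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def data_parallel(network, sources):
    """Data parallelism: one network, many data sources, run in parallel."""
    with ThreadPoolExecutor() as pool:
        # pool.map returns results in the same order as the inputs.
        return list(pool.map(network, sources))


def model_parallel(networks, sample):
    """Model parallelism: an ensemble of independent networks on the same data."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda net: net(sample), networks))


def majority_vote(labels):
    """Combine ensemble outputs by taking the most common label."""
    return Counter(labels).most_common(1)[0][0]


def make_threshold_network(threshold):
    """Hypothetical stand-in for a trained network: labels a reading by threshold."""
    return lambda reading: "target" if reading >= threshold else "clutter"


if __name__ == "__main__":
    # Same network applied to three (made-up) sensor readings.
    print(data_parallel(make_threshold_network(0.5), [0.1, 0.7, 0.9]))

    # Three independent networks applied to one reading, then combined.
    ensemble = [make_threshold_network(t) for t in (0.3, 0.5, 0.8)]
    votes = model_parallel(ensemble, 0.6)
    print(votes, majority_vote(votes))
```

In the first call the work is split by input; in the second it is split by model, and the ensemble's outputs are merged with a simple vote, which is one of several possible combination rules.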

“The evolution of the IBM TrueNorth Neurosynaptic System is a solid proof point in our quest to lead the industry in AI hardware innovation,” said Dharmendra S. Modha, IBM Fellow, chief scientist, brain-inspired computing, IBM Research – Almaden. “Over the last six years, IBM has expanded the number of neurons per system from 256 to more than 64 million – an 800 percent annual increase over six years.”
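The growth figure in the quote can be checked with a short compound-growth calculation. The sketch below assumes six full compounding periods and uses exactly 64 million as the end point (the text says "more than 64 million"); the function name is illustrative. Under those assumptions the neuron count multiplies by a factor of just under 8 each year.

```python
def annual_growth_factor(start, end, years):
    """Constant yearly multiplier that takes `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years)


# Figures from the quote: 256 neurons growing to 64 million over six years.
factor = annual_growth_factor(256, 64_000_000, 6)

if __name__ == "__main__":
    print(f"Yearly multiplier: about {factor:.2f}x")
```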

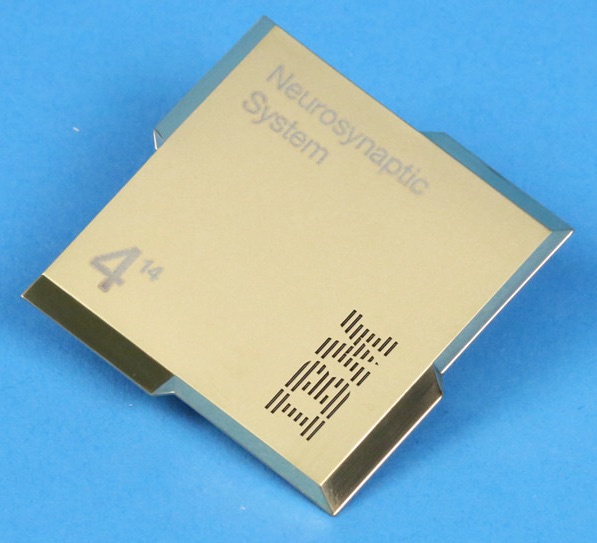

The system fits in a 4U-high (7”) space in a standard server rack, and eight such systems will enable the unprecedented scale of 512 million neurons per rack. A single processor in the system consists of 5.4 billion transistors organized into 4,096 neural cores, creating an array of 1 million digital neurons that communicate with one another via 256 million electrical synapses. On the CIFAR-100 dataset, TrueNorth achieves near state-of-the-art accuracy while running at >1,500 frames/s and using 200 mW (effectively >7,000 frames/s per watt) – orders of magnitude lower energy than a conventional computer running inference on the same neural network.
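The scale and efficiency figures above follow from simple multiplication, as the sketch below shows. The per-core numbers (256 neurons per core, each core wired as a 256 x 256 synaptic crossbar) are not stated in this text; they are assumptions chosen to be consistent with the per-chip totals, and the rounded "1 million", "256 million", "64 million" and "16 billion" figures correspond to binary multiples.

```python
CORES_PER_CHIP = 4096          # from the text
NEURONS_PER_CORE = 256         # assumption: ~1 million neurons / 4,096 cores
SYNAPSES_PER_CORE = 256 * 256  # assumption: 256 x 256 crossbar per core
CHIPS_PER_SYSTEM = 64          # from the text
SYSTEMS_PER_RACK = 8           # from the text


def chip_scale():
    """Return (neurons, synapses) for a single chip."""
    return CORES_PER_CHIP * NEURONS_PER_CORE, CORES_PER_CHIP * SYNAPSES_PER_CORE


def system_scale(chips=CHIPS_PER_SYSTEM):
    """Return (neurons, synapses) for a multi-chip system."""
    neurons, synapses = chip_scale()
    return chips * neurons, chips * synapses


def rack_neurons(systems=SYSTEMS_PER_RACK):
    """Return the neuron count for a rack of identical systems."""
    return systems * system_scale()[0]


def frames_per_watt(frames_per_second, milliwatts):
    """Throughput per watt, given power in milliwatts."""
    return frames_per_second * 1000 / milliwatts


if __name__ == "__main__":
    print("chip:  ", chip_scale())
    print("system:", system_scale())
    print("rack neurons:", rack_neurons())
    print("frames/s per watt at 1,500 frames/s and 200 mW:", frames_per_watt(1500, 200))
```

With the lower bounds quoted in the text (1,500 frames/s at 200 mW), the ratio comes to 7,500 frames/s per watt, consistent with the ">7,000" figure.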

The IBM TrueNorth Neurosynaptic System was originally developed under the auspices of the Defense Advanced Research Projects Agency’s (DARPA) Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) program in collaboration with Cornell University. In 2016, the TrueNorth team received the inaugural Misha Mahowald Prize for Neuromorphic Engineering, and TrueNorth was accepted into the Computer History Museum. Research with TrueNorth is currently being performed by more than 40 universities, government labs, and industrial partners on five continents.