Navigating through the maze of hardware and software options presents a serious challenge to developing cost-effective real-time vision applications. There are a number of open source libraries and APIs that already provide functionality for deep learning over neural networks on a variety of GPU and CPU platforms. But this only complicates the task for AI developers by requiring them to know far more than they need to about each platform’s details in order to create efficient vision-based applications.

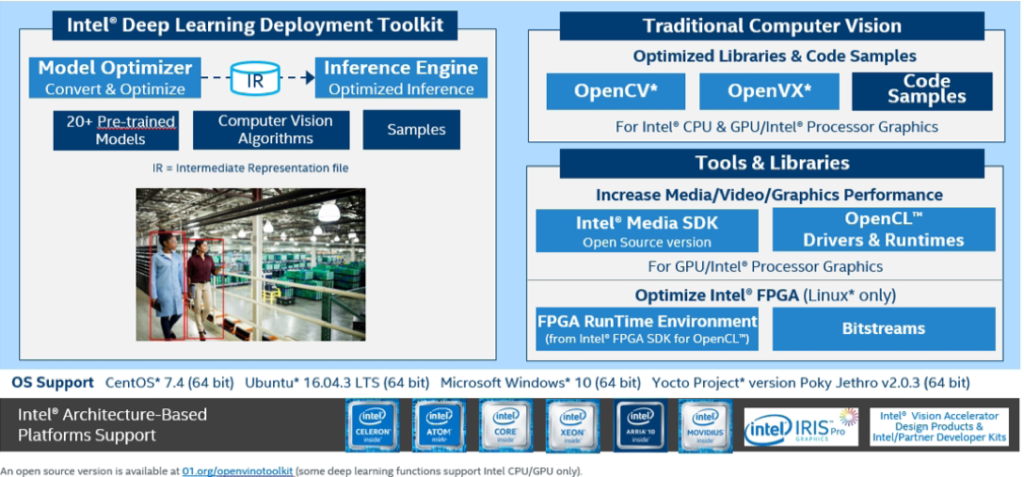

To make things easier, Intel® released OpenVINO™, a free, downloadable toolkit optimized for Intel hardware. By providing a common API, Intel has abstracted away most multi-platform programming issues. The toolkit supports heterogeneous execution across CPUs and computer vision accelerators, including GPUs, Intel® Movidius™ hardware, and FPGAs.

OpenVINO stands for Open Visual Inference and Neural Network Optimization. Applications that emulate human vision rely on neural networks for analyzing imagery. OpenVINO provides optimized neural network inference that is independent of the underlying hardware.

Originally called the Intel Computer Vision SDK, the toolkit gained so much new functionality that it needed a new name. OpenVINO now includes some new APIs: the Deep Learning Deployment Toolkit, a common deep learning inference toolkit, and optimized functions for OpenCV* and OpenVX*, with support for the ONNX*, TensorFlow*, MXNet*, and Caffe* frameworks.

This is a single toolkit that the data scientist and AI software developer can use for quickly developing high-performance applications that employ neural network inference and deep learning to emulate human vision over various platforms.

Highly optimized versions of OpenCV and OpenVX ensure the best possible performance. These complementary libraries are most often used for image processing on CPUs and GPUs, in applications such as real-time video analysis and machine learning. The toolkit also includes a library of functions, pre-optimized kernels, and optimized calls for both OpenCV and OpenVX.

Image courtesy of Intel

The Deep Learning Deployment Toolkit, a major part of OpenVINO, includes the Model Optimizer and the Inference Engine. It’s the Model Optimizer that provides a major performance boost. The Model Optimizer imports trained models from various popular frameworks and converts them to a unified intermediate representation (IR). Along the way, it converts data types to match the underlying hardware, and further optimizes neural network topologies through node merging, fusion, and quantization.
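To give a feel for what node fusion means, here is a minimal sketch in plain Python. It is not the Model Optimizer's code or API; the function names (`linear`, `batch_norm`, `fuse_linear_bn`) are hypothetical and exist only for illustration. The sketch folds an inference-time batch normalization step into the fully connected layer that precedes it, so the two nodes collapse into one with identical output.

```python
import math


def linear(x, weights, bias):
    """Fully connected layer: y[i] = sum_j weights[i][j] * x[j] + bias[i]."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch normalization, applied per output channel."""
    return [g * (v - m) / math.sqrt(s + eps) + b
            for v, g, b, m, s in zip(y, gamma, beta, mean, var)]


def fuse_linear_bn(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold the batch-norm scale and shift into the linear layer's parameters.

    Since BN(Wx + b) = scale * (Wx) + scale * (b - mean) + beta,
    the fused layer uses W' = scale * W and b' = scale * (b - mean) + beta.
    """
    fused_weights, fused_bias = [], []
    for row, b, g, bt, m, s in zip(weights, bias, gamma, beta, mean, var):
        scale = g / math.sqrt(s + eps)
        fused_weights.append([scale * w for w in row])
        fused_bias.append(scale * (b - m) + bt)
    return fused_weights, fused_bias


# Illustrative (made-up) parameters for a 2-input, 2-output layer.
W = [[1.0, 2.0], [0.5, -1.0]]
B = [0.1, -0.2]
GAMMA, BETA = [1.5, 0.8], [0.0, 0.3]
MEAN, VAR = [0.2, -0.1], [1.0, 4.0]
X = [3.0, -2.0]

two_nodes = batch_norm(linear(X, W, B), GAMMA, BETA, MEAN, VAR)
fused_w, fused_b = fuse_linear_bn(W, B, GAMMA, BETA, MEAN, VAR)
one_node = linear(X, fused_w, fused_b)
```

Both `two_nodes` and `one_node` hold the same values up to floating-point rounding, but the fused version does the work in a single pass. A real optimizer applies the same idea to convolution layers and to whole graphs.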

The Deep Learning Inference Engine gives AI developers a simple, unified API for inference with heterogeneous support across various platforms (CPU, CPU with graphics, FPGA, and VPU). It does this by using a plugin for each hardware type, loaded dynamically as needed. This way the Inference Engine can deliver the best performance without requiring you to write separate code paths for each platform.
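The plugin idea can be sketched in a few lines of Python. This is an illustration of the general pattern only, not the Inference Engine's actual classes or method names; `Plugin`, `CpuPlugin`, `GpuPlugin`, and `InferenceFacade` are hypothetical, and the "inference" is a stand-in ReLU so the example stays self-contained.

```python
from typing import Callable, Dict, List


class Plugin:
    """Common interface that every (hypothetical) device plugin implements."""

    def infer(self, inputs: List[float]) -> List[float]:
        raise NotImplementedError


class CpuPlugin(Plugin):
    def infer(self, inputs: List[float]) -> List[float]:
        # Stand-in workload: a ReLU activation instead of a real network.
        return [max(0.0, v) for v in inputs]


class GpuPlugin(Plugin):
    def infer(self, inputs: List[float]) -> List[float]:
        # Same result as the CPU plugin; a real plugin would target the GPU.
        return [max(0.0, v) for v in inputs]


class InferenceFacade:
    """One API for all devices; plugins are created only on first use."""

    def __init__(self, factories: Dict[str, Callable[[], Plugin]]):
        self._factories = dict(factories)
        self._loaded: Dict[str, Plugin] = {}

    def _plugin(self, device: str) -> Plugin:
        if device not in self._loaded:
            if device not in self._factories:
                raise ValueError(f"No plugin registered for device {device!r}")
            # Lazy loading: instantiate the plugin the first time it is needed.
            self._loaded[device] = self._factories[device]()
        return self._loaded[device]

    def infer(self, device: str, inputs: List[float]) -> List[float]:
        return self._plugin(device).infer(inputs)

    def loaded_devices(self) -> List[str]:
        return sorted(self._loaded)


engine = InferenceFacade({"CPU": CpuPlugin, "GPU": GpuPlugin})
cpu_result = engine.infer("CPU", [-1.0, 2.0, 0.5])
```

The calling code is the same whichever device string is passed in, and only the plugins that are actually requested get instantiated. After the call above, `engine.loaded_devices()` lists only `"CPU"`.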

The latest (2018 R5) release of OpenVINO extends neural network support with a preview of 3D convolution-based networks, which could open up new application areas beyond computer vision. Also introduced is a preview of the Neural Network Builder API, which provides a flexible way to create graphs from simple API calls. This lets you use the Inference Engine without needing to load intermediate representation (IR) files.

Also new in this release are parallelization techniques for multicore platforms that deliver a significant boost in CPU performance, and three additional optimized pre-trained models, bringing the total in the toolkit to 30. These new models provide text detection within indoor and outdoor scenes, as well as single-image super-resolution networks for enhancing input images.

The best thing is that the entire OpenVINO Toolkit is available as a free download.
