A new paper from IIT Hyderabad in India surveys applications and architectural-optimizations of Micron’s Automata Processor. Now accepted in the Journal of Systems Architecture, the survey by Sparsh Mittal reviews nearly 60 papers.

A new paper from IIT Hyderabad in India surveys applications and architectural-optimizations of Micron’s Automata Processor. Now accepted in the Journal of Systems Architecture, the survey by Sparsh Mittal reviews nearly 60 papers.

Problems from a wide variety of application domains can be modeled as “nondeterministic finite automaton” (NFA) and hence, efficient execution of NFAs can improve the performance of several key applications. Since traditional architectures, such as CPU and GPU are not inherently suited for executing NFAs, special-purpose architectures are required for accelerating them. Micron’s automata processor (AP) exploits massively parallel in-memory processing capability of DRAM for executing NFAs and hence, it can provide orders of magnitude performance improvement compared to traditional architectures. This paper presents a survey of techniques that propose architectural optimizations to AP and use it for accelerating problems from various application domains such as bioinformatics, data-mining, network security, natural language, high-energy physics, etc.”

On conventional processors such as CPUs and GPUs, NFA execution is highly inefficient. This happens because the von-Neumann architecture of conventional processors incurs high data-movement overheads due to irregular memory accesses arising from the transition table lookups. Further, the multi-core processors do not run a sufficient number of threads to identify the patterns present, and the vector processors get bottlenecked by the branch divergence due to the if-else style code of NFA execution.

Similarly, the solutions based on ternary content addressable memory (TCAM) do not scale well and those based on ASIC do not generalize or get bottlenecked by the memory bandwidth. While FPGAs are effective in accelerating NFAs, even the high-end FPGAs can fit only a few hundred NFAs. This necessitates partitioning of large automata across multiple FPGAs.

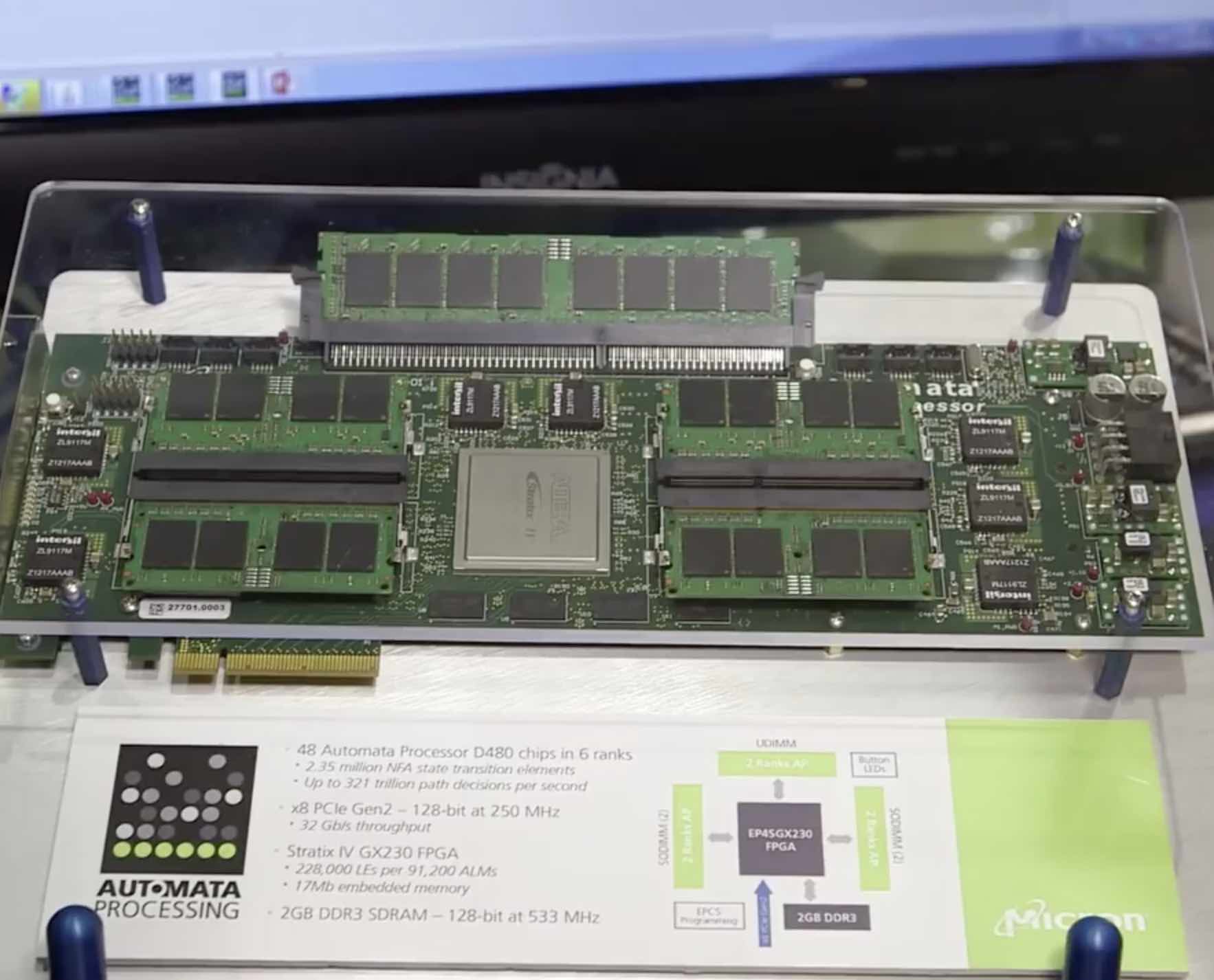

Micron’s Automata Processor is a novel accelerator which avoids data-transfer overheads in NFA-execution by handling NFA transitions entirely within memory. AP implements NFA states and matching rules using reconfigurable elements and next-state activation using a hierarchical reconfigurable routing scheme which has high fan-in and fan-out. By virtue of this, AP can concurrently match an input pattern against many possible patterns, offering massive parallelism. However, AP architecture also has limitations. For example, it has limited hardware resources, high reconfiguration latency and no support for floating-point numbers or arithmetic computations. Hence, effective utilization of AP presents challenges. Several recent works propose techniques to address these challenges.

In this video from SC14, Paul Dlugosch from Micron describes the new Automata processor for Big Data.