The Compute Express Link (CXL) Consortium is chasing a utopian tech dream, now being realized in a growing number of high-performance servers: a high-speed, open-interface interconnect that lets the heterogeneous Babel of CPUs and accelerators talk to each other and all get along. It's a critically important capability for AI, machine learning and HPC workloads that leverage multiple computing architectures.

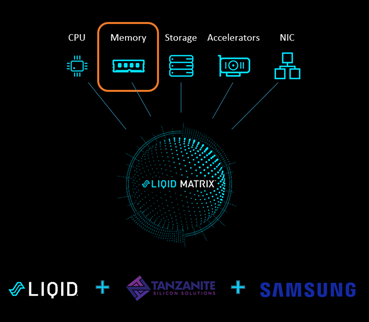

For composable computing companies such as Liqid, whose software disaggregates data center hardware into pools of compute elements that can be drawn upon as workloads require, a polyglot interconnect like CXL is heaven-sent. Liqid has now announced a collaboration with memory technology provider Samsung and memory pooling technology company Tanzanite Silicon Solutions to demonstrate composable memory via the CXL 2.0 protocol. The companies said they are demonstrating the capability today at Dell Technologies World.

“Delivering high-speed CPU-to-memory connections for the first time, CXL decouples DRAM from the CPU, the final hardware element to be disaggregated,” Liqid said. “With native support for CXL, Liqid Matrix composable disaggregated infrastructure (CDI) software can now pool and compose memory in tandem with GPU, NVMe, persistent memory, FPGA, and other accelerator devices. By making DRAM a composable resource over CXL fabrics, Liqid, Samsung, and Tanzanite showcase the efficiency and flexibility necessary to meet the changing infrastructure demands being driven by rapid advancements in artificial intelligence and machine learning (AI+ML), edge computing and hybrid cloud environments.”

Samsung has been collaborating with data center, server, and chipset manufacturers to develop CXL interface technology since the CXL Consortium was formed in 2019. Its recently unveiled DDR5-based CXL module is the industry’s first memory expansion module supporting the interface, according to the company. “CXL memory expansion technology scales memory capacity and bandwidth beyond what is available commercially, enabling organizations to meet the demands of much larger, more complex workloads associated with AI and other evolving data center applications,” the company said.

“With new DDR5 based CXL memory modules, Samsung is helping to lay the foundation for a high-bandwidth, low-latency memory ecosystem designed to support and advance the modern computing era in which AI+ML is integrated into more day-to-day data center operations,” said Cheolmin Park, vice president of memory global sales & marketing at Samsung Electronics. “We are growing the CXL ecosystem with Liqid and others to unlock the unprecedented infrastructure performance required to achieve breakthroughs in high-performance computing for our customers and the industry as a whole.”

Tanzanite Silicon Solutions' architecture and the design of its "Smart Logic Interface Connector" (SLICTZ) are built to enable scaling and sharing of memory and compute in a low-latency pool within and across server racks. The result is a scalable architecture for exascale-level memory capacity and compute acceleration, the company said.

At Dell Technologies World today, the three companies are demonstrating composable DRAM in real-world scenarios. The lab configuration consists of two Archer City systems based on Intel Xeon Scalable processors (codenamed Sapphire Rapids), along with Tanzanite's SLICTZ SoC implemented in an Intel Agilex FPGA. The setup demonstrates clustered/tiered memory allocated across two hosts and orchestrated using Liqid Matrix CDI software.
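
To make the idea of composing DRAM across hosts a little more concrete, here is a minimal Python sketch of the bookkeeping involved: a shared pool from which capacity is attached to hosts and later returned for reuse. It is a toy model under stated assumptions. The `MemoryPool` class, its `compose`/`release` methods, the host names and the GiB figures are all hypothetical illustrations, not Liqid Matrix's interface or the configuration used in the demonstration, and it ignores everything below the accounting layer (CXL device enumeration, address mapping, memory tiering).

```python
from dataclasses import dataclass, field


class PoolExhaustedError(Exception):
    """Raised when a request exceeds the pool's unallocated capacity."""


@dataclass
class MemoryPool:
    """Toy model of a DRAM pool shared by several hosts (hypothetical, not a real API)."""

    capacity_gib: int
    # Maps a host name to the GiB of pooled memory currently composed to it.
    allocations: dict[str, int] = field(default_factory=dict)

    def free_gib(self) -> int:
        """Capacity not yet composed to any host."""
        return self.capacity_gib - sum(self.allocations.values())

    def compose(self, host: str, size_gib: int) -> None:
        """Attach size_gib of pooled memory to host, if enough is free."""
        if size_gib <= 0:
            raise ValueError("size_gib must be positive")
        if size_gib > self.free_gib():
            raise PoolExhaustedError(
                f"requested {size_gib} GiB, only {self.free_gib()} GiB free"
            )
        self.allocations[host] = self.allocations.get(host, 0) + size_gib

    def release(self, host: str, size_gib: int) -> None:
        """Return size_gib from host to the pool so another host can use it."""
        held = self.allocations.get(host, 0)
        if size_gib <= 0 or size_gib > held:
            raise ValueError(f"{host} holds {held} GiB; cannot release {size_gib} GiB")
        remaining = held - size_gib
        if remaining:
            self.allocations[host] = remaining
        else:
            del self.allocations[host]


if __name__ == "__main__":
    # Illustrative numbers only; not the capacities used in the demonstration.
    pool = MemoryPool(capacity_gib=256)
    pool.compose("host-a", 128)
    pool.compose("host-b", 64)
    print(pool.free_gib())  # 64
    pool.release("host-a", 128)  # host-a's workload finishes
    pool.compose("host-b", 192)  # host-b grows into the freed capacity
    print(pool.allocations)  # {'host-b': 256}
```

The point of the model is that capacity released by one host immediately becomes available to another, which is the flexibility the companies describe; in a real CXL deployment, that reassignment also involves hardware and operating-system steps the sketch does not attempt to represent.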

“With the breakthrough performance provided by CXL, the industry will be better positioned to support and make sense of the massive wave of AI innovation predicted over just the next few years, and we’re excited to collaborate with Samsung and Tanzanite to illustrate the power of this new protocol,” said Ben Bolles, executive director, product management, Liqid. “As our demonstration illustrates, by decoupling DRAM from the CPU, CXL enables us to achieve these milestone results in performance, infrastructure flexibility, and more sustainable resource efficiency, preparing organizations to rise to the architectural challenges that industries face as AI evolves at the speed of data.”

According to Gartner, enterprise use of AI has tripled in the past two years, and by 2025 AI will be the top driver of infrastructure decision-making as the AI market matures, resulting in 10x growth in compute requirements over that same period.

CXL is being developed to help address demand for expansive compute performance, efficiency, and sustainability, "allowing previously static DRAM resources to be shared for exponentially higher performance, reduced software stack complexity, and lower overall system cost, permitting users to focus on accelerating time to results for target workloads as opposed to maintaining physical hardware."

“With a wave of CXL-supported servers becoming commercially available, composability incorporated into any server refresh will both enable existing resources to continue to be utilized, while also deploying DRAM as a shared, bare-metal resource that can be utilized in tandem with accelerator technologies already available to Liqid Matrix CDI software,” Liqid said.