AMD Chair and CEO Lisa Su this morning in San Francisco

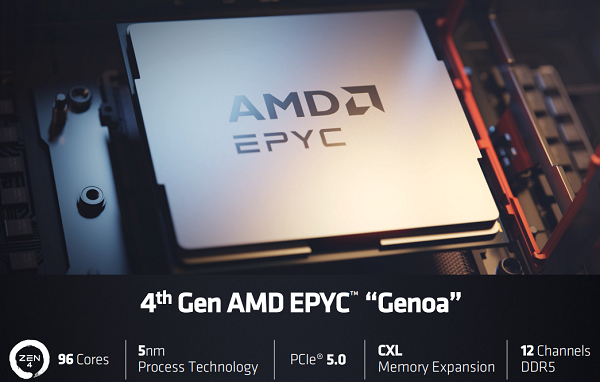

At a product unveiling event this morning in San Francisco, AMD announced updates to its 4th Gen EPYC “Genoa” 5nm data center CPUs and released additional details on its MI300X GPU accelerator for generative AI.

The event included keynote remarks from AMD Chair and CEO Lisa Su, who said AI represents the most significant strategic opportunity for the company, a market AMD expects to grow from $30 billion this year to $150 billion by 2027, a CAGR of 50 percent. Su and several of her senior managers spoke at length this morning about the exploding AI market but did not mention NVIDIA, which dominates the GPU market, even though it’s clear AMD is mounting a major effort to grab GPU market share.
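As a quick sanity check on the growth figure, the short Python sketch below recomputes the compound annual growth rate from the two endpoints Su cited. The four-year compounding window (this year through 2027) is our assumption, and the function name `cagr` is ours, not AMD's.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints cited in the keynote: $30 billion growing to $150 billion.
# Assumption: four compounding years between this year and 2027.
rate = cagr(30e9, 150e9, 4)
print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption the implied rate comes out just under 50 percent, in line with the figure AMD quoted.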

On the CPU side, AMD:

- Introduced 4th Gen AMD EPYC 97X4 processors, codenamed “Bergamo,” with 128 Zen 4c cores per socket. AMD said the chips offer the greatest vCPU density and performance for cloud applications, provide up to 2.7x better energy efficiency and support up to 3x more containers per server. In her keynote, Su said Bergamo is the company’s first chip designed specifically for cloud applications.

- Introduced the 4th Gen EPYC processors with AMD 3D V-Cache technology for technical computing. With up to 96 Zen 4 cores and 1GB+ of L3 cache, the processors are designed to speed up product development “by delivering up to double the design jobs per day while using fewer servers and optimizing energy efficiency,” the company said.

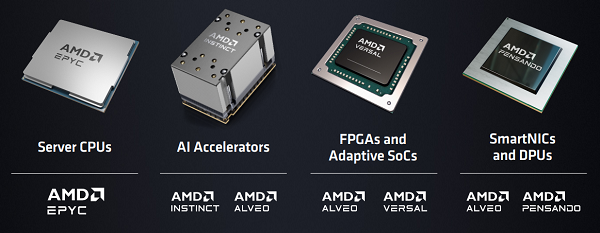

AMD also made announcements around its AI platform strategy, which delivers a cloud-to-edge-to-endpoint portfolio of hardware products, along with industry software collaboration, designed for the development of scalable and “pervasive AI.”

For generative AI, AMD shared details about the Instinct MI300 Series GPU accelerator family, including the introduction of the AMD Instinct MI300X, which is based on the AMD CDNA 3 accelerator architecture and supports up to 192 GB of HBM3 memory “to provide the compute and memory efficiency needed” for large language model inference and generative AI workloads. The company said that with the new accelerator, large language models such as Falcon-40B, a 40 billion parameter model, can fit on a single MI300X.
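To illustrate why a 40 billion parameter model can fit on one accelerator, the rough Python sketch below estimates the memory needed for model weights alone at a few common numeric precisions and compares it with the 192 GB of HBM3 AMD cited. The precisions and byte sizes are our assumptions, since AMD did not say which format it had in mind, and the estimate ignores activations, KV cache and runtime overhead, so real-world headroom would be smaller.

```python
HBM_CAPACITY_GB = 192   # MI300X memory capacity cited by AMD
NUM_PARAMS = 40e9       # Falcon-40B: 40 billion parameters

# Assumed storage formats (bytes per parameter); not specified by AMD.
BYTES_PER_PARAM = {"FP32": 4, "FP16/BF16": 2, "INT8": 1}


def weights_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory for model weights only, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


for fmt, nbytes in BYTES_PER_PARAM.items():
    needed = weights_gb(NUM_PARAMS, nbytes)
    verdict = "fits" if needed <= HBM_CAPACITY_GB else "does not fit"
    print(f"{fmt}: {needed:.0f} GB of weights, {verdict} in {HBM_CAPACITY_GB} GB")
```

At 16-bit precision the weights come to roughly 80 GB, well under the 192 GB ceiling, which is consistent with AMD's single-accelerator claim.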

AMD also introduced the AMD Infinity Architecture Platform, which brings together eight MI300X accelerators into an industry-standard design for generative AI inference and training. AMD said MI300X is sampling to customers starting in Q3.

AMD also announced that the AMD Instinct MI300A, which the company said is the first APU accelerator for HPC and AI workloads, is now sampling to customers.

AMD also discussed the next generation of its DPU codenamed “Giglio,” scheduled to be available by the end of 2023.

AMD also announced the AMD Pensando Software-in-Silicon Developer Kit (SSDK), which the company said enables rapid development or migration of services for deployment on the AMD Pensando P4 programmable DPU, in coordination with the existing features implemented on the AMD Pensando platform.