By Timothy Prickett Morgan

Big Blue has been talking about the Power7-based “Blue Waters” supercomputer nodes for so long that you might think they’re already available. But although IBM gave us a glimpse of the Power 775 machines way back in November 2009, they actually won’t start shipping commercially until next month – August 26, to be exact.

The feeds and speeds of the Power 775 server remain essentially what we told you nearly two years ago. Today’s news is that the Power 775 is nearly ready for sale, and the clock speed on the Power7 processors and system prices have – finally – been announced.

Formerly known as the Power7 IH node, the Power 775 is not a general-purpose server node, but rather an ultra-dense, water-cooled rack server that pushes density and network bandwidth to extremes. Speaking of density, there’s not enough room between the components on the Power 775 server node – the processor units, the main memory, and the I/O units – to slip a credit card.

The brains of the Power 775 server are a multi-chip module (MCM) that crams four Power7 processors, each with eight cores and four threads per core, onto a single piece of ceramic substrate with a 5,336-pin interconnect. The chips, we now learn, run at 3.84GHz, right smack dab in the middle of the 3.5GHz to 4GHz range that IBM was anticipating. The chip package burns at 800 watts, which is why it needs water cooling.

The Power 775 node is 30 inches wide, 6 feet deep and 3.5 inches high (2U) – not exactly a small piece of iron. That node can have up to eight of the Power7 MCMs, for a total of 256 cores, on a single, massive motherboard. Each MCM has a bank of DDR3 memory associated with it, and these have big buffers to improve bandwidth and performance into and out of the processors.

Each bank has 16 memory slots per MCM, and IBM’s plan two years ago was to use 8GB sticks – but now that we are in 2011, the company has jacked up the capacity to 16GB per stick, doubling the memory to 2TB per node.
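For readers who like to check the math, here is a quick sketch in Python that rebuilds the per-node core, thread, and memory counts from the figures quoted above. The constant names are made up for illustration; the numbers are the ones in this article.

```python
# Per-node totals for the Power 775, using only figures quoted in the article.
CHIPS_PER_MCM = 4      # Power7 processors per multi-chip module
CORES_PER_CHIP = 8
THREADS_PER_CORE = 4
MCMS_PER_NODE = 8
SLOTS_PER_MCM = 16     # memory slots in each MCM's bank
GB_PER_STICK = 16      # the 2011 memory option

cores_per_node = MCMS_PER_NODE * CHIPS_PER_MCM * CORES_PER_CHIP
threads_per_node = cores_per_node * THREADS_PER_CORE
memory_gb_per_node = MCMS_PER_NODE * SLOTS_PER_MCM * GB_PER_STICK

print(f"cores per node:   {cores_per_node}")
print(f"threads per node: {threads_per_node}")
print(f"memory per node:  {memory_gb_per_node} GB ({memory_gb_per_node // 1024} TB)")
```

Eight MCMs of four eight-core chips works out to 256 cores and 1,024 threads per node, and 128 slots of 16GB sticks gives the 2TB quoted above.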

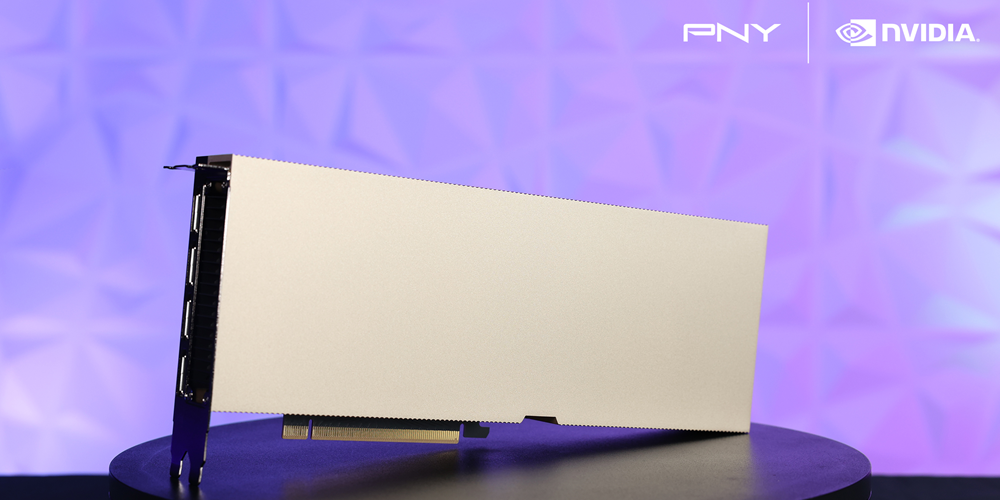

The Power 775 HPC server node

The Power7 IH hub/switch is the nervous system of the Blue Waters machine. It comes in the same 5,336-pin package as the processor MCM and links the eight nodes on the board to each other, to their PCI-Express peripherals, and to other nodes in adjacent racks in the complete HPC system.

Each 30-inch rack can have up to a dozen of these Power 775 servers installed and the Blue Waters interconnect allows for up to 2,048 Power 775 drawers (for a total of 524,288 cores) to be linked together with 24TB of main memory and up to 230TB of disk/flash capacity each. Each Power 775 node delivers 8 teraflops of raw number-crunching power, and a 2,048-node machine that is extremely light on disk capacity would yield over 16 petaflops of raw performance.
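The performance numbers can be roughly reconstructed from the clock speed. The sketch below assumes each Power7 core retires 8 double-precision floating-point operations per clock cycle; that figure is an assumption brought in for illustration and is not stated in this article, so treat the result as a sanity check rather than an official rating.

```python
# Back-of-the-envelope peak performance for one node and a maxed-out system.
CLOCK_GHZ = 3.84          # from the article
CORES_PER_NODE = 256      # 8 MCMs x 4 chips x 8 cores
MAX_NODES = 2048          # interconnect limit quoted in the article
FLOPS_PER_CYCLE = 8       # ASSUMPTION: double-precision flops per core per clock

# cores x GHz x flops/cycle gives gigaflops; divide by 1,000 for teraflops.
node_tflops = CORES_PER_NODE * CLOCK_GHZ * FLOPS_PER_CYCLE / 1000
system_pflops = node_tflops * MAX_NODES / 1000
total_cores = CORES_PER_NODE * MAX_NODES

print(f"peak per node:     {node_tflops:.2f} teraflops")
print(f"peak at 2,048:     {system_pflops:.2f} petaflops")
print(f"cores at 2,048:    {total_cores:,}")
```

Under that assumption a node comes in just shy of 8 teraflops, and 2,048 of them land a little over 16 petaflops, which lines up with the rounded figures above.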

Each Power 775 node has 16 PCI Express 2.0 x16 peripheral slots and one x8 slot. If a customer wants a lot of storage, there are 4U disk drawers that hold up to 384 small form factor drives and that can be linked to the Power 775 nodes. Up to six of these disk drawers can be put into a single rack, and each includes 376 600GB disks and eight 200GB solid state disks.

The Power 775 HPC cluster node requires AIX 7.1 with Service Pack 3 and a bunch of patches, and IBM says that it will eventually support Red Hat’s Enterprise Linux (presumably 6.1 or later). There’s no love for SUSE’s Enterprise Linux Server 11 SP1 on these nodes.

If you want to build a Power 775-based supercomputer, you’d better get going on that government grant proposal. The base Power 775 node costs $560,097 with all of its cores activated and memory installed but not activated. It costs $2,690 to buy a pair of 8GB memory sticks and $5,199 for a pair of 16GB sticks, so that 2TB of memory will run you another $332,736.
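The memory bill follows directly from the slot count. Here is a small sketch, with illustrative names, that prices a fully populated node using the per-pair list prices quoted above and the 128 memory slots (16 per MCM, eight MCMs) described earlier.

```python
# Memory cost for one fully populated Power 775 node at list price.
SLOTS_PER_NODE = 16 * 8            # 16 slots per MCM, 8 MCMs
PAIRS_PER_NODE = SLOTS_PER_NODE // 2

# (stick size in GB, list price per pair in US dollars), from the article
OPTIONS = {"8GB": (8, 2_690), "16GB": (16, 5_199)}

memory_cost = {}
for name, (gb, pair_price) in OPTIONS.items():
    capacity_gb = SLOTS_PER_NODE * gb
    memory_cost[name] = PAIRS_PER_NODE * pair_price
    print(f"{name} sticks: {capacity_gb} GB for ${memory_cost[name]:,}")
```

Sixty-four pairs of 16GB sticks at $5,199 a pair comes to $332,736, matching the figure above; the 8GB option would halve the capacity for $172,160.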

Optical links for linking the hub/switch to the server nodes within the Power 775 and out to other nodes cost around $750 a pop, and you’ll need to buy thousands and thousands of them. That 384-drive drawer will run you $473,755. Toss in the custom rack with base power and cooling for $294,404 and $50,443 for lift tools and ladders for servicing the nodes – a full rack weighs 7,502 pounds – and you’re talking something on the order of $1.9m for a base machine with one server node, one I/O drawer, and one rack.
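To see where the $1.9m comes from, the sketch below adds up the itemized list prices quoted so far and compares them with that rounded total. Treating the whole remainder as optical links is an assumption made here for illustration; the article does not break that part out.

```python
# Tally of the itemized list prices for a one-node, one-drawer, one-rack machine.
BASE_NODE = 560_097
MEMORY_2TB = 332_736
DISK_DRAWER = 473_755
RACK = 294_404
SERVICE_TOOLS = 50_443       # lift tools and ladders
OPTICAL_LINK = 750           # approximate price per link
QUOTED_TOTAL = 1_900_000     # "on the order of $1.9m"

subtotal = BASE_NODE + MEMORY_2TB + DISK_DRAWER + RACK + SERVICE_TOOLS
gap = QUOTED_TOTAL - subtotal

# ASSUMPTION: the whole gap is optical links at roughly $750 apiece.
implied_links = gap / OPTICAL_LINK

print(f"itemized subtotal: ${subtotal:,}")
print(f"left over:         ${gap:,}")
print(f"implied links:     about {implied_links:.0f}")
```

The itemized parts total $1,711,435, leaving a bit under $190,000 for optics and anything else not priced out here.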

A balanced configuration, with eight Power 775 nodes and two disk nodes, will run you about $8.1m per rack and deliver 64 teraflops of raw computing oomph. Scale that up to 1,365 compute nodes and 342 storage nodes – assuming the workload needs a reasonable amount of local disk – and you are at 10.9 petaflops of raw performance, 2.7PB of memory, and 26.3PB of disk/flash storage. That will also run you something around $1.5bn at list price.
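The same arithmetic scales up to the balanced rack and the big cluster. This sketch uses only the node, memory, and disk drawer list prices quoted in this article plus the rounded 8 teraflops per node; racks, optical links, and service gear are deliberately left out, so its totals should come in somewhat under the article's rounded figures.

```python
# Scaling the list prices to a balanced rack and a 1,365-node cluster.
NODE_WITH_2TB = 560_097 + 332_736   # base node plus 2TB of memory
DISK_DRAWER = 473_755
TFLOPS_PER_NODE = 8                 # rounded figure from the article
TB_PER_NODE = 2

def config(nodes: int, drawers: int) -> dict:
    """Return cost and capacity for a given count of compute nodes and disk drawers."""
    return {
        "cost": nodes * NODE_WITH_2TB + drawers * DISK_DRAWER,
        "tflops": nodes * TFLOPS_PER_NODE,
        "memory_tb": nodes * TB_PER_NODE,
    }

rack = config(nodes=8, drawers=2)
cluster = config(nodes=1365, drawers=342)

print(f"rack:    ${rack['cost']:,} for {rack['tflops']} teraflops")
print(f"cluster: ${cluster['cost']:,} for {cluster['tflops'] / 1000:.2f} petaflops "
      f"and {cluster['memory_tb'] / 1000:.2f} PB of memory")
```

The rack comes out at about $8.1m and the cluster at roughly $1.4bn for nodes and drawers alone, which leaves room for racks and optics on the way to the $1.5bn ballpark.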

Obviously, IBM is not charging list price for this big, bad HPC box. ®

This article originally appeared in The Register.