What happens when your scientific instrument generates more data than you can decipher? Linda Vu writes that Berkeley researchers are designing new strategies for extracting interesting data from massive scientific datasets.


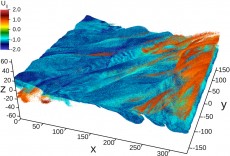

In a recent case, a team ran VPIC, a state-of-the-art plasma physics code, on NERSC's Cray XE6 "Hopper" supercomputer and generated a 3D magnetic reconnection dataset of a trillion particles.

"Although our VPIC runs typically generate two types of data, grid and particle, we never did a whole lot with the particle data because it was really hard to extract information from a trillion particle dataset, and there was no way to sift out the useful information," said Homa Karimabadi, head of the space physics group at UCSD.

Using their new tools, the researchers wrote each 32 TB file to disk in about 20 minutes, a sustained rate of 27 GB per second. With an enhanced version of the FastQuery tool, the team indexed this massive dataset in about 10 minutes, then queried it in three seconds to find interesting features to visualize.
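As a rough sanity check, 32 TB written in 20 minutes works out to about 27 GB per second. The sketch below verifies that arithmetic and then illustrates, on synthetic data, the general idea behind index-accelerated queries: build per-bin bitmaps over a particle attribute once, then answer a range query by OR-ing a few bitmaps instead of rescanning every particle. This is a toy illustration under stated assumptions, not FastQuery's actual API or index format; the attribute ("energy"), the bin edges, and the helpers `build_bitmap_index` and `query_at_least` are hypothetical.

```python
import numpy as np

# Sanity-check the reported write rate: 32 TB in about 20 minutes.
# Assumes decimal units (1 TB = 10**12 bytes, 1 GB = 10**9 bytes).
file_bytes = 32 * 10**12
write_seconds = 20 * 60
rate_gb_per_s = file_bytes / write_seconds / 10**9
print(f"{rate_gb_per_s:.1f} GB/s")


def build_bitmap_index(values, bin_edges):
    """Hypothetical toy index: one boolean bitmap per bin.

    np.digitize assigns bin i to values with bin_edges[i-1] <= v < bin_edges[i],
    so there are len(bin_edges) + 1 bins (including both open-ended ones).
    """
    bin_ids = np.digitize(values, bin_edges)
    return [bin_ids == i for i in range(len(bin_edges) + 1)]


def query_at_least(bitmaps, bin_edges, threshold):
    """Return indices of particles with value >= threshold.

    Simplifying assumption: threshold is exactly one of bin_edges, so OR-ing
    whole bins gives an exact answer without re-reading the raw values.
    """
    t = list(bin_edges).index(threshold)
    hits = np.zeros_like(bitmaps[0])  # all-False boolean bitmap
    for bm in bitmaps[t + 1:]:        # bins whose lower edge is >= threshold
        hits |= bm
    return np.flatnonzero(hits)


# Synthetic stand-in for one particle attribute (not real VPIC data).
rng = np.random.default_rng(0)
energy = rng.exponential(scale=1.0, size=1_000_000)
edges = [0.5, 1.0, 2.0, 4.0]

bitmaps = build_bitmap_index(energy, edges)   # built once, reused per query
selected = query_at_least(bitmaps, edges, 2.0)

# The indexed answer should match a brute-force scan of every particle.
assert np.array_equal(selected, np.flatnonzero(energy >= 2.0))
print(len(selected), "particles with energy >= 2.0")
```

The design choice being illustrated is that the expensive pass (building the bitmaps) happens once, while each later query touches only the bitmaps for the relevant bins; real indexing tools add compression and handle thresholds that fall inside a bin, which this sketch does not.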
