Matt Shipman writes of a new supercomputer being used as a sort of crash-test dummy for testing codes destined for larger systems.

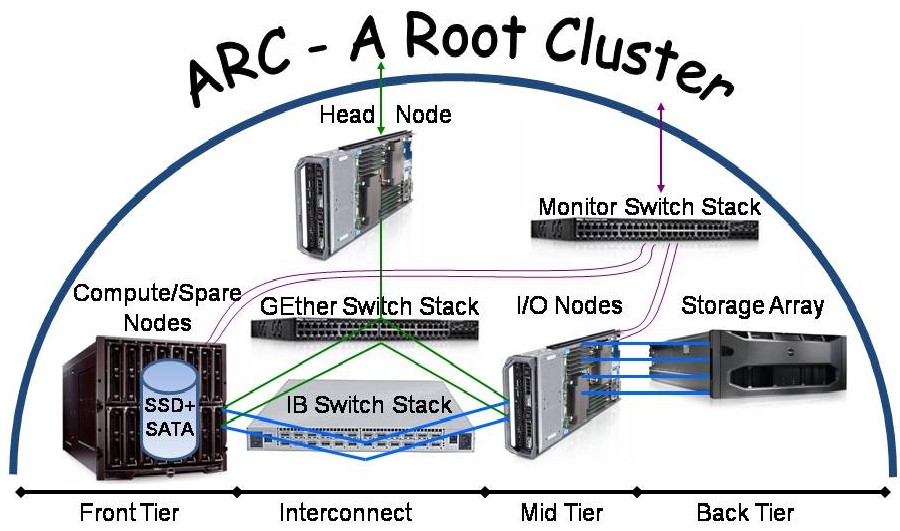

When you’re working with large-scale computational problems, sometimes you’ve got to experiment with the fundamental building blocks of whatever is involved. That means things can break. And when you’re talking about the fastest computers in the world, that would be very expensive. Solution? Build your own high-performance computing (HPC) system – then you can do whatever you want and get the bugs out before deploying at petascale. That’s what Frank Mueller and a team of researchers set out to do when they built ARC, the largest academic HPC system in North Carolina.

The system built by Mueller’s team was completed March 30, and will serve as a sort of crash-test dummy for potential new solutions to the major obstacles facing next-generation HPC system design. “We can do anything we want with it,” Mueller says. “We can experiment with potential solutions to major problems, and we don’t have to worry about delaying work being done on the large-scale systems at other institutions.”

Once Mueller and his team have shown that a solution works on their system, it can be tested on more powerful, high-profile systems such as the Jaguar supercomputer at Oak Ridge. Read the Full Story.