The following guest article from Intel explores how the Intel Scalable System Framework can serve as a high performance computing platform for deep learning and other workloads.

Don’t look now, but artificial intelligence is gaining on us. Deep learning applications have surpassed human performance in strategy games such as Go and poker, and in several real-world tasks, including speech recognition, image recognition, and fraud detection. These high-profile successes are driving strong interest in AI among organizations looking for better ways to analyze complex, high-volume data in fields such as astrophysics, weather research, and financial forecasting.

AI can be used to identify patterns in large data sets and to improve granularity and accuracy in complex computer simulations. Yet integrating neural networks into existing computing environments is challenging, typically requiring specialized and costly hardware infrastructure. The Intel Scalable System Framework (Intel SSF) offers a solution, providing a simpler and more flexible high performance computing (HPC) platform that can efficiently run the full range of HPC workloads, including deep learning and other data analytics applications.

A Common Platform for Data- and Compute-Intensive Workloads

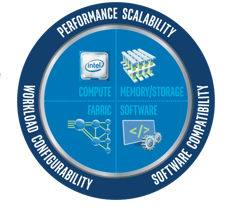

Like many other HPC workloads, deep learning is a tightly coupled application that alternates between compute-intensive number-crunching and high-volume data sharing. It stresses every aspect of an HPC cluster. Intel SSF addresses these demands by delivering balanced and scalable performance at every layer of the HPC solution stack: compute, memory, storage, fabric, and software.

The Intel Scalable System Framework brings hardware and software together to support AI and other HPC-class workloads on simpler, more affordable, and massively scalable clusters.
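To make the "alternates between number-crunching and data sharing" pattern concrete, here is a minimal, hypothetical sketch of bulk-synchronous data-parallel training, simulated in plain Python on a single machine. It assumes a toy linear model (y = w * x) and treats the gradient average as a stand-in for the allreduce a real cluster would perform over its fabric; the function names are illustrative and do not come from any Intel product.

```python
from typing import List, Tuple

# One worker's slice of the training data: (x, y) pairs.
Shard = List[Tuple[float, float]]


def local_gradient(w: float, shard: Shard) -> float:
    """Compute phase: gradient of mean squared error for the model y = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def allreduce_mean(values: List[float]) -> List[float]:
    """Communication phase: every worker receives the mean of all values."""
    mean = sum(values) / len(values)
    return [mean for _ in values]


def train(shards: List[Shard], steps: int = 50, lr: float = 0.05) -> float:
    """Alternate compute and communication for a fixed number of steps."""
    w = 0.0  # each worker holds an identical replica of the weight
    for _ in range(steps):
        grads = [local_gradient(w, shard) for shard in shards]  # number-crunching
        synced = allreduce_mean(grads)  # data sharing across all workers
        w -= lr * synced[0]  # every replica applies the same update
    return w


if __name__ == "__main__":
    # Hypothetical data drawn from y = 3x, split round-robin across 4 workers.
    data = [(k / 10, 3.0 * k / 10) for k in range(1, 41)]
    shards = [data[i::4] for i in range(4)]
    print(f"learned w = {train(shards, steps=200):.3f}")
```

Every step blocks on the averaging phase before any worker can continue, which is why the speed of the interconnect, not just the processors, bounds how fast training runs. Here the learned weight converges to 3.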

Powerful Compute for AI and Other Workloads

Mainstream Intel Xeon processors currently support 97 percent of AI workloads[1]. Intel Xeon Phi processors provide the extreme parallelism needed for the most demanding neural network training requirements, and there’s much more to come. Intel expects to deliver a massive increase in neural network training throughput within the next three years to help fuel more and faster innovation.

Breakthrough Memory and Storage Solutions

Memory and storage speeds have been falling further behind processor performance for decades, forcing complex and costly workarounds. Intel is reversing this trend with disruptive new technologies that are ideal for deep learning and other data-hungry applications. Intel Optane Solid-State Drives represent the first of many offerings. These drives are designed to provide 5 to 8 times the performance of previous-generation SSDs on low-queue-depth workloads[2]. They can be used as ultra-fast storage or as non-volatile extensions to system memory.
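Why single out low queue depth? A rough way to see it is Little's law: throughput equals the number of requests in flight divided by the time each request takes. At a queue depth of 1, a drive can never exceed 1 / latency operations per second, so per-request latency dominates; at high queue depth, many requests overlap and other device limits take over. The sketch below assumes two hypothetical drives whose latencies and IOPS caps are invented for illustration; they are not Intel Optane or any other product's specifications.

```python
def iops(queue_depth: int, latency_s: float, max_iops: float) -> float:
    """Little's law (requests in flight / time per request), capped by a device limit."""
    return min(queue_depth / latency_s, max_iops)


if __name__ == "__main__":
    # Hypothetical drives: the figures are illustrative, not product specifications.
    drives = {
        "low-latency drive": (10e-6, 500_000),   # 10 us per request
        "conventional drive": (50e-6, 400_000),  # 50 us per request
    }
    for qd in (1, 4, 32):
        row = [
            f"{name}: {iops(qd, lat, cap):>9,.0f}"
            for name, (lat, cap) in drives.items()
        ]
        print(f"QD {qd:>2} | " + " | ".join(row))
```

With these made-up numbers the lower-latency drive is 5 times faster at queue depths 1 and 4, but only 1.25 times faster at queue depth 32, where both drives hit their caps. That is the general shape behind quoting SSD gains "at low queue depth."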

A Fast, Scalable Fabric

Low-latency, high-bandwidth data movement between compute nodes is essential for AI and many other HPC workloads. Intel SSF addresses this need with Intel® Omni-Path Architecture (Intel® OPA), which matches or exceeds the line speed of EDR InfiniBand while offering significant advantages in efficiency, scalability, and cost. Integrated fabric in Intel Xeon and Intel Xeon Phi processors further reduces cost and complexity and improves performance.
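Why do both latency and bandwidth matter? A common back-of-the-envelope tool is the latency-bandwidth ("alpha-beta") cost model: sending n bytes costs a fixed startup latency plus n divided by link bandwidth. The sketch below applies it to a textbook ring allreduce, the collective often used to share gradients in distributed training. It assumes a 100 Gb/s link (the EDR InfiniBand line rate) and a 1 µs latency purely for illustration; these are not measured Intel OPA figures, and real collectives are more complex than this model.

```python
def transfer_time(n_bytes: float, latency_s: float, bytes_per_s: float) -> float:
    """Point-to-point message cost: fixed startup latency plus size / bandwidth."""
    return latency_s + n_bytes / bytes_per_s


def ring_allreduce_time(n_bytes: float, nodes: int, latency_s: float,
                        bytes_per_s: float) -> float:
    """Ring allreduce: 2 * (nodes - 1) steps, each sending n_bytes / nodes."""
    if nodes < 2:
        return 0.0  # nothing to exchange on a single node
    steps = 2 * (nodes - 1)  # reduce-scatter phase + allgather phase
    return steps * transfer_time(n_bytes / nodes, latency_s, bytes_per_s)


if __name__ == "__main__":
    # Illustrative assumptions: 100 Gb/s link (12.5e9 bytes/s), 1 us latency.
    LINK_BYTES_PER_S = 100e9 / 8
    LATENCY_S = 1e-6

    small = transfer_time(8, LATENCY_S, LINK_BYTES_PER_S)      # latency-bound
    large = transfer_time(100e6, LATENCY_S, LINK_BYTES_PER_S)  # bandwidth-bound
    print(f"8 B message:    {small * 1e6:.3f} us")
    print(f"100 MB message: {large * 1e3:.3f} ms")

    for nodes in (2, 8, 64, 512):
        t = ring_allreduce_time(100e6, nodes, LATENCY_S, LINK_BYTES_PER_S)
        print(f"ring allreduce of 100 MB on {nodes:>3} nodes: {t * 1e3:.3f} ms")
```

In this model the bandwidth term levels off near 2n / bandwidth (16 ms for 100 MB here) as nodes are added, while the latency term grows linearly with node count. Small messages are almost pure latency. Both effects are why a fabric needs low latency and high bandwidth to scale.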

An Optimized HPC Software Stack

Building and maintaining an HPC software environment is a complex undertaking. Intel SSF simplifies this process with Intel HPC Orchestrator, a complete software stack that can be provisioned in minutes. This is the glue that binds everything together to transform the HPC experience.

Simpler, Faster AI Applications

A cluster is only as powerful as the applications it runs, and most deep learning algorithms were not originally designed to scale on modern computing systems. Intel has been working with researchers, vendors, and the open-source community to fill this gap, and the resulting optimized tools, libraries, and frameworks often boost performance by orders of magnitude. Intel has integrated these components into Intel HPC Orchestrator to provide advanced development and runtime support for AI applications.

Powering the Future

AI offers powerful new tools and strategies for solving complex problems in science and industry, but cost and complexity can be significant barriers to adoption. Intel SSF provides an efficient and cost-effective foundation for developing, deploying, and scaling AI applications and integrating them with traditional HPC workloads. It’s a framework for the future of AI and HPC.

[1] Intel internal estimates

[2] https://insidehpc.com/2017/03/intel-rolls-first-optane-ssd-3d-xpoint-technology/