Over at Argonne, Nils Heinonen writes that researchers are using the open source Singularity framework as a kind of Rosetta Stone for running supercomputing code almost anywhere.

Scaling code for massively parallel architectures is a common challenge the scientific community faces. When moving from a system used for development—a personal laptop, for instance, or even a university’s computing cluster—to a large-scale supercomputer like those housed at the Argonne Leadership Computing Facility, researchers traditionally would only migrate the target application: the underlying software stack would be left behind.

To help alleviate this problem, the ALCF has deployed Singularity as a service. Singularity, an open-source framework originally developed by Lawrence Berkeley National Laboratory (LBNL) and now supported by Sylabs Inc., is a tool for creating and running containers: platforms designed to package code and its dependencies so as to facilitate fast and reliable switching between computing environments. Singularity is intended specifically for scientific workflows and high-performance computing resources.

“There is a definite need for increased reproducibility and flexibility when a user is getting started here, and containers can be tremendously valuable in that regard,” said Katherine Riley, Director of Science at the ALCF. “Supporting emerging technologies like Singularity is part of a broader strategy to provide users with services and tools that help advance science by eliminating barriers to productive use of our supercomputers.”

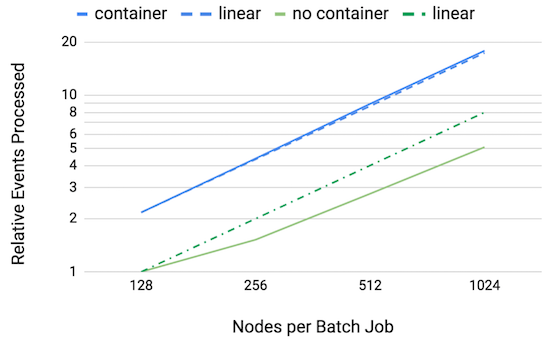

This plot shows the number of ATLAS events simulated (solid lines) with and without containerization. Linear scaling is shown (dotted lines) for reference.

The demand for such services has grown at the ALCF as a direct result of the HPC community’s diversification.

When the ALCF first opened, it was catering to a smaller user base representative of the handful of domains conventionally associated with scientific computing (high energy physics and astrophysics, for example). HPC is now a principal research tool in new fields such as genomics, which perhaps lack some of the computing culture ingrained in certain older disciplines. Moreover, researchers tackling problems in machine learning, for example, constitute a new community. This creates a strong incentive to make HPC more immediately approachable to users so as to reduce the amount of time spent preparing code and establishing migration protocols, and thus hasten the start of research.

To this end, Singularity promotes mobility of compute and reproducibility through its distributable image format. This image format incorporates the entire software stack and runtime environment of the application into a single monolithic file. Users thereby gain the ability to define, create, and maintain an application on different hosts and operating environments. Once a containerized workflow is defined, its image can be snapshotted, archived, and preserved for future use. The snapshot itself represents a boon for scientific provenance by detailing the exact conditions under which given data were generated: in theory, by providing the machine, the software stack, and the parameters, one’s work can be completely reproduced. Because reproducibility is so crucial to the scientific process, this capability can be seen as one of the primary assets of container technology.
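One way to picture the provenance idea is a small record that ties a run to its image, machine, and parameters. The following is a minimal sketch in Python using only the standard library. It is not a Singularity feature or an ALCF tool: the function names, the record fields, and the example file name are all assumptions made for illustration.

```python
import hashlib
import json
import platform
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def provenance_record(image_path, parameters):
    """Describe a run: which image, on which machine, with which parameters.

    Hypothetical helper: the field names are illustrative, not a standard.
    """
    return {
        "image_file": Path(image_path).name,
        "image_sha256": sha256_of_file(image_path),  # identifies the software stack
        "machine": platform.node(),
        "architecture": platform.machine(),
        "parameters": dict(sorted(parameters.items())),
    }


def write_record(record, out_path):
    """Store the record as JSON, for example next to the archived image."""
    Path(out_path).write_text(json.dumps(record, indent=2, sort_keys=True))
```

A caller might run `write_record(provenance_record("workflow.sif", {"seed": 42}), "run.json")`, where `workflow.sif` is a placeholder image name. Because the image is a single file, one digest is enough to pin down the whole software stack used for a given dataset.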

ALCF users have already begun to take advantage of the service. Argonne computational scientist Taylor Childers (in collaboration with a team of researchers from Brookhaven National Laboratory, LBNL, and the Large Hadron Collider’s ATLAS experiment) led ASCR Leadership Computing Challenge and ALCF Data Science Program projects to improve the performance of ATLAS software and workflows on DOE supercomputers. Every year ATLAS generates petabytes of raw data, the interpretation of which requires even larger simulated datasets, making recourse to leadership-scale computing resources an attractive option. The ATLAS software itself—a complex collection of algorithms with many different authors—is terabytes in size and features manifold dependencies, making manual installation a cumbersome task.

The researchers were able to run the ATLAS software on Theta inside a Singularity container via Yoda, an MPI-enabled Python application the team developed to communicate between CERN and ALCF systems and ensure all nodes in the latter are supplied with work throughout execution. The use of Singularity resulted in linear scaling on up to 1024 of Theta’s nodes, with event processing improved by a factor of four.
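To illustrate why keeping every node supplied with work matters, the sketch below compares two scheduling strategies in a single-process Python simulation. It is not Yoda's code and does not use MPI: the function names and the event durations are invented for illustration, and the model ignores communication costs entirely.

```python
import heapq


def static_makespan(durations, n_workers):
    """Pre-assign events round-robin; the slowest worker sets the finish time."""
    totals = [0.0] * n_workers
    for index, duration in enumerate(durations):
        totals[index % n_workers] += duration
    return max(totals)


def dynamic_makespan(durations, n_workers):
    """Hand the next event to whichever worker becomes idle first."""
    # The heap holds the time at which each worker next becomes free.
    free_at = [0.0] * n_workers
    heapq.heapify(free_at)
    for duration in durations:
        start = heapq.heappop(free_at)  # earliest idle worker takes the event
        heapq.heappush(free_at, start + duration)
    return max(free_at)


# Hypothetical event costs: two expensive events among many cheap ones.
event_durations = [8, 1, 1, 1, 8, 1, 1, 1]
print(static_makespan(event_durations, 2))   # 18.0
print(dynamic_makespan(event_durations, 2))  # 11.0
```

With these made-up durations, fixed pre-assignment leaves one worker idle for most of the run, while handing out events on demand finishes in 11 time units, the ideal for 22 units of work on two workers. A dispatcher that feeds idle nodes throughout execution aims for the second behavior.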

“All told, with this setup we were able to deliver to ATLAS 65 million proton collisions simulated on Theta using 50 million core-hours,” said Childers.

Containerization also effectively circumvented the software’s relative “unfriendliness” toward distributed shared file systems by accelerating metadata access calls; tests performed without the ATLAS software suggested that containerization could speed up such access calls by a factor of seven.

While Singularity can present a tradeoff between immediacy and computational performance (because the containerized software stacks, generally speaking, are not written to exploit massively parallel architectures), the data-intensive ATLAS project demonstrates the potential value in such a compromise for some scenarios, given the impracticality of retooling the code at its center.

Because containers afford users the ability to switch between software versions without risking incompatibility, the service has also been a mechanism to expand research and try out new computing environments. Rick Stevens—Argonne’s Associate Laboratory Director for Computing, Environment, and Life Sciences (CELS)—leads the Aurora Early Science Program project Virtual Drug Response Prediction. The machine learning-centric project, whose workflow is built from the CANDLE (CANcer Distributed Learning Environment) framework, enables billions of virtual drugs to be screened singly and in numerous combinations while predicting their effects on tumor cells. Their distribution made possible by Singularity containerization, CANDLE workflows are shared between a multitude of users whose interests span basic cancer research, deep learning, and exascale computing. Accordingly, different subsets of CANDLE users are concerned with experimental alterations to different components of the software stack.

“CANDLE users at health institutes, for instance, may have no need for exotic code alterations intended to harness the bleeding-edge capabilities of new systems, instead requiring production-ready workflows primed to address realistic problems,” explained Tom Brettin, Strategic Program Manager for CELS and a co-principal investigator on the project. Meanwhile, through the support of DOE’s Exascale Computing Project, CANDLE is being prepared for exascale deployment.

Containers are relatively new technology for HPC, and their role may well continue to grow. “I don’t expect this to be a passing fad,” said Riley. “It’s functionality that, within five years, will likely be utilized in ways we can’t even anticipate yet.”

Source: Argonne