Today WekaIO introduced Weka AI, a transformative storage solution framework underpinned by the Weka File System (WekaFS) that enables accelerated edge-to-core-to-cloud data pipelines. Weka AI is a framework of customizable reference architectures (RAs) and software development kits (SDKs) developed with leading technology alliance partners such as NVIDIA, Mellanox, and others in the Weka Innovation Network (WIN). Weka AI enables chief data officers, data scientists, and data engineers to accelerate deep learning (DL) pipelines for genomics, medical imaging, the financial services industry (FSI), and advanced driver-assistance systems (ADAS). In addition, Weka AI scales easily from entry-level deployments to large integrated solutions, provided through value-added resellers (VARs) and channel partners.

“GPUDirect Storage eliminates IO bottlenecks and dramatically reduces latency, delivering full bandwidth to data-hungry applications,” said Liran Zvibel, CEO and Co-Founder, WekaIO. “By supporting GPUDirect Storage in its implementations, Weka AI continues to deliver on its promise of the highest performance at any scale for the most data-intensive applications.”

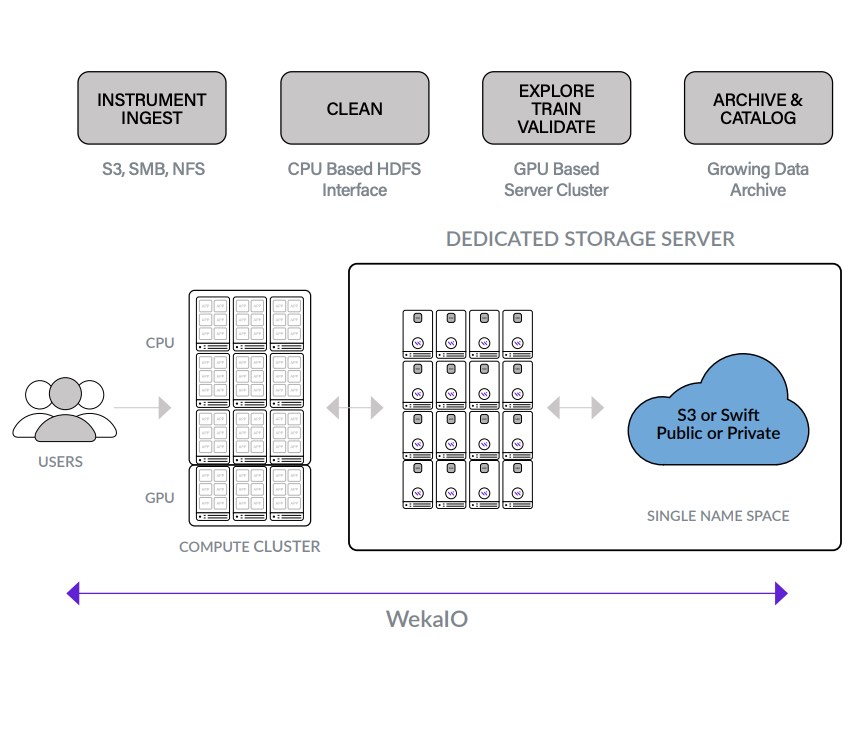

Artificial Intelligence (AI) data pipelines are inherently different from traditional file-based IO applications. Each stage of an AI data pipeline has distinct storage IO requirements: massive bandwidth for ingest and training; mixed read/write handling for extract, transform, load (ETL); ultra-low latency for inference; and a single namespace that gives visibility across the entire pipeline. Furthermore, AI at the edge is driving the need for edge-to-core-to-cloud data pipelines. The ideal solution must therefore meet all of these varied requirements and deliver timely insights at scale. Traditional solutions lack these capabilities and often fall short of the performance, cross-persona shareability, and data mobility that AI pipelines require. Today’s industries demand solutions that overcome these limitations: data management that breaks down silos to deliver operational agility, governance, and actionable intelligence.

“End-to-end application performance for AI requires feeding high-performance NVIDIA GPUs with a high-throughput data pipeline,” said Paresh Kharya, Director of Product Management for Accelerated Computing, NVIDIA. “Weka AI leverages GPUDirect Storage to provide a direct path between storage and GPUs, eliminating I/O bottlenecks for data-intensive AI applications.”

Weka AI is architected to deliver production-ready solutions and accelerate DataOps by solving the storage challenges common to IO-intensive workloads such as AI. It helps accelerate the AI data pipeline, delivering more than 73 GB/sec of bandwidth to a single GPU client. In addition, it delivers operational agility through versioning, explainability, and reproducibility, and it provides governance and compliance through in-line encryption and data protection. Solutions engineered with partners in the WIN program ensure that Weka AI covers the entire data pipeline: data collection, workspace and deep neural network (DNN) training, simulation, inference, and lifecycle management.

“Weka AI provides a solution to meet the requirements of modern AI applications,” said Kevin Tubbs, Senior Director, Technology and Business Development, Advanced Solutions Group at Penguin Computing. “We are very excited to be working with Weka to accelerate next-generation AI data pipelines.”