Continuing its long and impressive march back to prominence in data center and HPC-AI chips, AMD this morning announced the new Instinct MI200 GPU series, labeled by the company as “the first exascale-class GPU accelerators.”

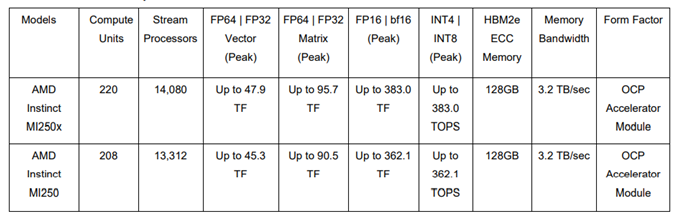

The product line includes the Instinct MI250X HPC-AI accelerator, built on the AMD CDNA 2 architecture. According to AMD, it provides up to 4.9X better performance than competitive accelerators for double-precision (FP64) HPC applications and surpasses 380 teraflops of peak theoretical half-precision (FP16) performance for AI workloads.

“AMD Instinct MI200 accelerators deliver leadership HPC and AI performance, helping scientists make generational leaps in research that can dramatically shorten the time between initial hypothesis and discovery,” said Forrest Norrod, SVP/GM, Data Center and Embedded Solutions Business Group, AMD. “With key innovations in architecture, packaging and system design, the AMD Instinct MI200 series accelerators are the most advanced data center GPUs ever, providing exceptional performance for supercomputers and data centers to solve the world’s most complex problems.”

AMD, in collaboration with the U.S. Department of Energy, Oak Ridge National Laboratory and HPE, designed Frontier, the first U.S. exascale supercomputer, scheduled to be installed at Oak Ridge by year’s end. Powered by optimized 3rd Gen AMD EPYC CPUs and AMD Instinct MI250X accelerators, it is expected to deliver more than 1.5 exaflops of peak computing power.

Key features of the AMD Instinct MI200 series accelerators, according to AMD, include:

- AMD CDNA 2 architecture – 2nd Gen Matrix Cores accelerating FP64 and FP32 matrix operations, delivering up to 4X the peak theoretical FP64 performance vs. AMD previous gen GPUs

- Packaging Technology – Industry-first multi-die GPU design with AMD’s new 2.5D Elevated Fanout Bridge (EFB) technology delivers 1.8X more cores and 2.7X higher memory bandwidth vs. AMD previous gen GPUs, offering the industry’s best aggregate peak theoretical memory bandwidth at 3.2 terabytes per second

- 3rd Gen AMD Infinity Fabric technology – Up to 8 Infinity Fabric links connect the AMD Instinct MI200 with optimized EPYC CPUs and other GPUs in the node to enable unified CPU/GPU memory coherency and maximize system throughput, allowing for an easier on-ramp for CPU codes to tap the power of accelerators.

AMD ROCm is an open software platform allowing researchers to tap the power of AMD Instinct accelerators. The ROCm platform is built on the foundation of open portability, supporting environments across multiple accelerator vendors and architectures. With ROCm 5.0, AMD extends its open platform for HPC and AI applications with AMD Instinct MI200 series accelerators, increasing accessibility of ROCm for developers.

Through the AMD Infinity Hub, researchers, data scientists and end-users can find, download and install containerized HPC apps and ML frameworks that are optimized and supported on AMD Instinct accelerators and ROCm. The hub currently offers a range of containers supporting Radeon Instinct MI50, AMD Instinct MI100 or AMD Instinct MI200 accelerators, including applications such as Chroma, CP2k, LAMMPS, NAMD and OpenMM, along with the popular ML frameworks TensorFlow and PyTorch. New containers are continually being added to the hub.
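For readers who want a sense of what running PyTorch on ROCm looks like in practice, here is a minimal sketch, not taken from AMD's materials. It assumes a ROCm build of PyTorch is installed, for instance inside one of the hub's containers. The helper name `describe_accelerator` is hypothetical. ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` namespace used for other GPUs, so existing scripts often need few changes.

```python
def describe_accelerator() -> str:
    """Return a one-line summary of the GPU backend PyTorch can see.

    Hypothetical helper for illustration; not part of ROCm or PyTorch.
    """
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # ROCm builds of PyTorch reuse the torch.cuda namespace for AMD GPUs.
    if not torch.cuda.is_available():
        return "PyTorch found no GPU"

    # torch.version.hip is set on ROCm builds and is None on CUDA builds.
    hip_version = getattr(torch.version, "hip", None)
    backend = f"ROCm/HIP {hip_version}" if hip_version else "non-ROCm build"
    return f"{torch.cuda.get_device_name(0)} ({backend})"


if __name__ == "__main__":
    print(describe_accelerator())
```

On a machine without PyTorch or without a visible GPU, the function returns a short message instead of raising, so it can be run anywhere as a quick check.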

The AMD Instinct MI250X and AMD Instinct MI250 are available in the open-hardware compute accelerator module, or OCP Accelerator Module (OAM), form factor. The AMD Instinct MI210 will be available in a PCIe card form factor in OEM servers. The AMD Instinct MI250X accelerator is currently available from HPE in the HPE Cray EX Supercomputer, and additional AMD Instinct MI200 series accelerators are expected in systems from major OEM and ODM partners in enterprise markets in Q1 2022, including ASUS, ATOS, Dell Technologies, Gigabyte, Hewlett Packard Enterprise (HPE), Lenovo and Supermicro.